I'm trying to overlap computation and memory operations in the HuggingFace SwitchTransformer.

Here’s a detailed explanation.

- The memory operation moves data from CPU to GPU, 4 MB per block.

- The number of blocks varies (typically 2 to 6 in total).

- The computation consists of several very small operations, such as GEMMs, each taking tens to hundreds of microseconds.

- I'm using CUDA streams: I created two streams and issued the memory operations on one and the computation on the other.

- However, the two did not overlap.
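A minimal sketch of the two-stream prefetch pattern described above, assuming the host blocks can be pinned once ahead of time (for example at model load). The function name `prefetch` and the block sizes are illustrative and not from the original code. The sketch records a CUDA event on the copy stream so that only the work that actually consumes the prefetched tensors waits for the copies.

```python
import torch


def prefetch(host_blocks, copy_stream):
    """Copy host tensors to the GPU on copy_stream; return the GPU tensors and an event.

    host_blocks are assumed to be pinned already (pin once, e.g. at model load time),
    so that non_blocking=True can return before the copies finish.
    """
    with torch.cuda.stream(copy_stream):
        dev_blocks = [b.to("cuda", non_blocking=True) for b in host_blocks]
        done = torch.cuda.Event()
        done.record()  # records on copy_stream, the current stream inside this block
    return dev_blocks, done


if torch.cuda.is_available():
    copy_stream = torch.cuda.Stream()
    # Four 4 MiB blocks (1024 * 1024 float32 values each), pinned once up front.
    blocks = [torch.rand(1024, 1024).pin_memory() for _ in range(4)]
    x = torch.rand(1024, 1024, device="cuda")

    dev_blocks, done = prefetch(blocks, copy_stream)  # returns without waiting for the copies
    y = x @ x  # enqueued on the default stream while the copies are in flight

    # Only the work that consumes the prefetched blocks waits for the copies.
    torch.cuda.current_stream().wait_event(done)
    for d in dev_blocks:
        # Tell the caching allocator these tensors are also used on the default stream.
        d.record_stream(torch.cuda.current_stream())
    z = y @ dev_blocks[0]
    torch.cuda.synchronize()
```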

s_0 = torch.cuda.Stream() # Create a new stream.

s_1 = torch.cuda.Stream() # Create a new stream.

with torch.cuda.stream(s_0):

this_gate_info = router_mask, router_probs, router_logits

router_mask = router_mask.bool()

idx_mask = router_mask.transpose(1,2)

idx_mask = torch.cat(torch.split(idx_mask, 1, dim=0), dim=2)

idx_mask = idx_mask.sum(dim=2)

idx_mask = idx_mask.squeeze()

if next_blk is not None:

active_idx = torch.nonzero(idx_mask, as_tuple=True)

for idx in active_idx[0]:

tmp = getattr(next_blk.layer[-1].mlp.experts, "expert_{}".format(idx))

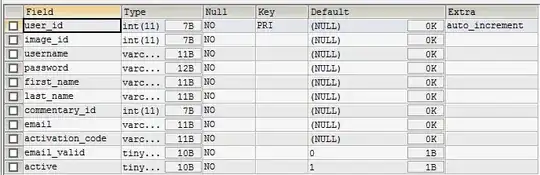

tmp.prefetching() ## THIS IS MEMORY OPERATION COLORED GREEN IN THE FIGURE

with torch.cuda.stream(s_1):

delayed_router_mask, delayed_router_probs, delayed_router_logits = delayed_gate_info

delayed_expert_index = torch.argmax(delayed_router_mask, dim=-1)

delayed_router_mask = delayed_router_mask.bool()

delayed_idx_mask = delayed_router_mask.transpose(1,2)

delayed_idx_mask = torch.cat(torch.split(delayed_idx_mask, 1, dim=0), dim=2)

delayed_idx_mask = delayed_idx_mask.sum(dim=2)

delayed_idx_mask = delayed_idx_mask.squeeze()

for idx, expert in enumerate(self.experts.values()):

if delayed_idx_mask[idx] != 0:

expert_counter = expert_counter + 1

next_states[delayed_router_mask[:, :, idx]] = expert(hidden_states[delayed_router_mask[:, :, idx]], None, None, None)
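In the snippet above, `"expert_{}".format(idx)` (with `idx` taken from a CUDA tensor) and `if delayed_idx_mask[idx] != 0` each turn a GPU value into a Python value, and each such conversion makes the CPU wait for the GPU. That can delay the kernel launches on the other stream; whether it explains the missing overlap here is an assumption worth checking, for example by calling `torch.cuda.set_sync_debug_mode("warn")` before the forward pass, which can flag many synchronizing calls. The hypothetical helper below computes the same per-expert token counts and moves the active indices to the host in one transfer instead of one per element.

```python
import torch


def active_expert_ids(router_mask: torch.Tensor) -> list[int]:
    """Indices of experts that received at least one token.

    router_mask: (batch, seq_len, num_experts) routing mask, as in the snippet above.
    """
    # Tokens routed to each expert, summed over the batch and sequence dims
    # (the same counts as the transpose/split/cat/sum sequence in the snippet).
    tokens_per_expert = router_mask.bool().sum(dim=(0, 1))
    # .tolist() copies the indices to the host once: a single sync point on CUDA,
    # instead of one implicit .item() per element when looping over a CUDA tensor.
    return torch.nonzero(tokens_per_expert).flatten().tolist()


# Usage: two tokens routed to experts 1 and 3 out of 4.
mask = torch.zeros(2, 3, 4)
mask[0, 0, 1] = 1
mask[1, 2, 3] = 1
print(active_expert_ids(mask))  # [1, 3]
```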

And here are my questions.

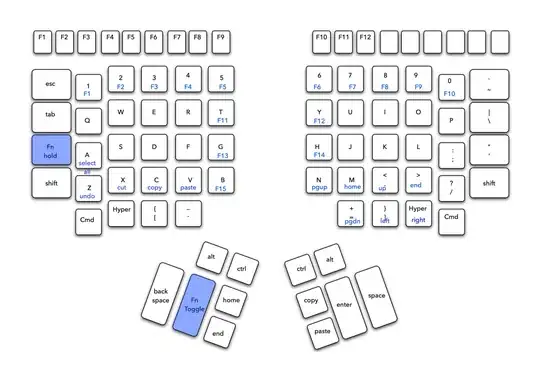

First, I learned that to overlap a CPU-to-GPU memory copy with computation, the host memory must be pinned. In my case, however, as the figure shows, the copy is from pageable memory, not pinned memory. Is this the reason the operations do not overlap?
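For reference, a minimal check of whether a host tensor is pinned, and how to make a pinned copy and transfer it on a side stream. It uses only standard PyTorch calls (`Tensor.is_pinned`, `Tensor.pin_memory`, `non_blocking=True`); the tensor size is illustrative.

```python
import torch

a = torch.rand(1024, 1024)  # 4 MiB of float32 in ordinary (pageable) host memory

if torch.cuda.is_available():
    print(a.is_pinned())         # False: torch.rand allocates pageable memory by default
    a_pinned = a.pin_memory()    # page-locked copy of the same data
    print(a_pinned.is_pinned())  # True

    s_1 = torch.cuda.Stream()
    with torch.cuda.stream(s_1):
        # With a pinned source and non_blocking=True, the call can return before the
        # copy finishes. With the default non_blocking=False, the call returns only
        # after the copy has completed.
        b = a_pinned.to("cuda", non_blocking=True)

    # Make the current stream wait for s_1 before reading b.
    torch.cuda.current_stream().wait_stream(s_1)
    print(torch.equal(b.cpu(), a))  # True
```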

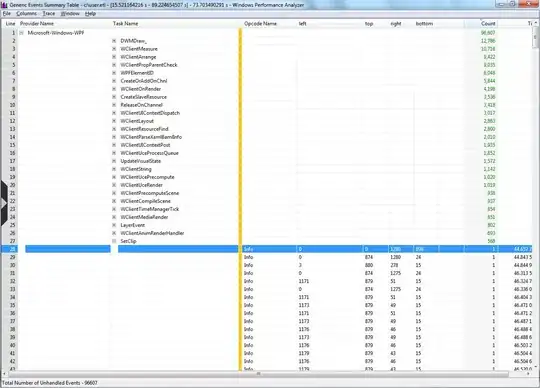

Second, I ran an experiment to test this with a simple example (overlapping GEMMs with a CPU-to-GPU copy). Here are the code and the output.

```python
import torch
import torch.nn as nn
import torch.cuda.nvtx as nvtx_cuda

torch.cuda.cudart().cudaProfilerStart()

cuda = torch.device('cuda')

nvtx_cuda.range_push("STREAM INIT")
s_0 = torch.cuda.Stream()  # Create a new stream.
s_1 = torch.cuda.Stream()  # Create a new stream.
nvtx_cuda.range_pop()

A = torch.rand(size=(1024*4, 1024*4), device="cuda")
B = torch.rand(size=(1024*4, 1024*4), device="cuda")
C = torch.rand(size=(1024*4, 1024*4), device="cuda")
D = torch.rand(size=(1024*4, 1024*4), device="cuda")
E = torch.rand(size=(1024*4, 1024*4), device="cuda")
F = torch.rand(size=(1024*4, 1024*4), device="cuda")

a = torch.rand(size=(1024*4, 1024*4), pin_memory=False)
b = torch.rand(size=(1024*4, 1024*4), device="cuda")

iter = 10
for i in range(iter):
    with torch.cuda.stream(s_0):
        nvtx_cuda.range_push("S0")
        C = A.matmul(B)
        F = D.matmul(E)
        nvtx_cuda.range_pop()

    with torch.cuda.stream(s_1):
        nvtx_cuda.range_push("S1")
        nvtx_cuda.range_pop()
        b = a.to(cuda)

torch.cuda.cudart().cudaProfilerStop()
```

This is pageable memory.

This is pinned memory.

It seems that copies from pageable memory can also overlap with computation. So why doesn't my application overlap?
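One difference between the toy example and the application, offered as a hypothesis rather than a confirmed diagnosis: in the toy example the GEMMs on `s_0` are enqueued before the copy on `s_1`, while in the application `prefetching()` is called before the expert computations are launched. If `prefetching()` performs a blocking copy from pageable memory (an assumption, since its implementation is not shown), the Python thread cannot launch the compute kernels until every copy has finished, so the GPU never has both kinds of work queued at once. The sketch below, with hypothetical helper names and the toy example's stream roles (`s_0` for compute, `s_1` for copies), times both issue orders.

```python
import time

import torch


def timed(fn):
    # Wall-clock time of fn, including all GPU work it enqueues.
    torch.cuda.synchronize()
    start = time.perf_counter()
    fn()
    torch.cuda.synchronize()
    return time.perf_counter() - start


def gemms(x, w, s_0, n=50):
    # Kernel launches are asynchronous: this returns once the GEMMs are queued on s_0.
    with torch.cuda.stream(s_0):
        for _ in range(n):
            y = x @ w
    return y


def blocking_copies(blocks, s_1):
    # Pageable source with the default non_blocking=False: the host waits for each copy.
    with torch.cuda.stream(s_1):
        return [blk.to("cuda") for blk in blocks]


def copy_first(x, w, blocks, s_0, s_1):
    blocking_copies(blocks, s_1)  # the GEMMs cannot be launched until the copies finish
    gemms(x, w, s_0)


def compute_first(x, w, blocks, s_0, s_1):
    gemms(x, w, s_0)  # the GEMMs are already queued when the host starts blocking
    blocking_copies(blocks, s_1)


if torch.cuda.is_available():
    s_0, s_1 = torch.cuda.Stream(), torch.cuda.Stream()
    x = torch.rand(1024, 1024, device="cuda")
    w = torch.rand(1024, 1024, device="cuda")
    blocks = [torch.rand(1024, 1024) for _ in range(6)]  # six 4 MiB pageable blocks
    timed(lambda: compute_first(x, w, blocks, s_0, s_1))  # warm-up
    print("copy first:   ", timed(lambda: copy_first(x, w, blocks, s_0, s_1)))
    print("compute first:", timed(lambda: compute_first(x, w, blocks, s_0, s_1)))
```

If the hypothesis holds, the "compute first" time should be noticeably closer to the longer of the two workloads than to their sum.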