I am building a cellular automaton and ran a performance test on the code.

I did it like this:

    from time import perf_counter_ns
    import numpy

    while True:
        # fnc1 ... fnc6 stand for the NumPy-based steps of one cycle
        time_1 = perf_counter_ns()
        numpy.fnc1()
        time_2 = perf_counter_ns()
        numpy.fnc2()
        time_3 = perf_counter_ns()
        numpy.fnc3()
        time_4 = perf_counter_ns()
        numpy.fnc4()
        time_5 = perf_counter_ns()
        numpy.fnc5()
        time_6 = perf_counter_ns()
        numpy.fnc6()
        time_7 = perf_counter_ns()

        # Duration of each step in nanoseconds
        diff_1 = time_2 - time_1
        diff_2 = time_3 - time_2
        diff_3 = time_4 - time_3
        diff_4 = time_5 - time_4
        diff_5 = time_6 - time_5
        diff_6 = time_7 - time_6

        print(results)

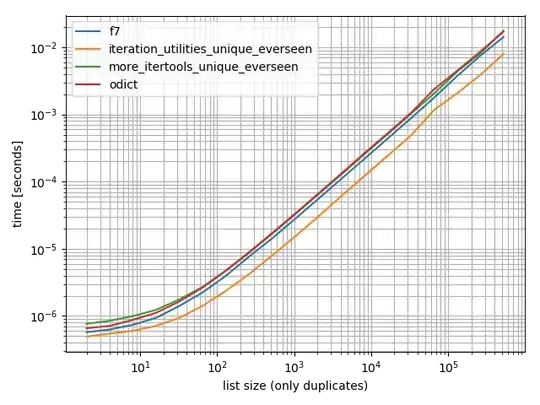
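For reference, here is a self-contained sketch of the same measurement scheme that can be run as-is. The three lambdas are hypothetical stand-ins for `fnc1` ... `fnc6` (the real steps are not shown here), and `time_steps` is a name made up for this example. As in the loop above, each timestamp closes one step and opens the next.

```python
from time import perf_counter_ns

import numpy as np


def time_steps(steps, n_cycles):
    """Run every step once per cycle; return per-step durations in ns.

    The result has shape (n_cycles, len(steps)).
    """
    stamps = np.empty((n_cycles, len(steps) + 1), dtype=np.int64)
    for cycle in range(n_cycles):
        stamps[cycle, 0] = perf_counter_ns()
        for i, step in enumerate(steps, start=1):
            step()
            # This timestamp ends step i and starts step i + 1
            stamps[cycle, i] = perf_counter_ns()
    # Differences of neighbouring timestamps correspond to diff_1, diff_2, ...
    return np.diff(stamps, axis=1)


# Hypothetical stand-ins for the real NumPy steps of the automaton
grid = np.random.default_rng(0).integers(0, 2, size=(256, 256))
steps = [
    lambda: np.roll(grid, 1, axis=0),
    lambda: grid + grid,
    lambda: grid.sum(),
]

durations = time_steps(steps, n_cycles=100)
print(durations.mean(axis=0))  # mean duration of each step in ns
```

Storing the durations in a preallocated array instead of printing them every cycle also keeps terminal output out of the measured loop.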

However, I found that the runtimes are somewhat inconsistent. I did some longer tests, for about 8 hours.

It seems the performance is "jumping around": for an hour it runs quicker, then slower, and within that hour there are smaller stretches where it is quicker, then slower again...

What is even more disturbing, the slower and quicker periods get longer over time. So I am pretty sure it is not a regular system process.

The tests were conducted on Debian 11 with an AMD Ryzen 5 2600 six-core processor.

The GUI was running, but no browser, etc. I monitored the overall processor usage and most of the cores did nothing.

Note that it is a cellular automaton and it evolved during the test, so the input data is not the same; it changes constantly.

However, I don't think the time it takes to add up arrays would differ based on the numbers in the arrays...

Also, if the performance change was data-driven, it would be very unlikely to see random data producing about the same runtimes for a whole minute...
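One way to test the data-driven explanation would be to time the same operation on inputs that never change. If those timings still switch between a slow and a quick state, the data cannot be the cause. A minimal sketch, assuming a plain array addition stands in for the real steps (`time_repeated`, `a`, and `b` are hypothetical names for this example):

```python
from time import perf_counter_ns

import numpy as np


def time_repeated(op, n_repeats):
    """Call op() n_repeats times and return each call's duration in ns."""
    out = np.empty(n_repeats, dtype=np.int64)
    for i in range(n_repeats):
        start = perf_counter_ns()
        op()
        out[i] = perf_counter_ns() - start
    return out


# Fixed inputs: the values never change between repetitions
rng = np.random.default_rng(0)
a = rng.random((512, 512))
b = rng.random((512, 512))

fixed = time_repeated(lambda: a + b, n_repeats=200)
print("median:", np.median(fixed), "min:", fixed.min(), "max:", fixed.max())
```

A real check would run this for as long as the original test, since the slow and quick periods last minutes to hours.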

Question: What am I seeing, and what causes it?

My hunch is that it may have something to do with Python thread scheduling, but I don't know whether NumPy uses threads, and I only have 1 thread in my code...
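The thread question could be checked directly. The sketch below is Linux-only and assumes `/proc/self/status` and `os.sched_setaffinity` are available (both helper names are made up for this example). It counts OS-level threads before and after a NumPy operation, then pins the calling thread to a single core so that core migration can be ruled out in a later run.

```python
import os


def native_thread_count():
    """Return the OS-level thread count of this process, or None.

    Reads /proc/self/status, so it only works on Linux.
    """
    try:
        with open("/proc/self/status") as status:
            for line in status:
                if line.startswith("Threads:"):
                    return int(line.split()[1])
    except OSError:
        pass
    return None


def pin_to_first_allowed_core():
    """Pin the calling thread to one allowed core; return the new affinity.

    Threads that already exist keep their own affinity.
    Returns None where the call is unsupported or fails.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    try:
        core = min(os.sched_getaffinity(0))
        os.sched_setaffinity(0, {core})
        return os.sched_getaffinity(0)
    except OSError:
        return None


threads_before = native_thread_count()

# Imported late on purpose, so the count above excludes NumPy's threads
import numpy as np

a = np.random.default_rng(0).random((300, 300))
_ = a @ a  # a matrix product may start worker threads in the BLAS backend
threads_after = native_thread_count()

pinned = pin_to_first_allowed_core()
print("threads before:", threads_before, "after:", threads_after)
print("affinity after pinning:", pinned)
```

If the thread count grows after the NumPy call, the library is using worker threads even though the Python code has only one.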

There is at least a 2x performance difference between the "slower" and the "quicker" state on average, so it would be very beneficial to make the code stick in the "quicker" state.

I included 2 images:

One of the images is the value of diff_3, cycle-after-cycle, zoomed in more-and-more.

The other image shows diff_1, diff_2, ... diff_6 all in one plot. At this scale it is difficult to see the details, but diff_3 and diff_5 are somewhat comparable. As you can see, the "quicker" and "slower" periods match up, but not exactly.

The images cover about 6.5 million cycles.
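With millions of points per plot, the two states are easier to compare after reducing the data to one median per block of cycles. A minimal sketch of that reduction, using synthetic values in place of the real measurements (`block_medians` and `demo` are hypothetical names):

```python
import numpy as np


def block_medians(durations, block_size):
    """Return the median of each consecutive block of block_size samples.

    Samples left over after the last full block are dropped.
    """
    durations = np.asarray(durations)
    n_blocks = len(durations) // block_size
    trimmed = durations[: n_blocks * block_size]
    return np.median(trimmed.reshape(n_blocks, block_size), axis=1)


# Synthetic example: a "quick" state of 100 ns, then a "slow" state of 200 ns
demo = np.concatenate([np.full(1000, 100), np.full(1000, 200)])
print(block_medians(demo, 500))  # [100. 100. 200. 200.]
```

The median is used instead of the mean so that single outlier cycles do not blur the boundary between the two states.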