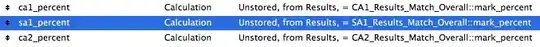

I’m working in OpenCV to take two timed images of a golf ball in flight and determine the 3-axis spin velocities of the ball based on the differences between the two images as exhibited by their dimple patterns (which are irregular enough to allow this to work). I’ve already filtered the photos to more easily identify the dimple features (as seen here, though some portions will be ignored).

I know the ball’s radius and its distance from the camera, so there should be a unique 3D coordinate for every pixel of the ball in my 2D photo: each pixel should be able to be orthogonally projected until it intersects the point where the surface of the ball would be in space.

My problem now is how to project the 2D points of my calibrated camera image out to where they would have been on the golf ball. That is, if “project” is even the correct term; I’m pretty new to machine vision. ;) As above, I believe each point should be able to be projected to a corresponding real-world coordinate on the hemisphere that is facing the camera. It would be even better if I could project the entire image in one operation rather than pixel by pixel. Does anyone have code or an algorithm, or even a gentle nudge in the right direction, to make that happen?
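To make the question concrete, here is a minimal sketch of the kind of mapping I have in mind, written as a ray-sphere intersection under a pinhole camera model rather than a strictly orthogonal projection. Everything in it is an assumption for illustration: the function name `backproject_to_sphere`, the intrinsic matrix `K`, and the premise that the image is already undistorted and that the ball centre is known in camera coordinates.

```python
import numpy as np

def backproject_to_sphere(pixels, K, center, radius):
    """Map Nx2 pixel coords to Nx3 points on the camera-facing side of a sphere.

    pixels: (N, 2) array of (u, v) in an undistorted image (assumed).
    K: 3x3 intrinsic matrix; center: sphere centre in camera coordinates.
    Returns (points, hit); rows of points where hit is False are NaN.
    """
    pixels = np.asarray(pixels, dtype=float)
    center = np.asarray(center, dtype=float)
    # Ray direction through each pixel: d = K^-1 [u, v, 1]^T, normalised.
    homog = np.column_stack([pixels, np.ones(len(pixels))])
    dirs = homog @ np.linalg.inv(K).T
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
    # Solve |t*d - c|^2 = r^2 and keep the nearer root (the visible surface).
    b = dirs @ center
    disc = b**2 - center @ center + radius**2
    hit = disc >= 0  # rays with a negative discriminant miss the ball
    t = b - np.sqrt(np.where(hit, disc, np.nan))
    return dirs * t[:, None], hit
```

Because the function is vectorised, a whole image could be handled in one call by building the pixel list from a grid, for example `np.indices((h, w))` reshaped to an `(N, 2)` array of `(u, v)` pairs. If lens distortion matters, `cv2.undistortPoints` could be applied to the pixel coordinates first.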

Ultimately, once I have the 3D coordinates of the dimples or their centers, I want to rotate them in 3D space by various small Euler angles to various candidate positions, then un-project those points back onto a 2D image (as each would look to the camera, and ignoring any points that rotate around to the back of the ball). So hopefully this process is reversible. Then I could compare the second golf ball image to each of those candidate images to see which is closest, and use the corresponding rotation angles to determine the spin. I’m basically trying to replicate the great work of the researchers in this article: (https://www.researchgate.net/publication/313541573_Estimation_of_a_large_relative_rotation_between_two_images_of_a_fast_spinning_marker-less_golf_ball). The authors no longer have the code.
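The rotate-and-reproject step could be sketched roughly as follows. This is only an illustration under my own assumptions, not the method from the article: the names `euler_to_matrix` and `rotate_and_project` are hypothetical, the Euler convention (R = Rz·Ry·Rx) is an arbitrary choice, and the camera is again treated as an undistorted pinhole with intrinsic matrix `K`.

```python
import numpy as np

def euler_to_matrix(rx, ry, rz):
    """Rotation matrix R = Rz @ Ry @ Rx, angles in radians (assumed convention)."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def rotate_and_project(points, R, K, center):
    """Rotate Nx3 surface points about the ball centre, then project to pixels.

    Returns (pixels, visible); visible marks points still facing the camera.
    """
    points = np.asarray(points, dtype=float)
    center = np.asarray(center, dtype=float)
    rotated = (points - center) @ R.T + center
    # A surface point faces the camera when its outward normal opposes
    # the viewing ray from the camera origin to that point.
    normals = rotated - center
    visible = np.einsum("ij,ij->i", normals, rotated) < 0
    # Pinhole projection: divide by depth after applying the intrinsics.
    uvw = rotated @ K.T
    return uvw[:, :2] / uvw[:, 2:3], visible
```

For each candidate triple of angles, the pixels flagged `visible` could be drawn into a blank image and compared with the second photo. OpenCV’s `cv2.Rodrigues` and `cv2.projectPoints` cover similar ground and also handle lens distortion, so they may be a better fit than hand-rolled matrices.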

I’d appreciate any direction on this. I’ve started looking at the OpenCV sphericalWarper, and I’ve also tried simpler registration techniques, but I don’t think those are going to work.

I’m aware of (Transforming 2D image coordinates to 3D world coordinates with z = 0) and (Computing x,y coordinate (3D) from image point). I’m also aware of the questions involving stereo cameras and 3D to 2D projection. Thank you!