I'm trying to use the scanorama package in R and am having some trouble with parallelization. The scanorama README links to this set of instructions for making sure numpy uses multiple CPUs. My situation is a little different because I'm using a miniconda installation of Python, but I tried to adapt the instructions.

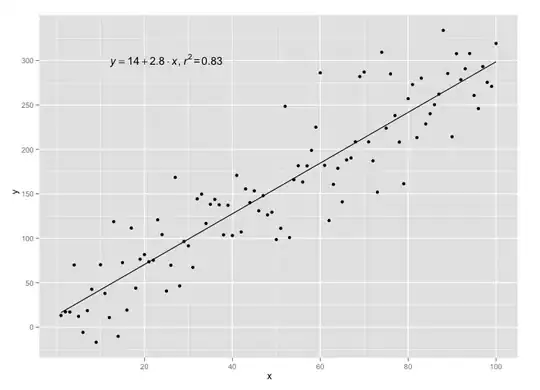

First, I created and ran the test.py script listed in the above link. Checking it with `top` shows the process at 100% CPU, which indicates to me that it's NOT using multiple CPUs. I don't have (or don't know the location of) the `dist-packages` directory referenced in that link (conda installs packages under `site-packages` instead); all I can find is `/home/lab/miniconda3/lib/python3.7/site-packages/numpy/core`, which contains:

```
_multiarray_tests.cpython-37m-x86_64-linux-gnu.so
_multiarray_umath.cpython-37m-x86_64-linux-gnu.so
```

Running `ldd` on both of those gives me:

```
$ ldd _multiarray_tests.cpython-37m-x86_64-linux-gnu.so
    linux-vdso.so.1 (0x00007ffd0bfb9000)
    libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f6d5831c000)
    /lib64/ld-linux-x86-64.so.2 (0x00007f6d5870d000)

$ ldd _multiarray_umath.cpython-37m-x86_64-linux-gnu.so
    linux-vdso.so.1 (0x00007fff5a5a8000)
    libcblas.so.3 => /home/lab/miniconda3/lib/python3.7/site-packages/numpy/core/../../../../libcblas.so.3 (0x00007f9eb1179000)
    libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007f9eb0ddb000)
    libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007f9eb0bbc000)
    libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f9eb07cb000)
    /lib64/ld-linux-x86-64.so.2 (0x00007f9eb1a4e000)
    libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007f9eb05c7000)
```

Neither of these appears to link against OpenBLAS. I installed OpenBLAS via conda following this link, and I also tried reinstalling numpy from conda-forge (which this link says should use OpenBLAS).
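The relative path in the `ldd` output for `_multiarray_umath` can be resolved mechanically to see which directory `libcblas.so.3` actually lives in. A minimal sketch, with a hypothetical helper `resolve_lib_path`: `os.path.normpath` collapses the `..` components textually, and `os.path.realpath` additionally follows symlinks when the file exists. Whether `libcblas.so.3` is a symlink to some other BLAS library on this install is an assumption to check on the machine itself.

```python
import os

# Path exactly as reported by ldd for _multiarray_umath above.
LDD_PATH = ("/home/lab/miniconda3/lib/python3.7/site-packages/numpy/core/"
            "../../../../libcblas.so.3")


def resolve_lib_path(path):
    """Return (normalized path, symlink-resolved path) for a library path.

    Hypothetical helper: normpath only collapses '..' textually, while
    realpath also follows symlinks if the file exists on this machine.
    """
    normalized = os.path.normpath(path)
    resolved = os.path.realpath(path)
    return normalized, resolved


if __name__ == "__main__":
    normalized, resolved = resolve_lib_path(LDD_PATH)
    print("normalized:", normalized)  # inside /home/lab/miniconda3/lib
    # Differs from 'normalized' if libcblas.so.3 (or a directory on the
    # way to it) is a symlink; the target's name hints at the BLAS vendor.
    print("resolved:  ", resolved)
```

Run on the machine in question, the second line is the one that would reveal what `libcblas.so.3` really points to.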

In Python, if I check the numpy configuration, I get:

```
Python 3.7.10 (default, Jun 4 2021, 14:48:32)
[GCC 7.5.0] :: Anaconda, Inc. on linux
Type "help", "copyright", "credits" or "license" for more information.
>>> import numpy
>>> numpy.show_config()
blas_info:
    libraries = ['cblas', 'blas', 'cblas', 'blas']
    library_dirs = ['/home/lab/miniconda3/lib']
    include_dirs = ['/home/lab/miniconda3/include']
    language = c
    define_macros = [('HAVE_CBLAS', None)]
blas_opt_info:
    define_macros = [('NO_ATLAS_INFO', 1), ('HAVE_CBLAS', None)]
    libraries = ['cblas', 'blas', 'cblas', 'blas']
    library_dirs = ['/home/lab/miniconda3/lib']
    include_dirs = ['/home/lab/miniconda3/include']
    language = c
lapack_info:
    libraries = ['lapack', 'blas', 'lapack', 'blas']
    library_dirs = ['/home/lab/miniconda3/lib']
    language = f77
lapack_opt_info:
    libraries = ['lapack', 'blas', 'lapack', 'blas', 'cblas', 'blas', 'cblas', 'blas']
    library_dirs = ['/home/lab/miniconda3/lib']
    language = c
    define_macros = [('NO_ATLAS_INFO', 1), ('HAVE_CBLAS', None)]
    include_dirs = ['/home/lab/miniconda3/include']
```

I'm not entirely sure how to interpret this. I can see that there is BLAS information, but neither `/home/lab/miniconda3/lib` nor `/home/lab/miniconda3/include` contains any of the libraries listed above.
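One detail that may help when reading the `show_config()` output: names such as `'cblas'` are linker names, so `-lcblas` refers to a file called `libcblas.so` (at run time, the versioned soname such as the `libcblas.so.3` seen in the `ldd` output), not to a file literally named `cblas`. A minimal sketch, using a hypothetical helper `find_libraries`, that searches the listed `library_dirs` for `lib<name>.so*` files:

```python
import glob
import os


def find_libraries(names, library_dirs):
    """Map each linker name (e.g. 'cblas') to matching lib<name>.so* files.

    Hypothetical helper: '-lcblas' refers to libcblas.so, and the runtime
    soname is usually versioned (libcblas.so.3), so both are matched here.
    """
    found = {}
    for name in dict.fromkeys(names):  # drop duplicates, keep first-seen order
        matches = []
        for directory in library_dirs:
            pattern = os.path.join(directory, "lib%s.so*" % name)
            matches.extend(sorted(glob.glob(pattern)))
        found[name] = matches
    return found


if __name__ == "__main__":
    # Names and directory copied from the lapack_opt_info section above.
    names = ['lapack', 'blas', 'lapack', 'blas', 'cblas', 'blas', 'cblas', 'blas']
    for name, paths in find_libraries(names, ['/home/lab/miniconda3/lib']).items():
        print(name, "->", paths or "not found")
```

Note that the pattern `libblas.so*` does not match `libcblas.so*`, so each name is reported separately.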

I also found this post and this one about making sure the CPU affinity isn't being overwritten. Going through those, however, it appears that all of the CPUs ARE available:

```
>>> import psutil
>>> p = psutil.Process()
>>> p.cpu_affinity()
[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]
>>> all_cpus = list(range(psutil.cpu_count()))
>>> p.cpu_affinity(all_cpus)
>>> p.cpu_affinity()
[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23]
```
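CPU affinity is only one possible limit. BLAS and OpenMP builds also commonly read thread-count environment variables such as `OMP_NUM_THREADS`, `OPENBLAS_NUM_THREADS` and `MKL_NUM_THREADS`, which the checks above don't cover. A minimal standard-library sketch, with a hypothetical helper `thread_limits`, that reports the affinity through `os.sched_getaffinity` (Linux only; the same information as psutil's `cpu_affinity()`) alongside those variables:

```python
import os

# Environment variables that common BLAS/OpenMP builds read to cap threads.
THREAD_VARS = ("OMP_NUM_THREADS", "OPENBLAS_NUM_THREADS", "MKL_NUM_THREADS")


def thread_limits():
    """Report usable CPUs and the thread-count variables (None if unset)."""
    if hasattr(os, "sched_getaffinity"):  # Linux: CPUs this process may run on
        cpus = sorted(os.sched_getaffinity(0))
    else:  # fallback: assume every CPU is usable
        cpus = list(range(os.cpu_count() or 1))
    env = {name: os.environ.get(name) for name in THREAD_VARS}
    return {"cpus": cpus, "env": env}


if __name__ == "__main__":
    info = thread_limits()
    print("usable CPUs:", len(info["cpus"]))
    for name, value in info["env"].items():
        print(name, "=", value if value is not None else "(unset)")
```

These variables are generally read when the BLAS library is loaded, so they would need to be set before numpy is imported (in the R case, before reticulate imports it).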

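But if I run those psutil commands and then run the following in the same session:

```python
import numpy as np

size = 4000  # assumed value for illustration; the size actually used isn't shown
a = np.random.random_sample((size, size))
b = np.random.random_sample((size, size))
n = np.dot(a, b)
```

and check `top`, I still see 100% CPU usage (a single core) and only a small amount of memory usage.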
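Besides watching `top`, another way to check for multithreading is to compare CPU time with wall-clock time for the same call. `time.process_time()` counts CPU time across all threads of the process, so a ratio near 1 suggests a single busy core, while a multithreaded BLAS should give a ratio well above 1. A minimal sketch with a hypothetical helper `cpu_wall_ratio`:

```python
import time


def cpu_wall_ratio(fn, *args):
    """Run fn(*args) once; return (CPU seconds / wall seconds, result).

    process_time() counts CPU time of every thread in this process,
    so a ratio near 1.0 means roughly one busy core.
    """
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    result = fn(*args)
    cpu = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    return (cpu / wall if wall > 0 else float("nan")), result


if __name__ == "__main__":
    # Pure-Python stand-in workload; single-threaded by construction.
    ratio, _ = cpu_wall_ratio(sum, range(10_000_000))
    print("CPU/wall ratio: %.2f" % ratio)
```

With numpy, the same helper could wrap the multiplication from the snippet above, e.g. `cpu_wall_ratio(np.dot, a, b)`.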

The last thing I tried was Python multiprocessing, as described in this link. Here is how it looks in R:

```r
# Python module loading in R
library(reticulate)
scanorama <- import("scanorama")
mp <- import("multiprocessing")
pool <- mp$Pool()

# Read in data (list of matrices and list of genes, as described in scanorama usage)
assaylist <- readRDS("./assaylist.rds")
genelist <- readRDS("./genelist.rds")

# Run the scanorama command from within pool$map
integrated.corrected.data <- pool$map(scanorama$correct(assaylist, genelist, return_dense = T, return_dimred = T), range(0,23))
```

This still gives similar results in `top`.
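For reference, Python's `multiprocessing.Pool.map(func, iterable)` expects a function object and calls it once per item. In the R call above, `scanorama$correct(...)` is written with its arguments, so it appears to be evaluated once before `pool$map` receives it, meaning `map` gets its return value rather than a function; also, R's `range(0, 23)` returns `c(0, 23)` (the minimum and maximum), not a Python-style sequence. A minimal Python sketch of the calling pattern `Pool.map` expects, using a hypothetical `square` worker and, as an assumption, the `fork` start method (Linux/Unix):

```python
import multiprocessing


def square(x):
    """Hypothetical worker; stands in for a per-item function."""
    return x * x


def run_demo(items):
    # Pass the function object itself (square), not square(...):
    # Pool.map calls it once per item in the worker processes.
    ctx = multiprocessing.get_context("fork")  # assumption: Linux/Unix
    with ctx.Pool(processes=2) as pool:
        return pool.map(square, items)


if __name__ == "__main__":
    print(run_demo(range(4)))  # [0, 1, 4, 9]
```

Parallelizing this way only helps if the work can be split into independent items; it does not by itself make a single BLAS call use more cores.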

I know this isn't the cleanest or most straightforward question because of the interfacing between R and Python, but I wanted to put it out there to see if anyone has had a similar experience or has tips to point me in the right direction. I appreciate any feedback, thanks!