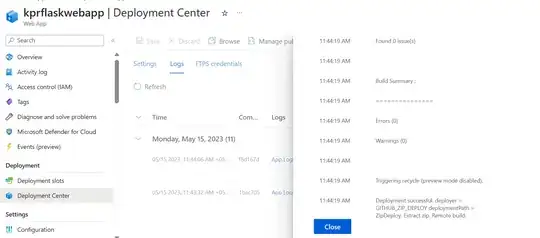

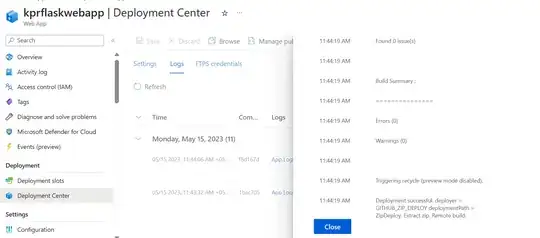

You can call your external API from an HTTP-triggered JavaScript function, save the returned data to Blob storage or locally, and then add it to your Cognitive Search index using this Azure Search JavaScript sample, the Azure REST API, or the Azure portal, like below:-

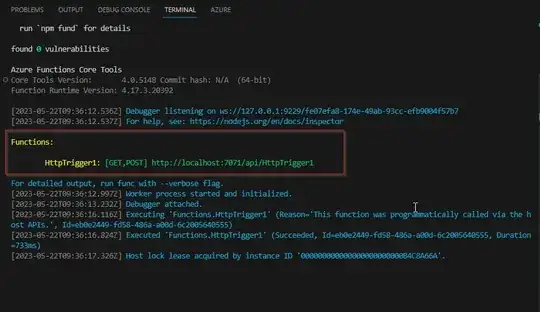

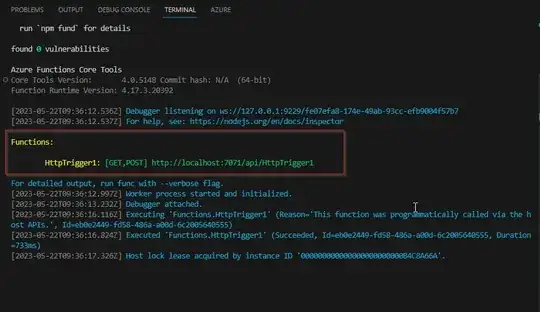

My HTTP trigger function, which gets the data from the external API and returns it as the response in the browser:-

My function's index.js code:-

module.exports = async function (context, req) {

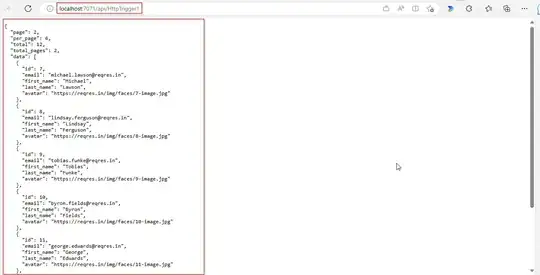

const url = 'https://reqres.in/api/users?page=2';

const response = await fetch(url);

const data = await response.json();

context.res = {

body: data

};

};

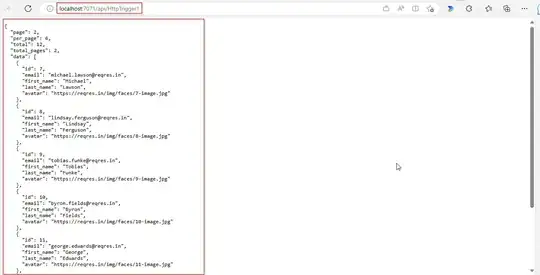
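
The function above forwards whatever the external API returns. As a minimal sketch of a slightly more defensive variant, the code below also checks the HTTP status and answers with a 502 when the upstream call fails. The `fetchJson` and `createHandler` names and the injectable `fetchImpl` parameter are made up for illustration, and the sketch assumes Node.js 18 or later, where `fetch` is global.

```javascript
// Hypothetical helper: fetch a URL and parse JSON, failing loudly on non-2xx responses.
// fetchImpl is injectable for testing; by default it is the global fetch (Node.js 18+).
async function fetchJson(url, fetchImpl = globalThis.fetch) {
    const response = await fetchImpl(url);
    if (!response.ok) {
        throw new Error(`External API returned HTTP ${response.status}`);
    }
    return response.json();
}

// Builds the HTTP trigger handler (same programming model as the index.js above).
function createHandler(fetchImpl = globalThis.fetch) {
    return async function (context, req) {
        const url = 'https://reqres.in/api/users?page=2';
        try {
            const data = await fetchJson(url, fetchImpl);
            context.res = {
                status: 200,
                headers: { 'Content-Type': 'application/json' },
                body: data
            };
        } catch (err) {
            context.log.error(err.message);
            // 502 Bad Gateway: the function ran, but the upstream API call failed.
            context.res = { status: 502, body: { error: err.message } };
        }
    };
}

module.exports = createHandler();
```

Passing `fetchImpl` in through a factory keeps the exported handler's signature as `(context, req)` while still letting you exercise the error path locally with a fake fetch.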

External Data:-

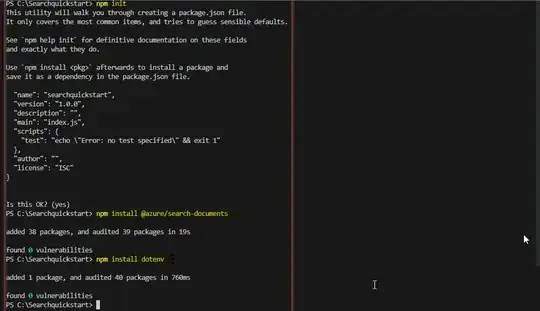

Next, I followed this Azure Search JavaScript sample to create an index in Cognitive Search with JavaScript, like below:-

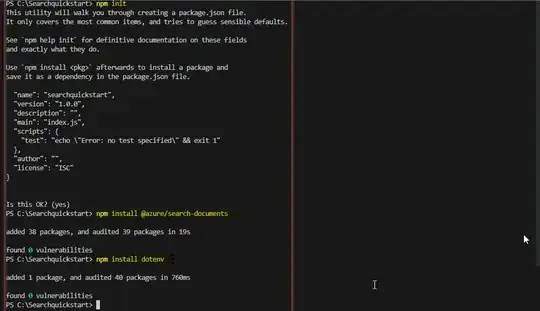

    npm init
    npm install @azure/search-documents
    npm install dotenv

Output:-

I created search.env and added my API key and Cognitive Search endpoint to it (the SEARCH_API_KEY and SEARCH_API_ENDPOINT variables that index.js reads), like below:-

index.js:-

    const { SearchIndexClient, SearchClient, AzureKeyCredential, odata } =
        require("@azure/search-documents");

    // Load the .env file if it exists.
    // dotenv reads ".env" by default; pass { path: "search.env" } to load a file with another name.
    require("dotenv").config();

    // Getting endpoint and apiKey from .env file
    const endpoint = process.env.SEARCH_API_ENDPOINT || "";
    const apiKey = process.env.SEARCH_API_KEY || "";

    async function main() {
        console.log(`Running Azure Cognitive Search JavaScript quickstart...`);

        if (!endpoint || !apiKey) {
            console.log("Make sure to set valid values for endpoint and apiKey with proper authorization.");
            return;
        }

        // remaining quickstart code will go here
    }

    main().catch((err) => {
        console.error("The sample encountered an error:", err);
    });

Now you can either write the external API's data from your function to Blob storage (for example through an Azure Functions Blob storage binding) and import it into the Search index from that blob, or download the data directly with the HTTP trigger as shown above and add it to the index, like below:-

You can also upload the external data file to Blob storage by using the code in this document.
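
As a rough sketch of the Blob upload step, the code below serializes the fetched data to JSON and uploads it with the `@azure/storage-blob` package. The `buildBlobName` and `uploadJsonToBlob` helpers, the timestamped naming scheme, and the connection string and container name you pass in are all assumptions for illustration, not part of the original setup.

```javascript
// Hypothetical helper: build a timestamped blob name such as "users/2024-01-31T10-15-00.json".
function buildBlobName(prefix, date = new Date()) {
    // Keep the date and time down to seconds, and replace ":" to keep the name URL-friendly.
    const stamp = date.toISOString().slice(0, 19).replace(/:/g, '-');
    return `${prefix}/${stamp}.json`;
}

// Upload an object as a JSON blob and return the blob URL.
// connectionString and containerName are assumptions supplied by the caller.
async function uploadJsonToBlob(connectionString, containerName, blobName, payload) {
    // Required lazily so buildBlobName can be used without the SDK installed
    // (install it with: npm install @azure/storage-blob).
    const { BlobServiceClient } = require('@azure/storage-blob');

    const containerClient = BlobServiceClient
        .fromConnectionString(connectionString)
        .getContainerClient(containerName);
    await containerClient.createIfNotExists();

    const content = JSON.stringify(payload);
    const blockBlobClient = containerClient.getBlockBlobClient(blobName);
    await blockBlobClient.upload(content, Buffer.byteLength(content), {
        blobHTTPHeaders: { blobContentType: 'application/json' }
    });
    return blockBlobClient.url;
}
```

Inside the HTTP trigger you could call something like `await uploadJsonToBlob(process.env.AzureWebJobsStorage, 'external-data', buildBlobName('users'), data)`, assuming the function app's storage connection string is the account you want to write to.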

index.js:-

    const { SearchIndexClient, SearchClient, AzureKeyCredential, odata } =
        require("@azure/search-documents");
    const indexDefinition = require('./searchquickindex.json');
    const indexName = indexDefinition["first_name"];
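
To make the indexing step more concrete, here is a minimal sketch that maps the external API's response to search documents, creates the index, and uploads the documents with `@azure/search-documents`. It assumes the response carries a `data` array of users with `id`, `email`, `first_name` and `last_name` fields. The `external-users` index name, the field list, and the helper names are hypothetical and stand in for whatever your own searchquickindex.json defines.

```javascript
// Convert the external API payload into search documents.
// Assumes payload.data is an array of users with id, email, first_name and last_name.
function toSearchDocuments(payload) {
    const users = Array.isArray(payload && payload.data) ? payload.data : [];
    return users.map((user) => ({
        id: String(user.id), // the key field of a search index must be a string
        email: user.email,
        first_name: user.first_name,
        last_name: user.last_name
    }));
}

// Hypothetical index definition matching the documents above.
const usersIndex = {
    name: 'external-users',
    fields: [
        { name: 'id', type: 'Edm.String', key: true, filterable: true },
        { name: 'email', type: 'Edm.String', searchable: true },
        { name: 'first_name', type: 'Edm.String', searchable: true, sortable: true },
        { name: 'last_name', type: 'Edm.String', searchable: true, sortable: true }
    ]
};

// Create or update the index, upload the documents, and return how many succeeded.
async function indexExternalData(endpoint, apiKey, payload) {
    // Required lazily so the pure helpers above work without the SDK installed.
    const { SearchIndexClient, SearchClient, AzureKeyCredential } =
        require('@azure/search-documents');
    const credential = new AzureKeyCredential(apiKey);

    // Create the index if it does not exist yet, or update it in place.
    const indexClient = new SearchIndexClient(endpoint, credential);
    await indexClient.createOrUpdateIndex(usersIndex);

    // Upload the documents; a document whose key already exists is merged.
    const searchClient = new SearchClient(endpoint, usersIndex.name, credential);
    const result = await searchClient.mergeOrUploadDocuments(toSearchDocuments(payload));
    return result.results.filter((r) => r.succeeded).length;
}
```

Using `mergeOrUploadDocuments` rather than a plain upload means the function can be re-run against the same API page without failing on documents that are already in the index.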

Complete source code

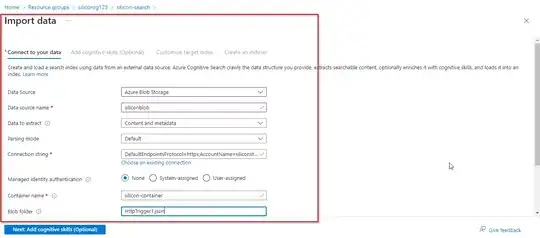

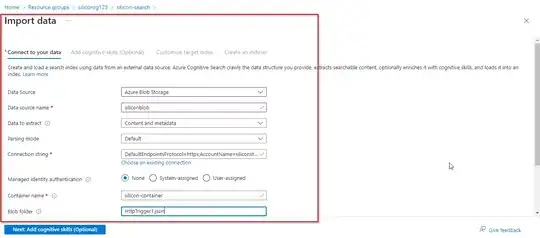

Importing the data via Blob in Portal:-

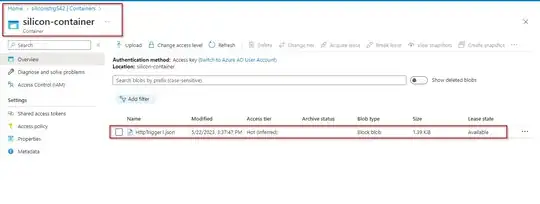

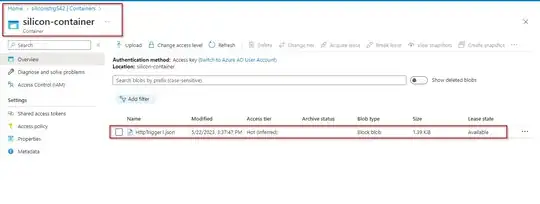

I uploaded my external data file to Blob storage like below:-

Then I imported that blob into my search service with Import Data, like below:-