I am not using LangChain, just the requests library. For a production project, though, consider using Flask-SSE.

    import json

    import requests

    def open_ai_stream(prompt):
        url = config["open_ai_url"]  # I am reading this from a config value
        # Value is this https://api.openai.com/v1/chat/completions
        session = requests.Session()
        payload = json.dumps({
            "model": "gpt-3.5-turbo",
            "messages": [
                {"role": "user", "content": prompt}
            ],
            "temperature": 0,
            "stream": True
        })
        headers = {
            'Content-Type': 'application/json',
            'Authorization': 'Bearer ' + config['open_ai_key']
        }
        # stream=True makes requests hand over the body as it arrives
        # instead of downloading the whole response before iter_lines() runs
        with session.post(url, headers=headers, data=payload, stream=True) as resp:
            for line in resp.iter_lines():
                if line:
                    # OpenAI already sends SSE lines ("data: {...}"), so forward
                    # each one as-is and end the event with a blank line
                    yield line.decode('utf-8') + '\n\n'
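Each line OpenAI streams looks like `data: {...json chunk...}`, and the stream ends with `data: [DONE]`. The generator above forwards those JSON chunks, so whoever reads the stream still has to pull the text out of them. A minimal sketch of that step, assuming the Chat Completions chunk layout (`choices[0].delta.content`); `extract_delta` is a hypothetical helper name, not part of requests or the OpenAI SDK:

```python
import json
from typing import Optional


def extract_delta(line: str) -> Optional[str]:
    """Return the text fragment in one streamed chunk, or None if there is none.

    Accepts either a raw upstream line ('data: {...}') or just the JSON payload.
    Assumes the Chat Completions streaming format, ending with 'data: [DONE]'.
    """
    payload = line.strip()
    if payload.startswith(":"):
        return None  # SSE comment / keep-alive line
    if payload.startswith("data:"):
        payload = payload[len("data:"):].strip()
    if not payload or payload == "[DONE]":
        return None  # blank line or end-of-stream sentinel
    chunk = json.loads(payload)
    choices = chunk.get("choices") or []
    if not choices:
        return None
    # The first chunk typically carries only the role; later ones carry "content"
    return choices[0].get("delta", {}).get("content")


print(extract_delta('data: {"choices": [{"delta": {"content": "Hel"}}]}'))  # Hel
```

On the client, you would call it on each received event and concatenate the non-`None` results to rebuild the reply.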

And here's the Flask route:

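    from flask import Flask, Response, request, stream_with_context

    app = Flask(__name__)

    @app.route("/llm-resp", methods=["POST"])
    def stream_response():
        prompt = request.get_json(force=True)["prompt"]
        # Return the generator itself so Flask sends each chunk as it is yielded
        return Response(stream_with_context(open_ai_stream(prompt)),
                        mimetype="text/event-stream")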

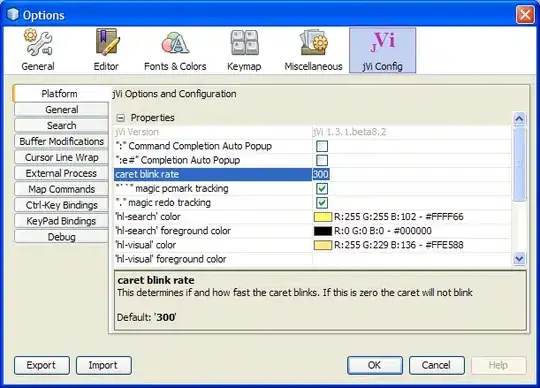

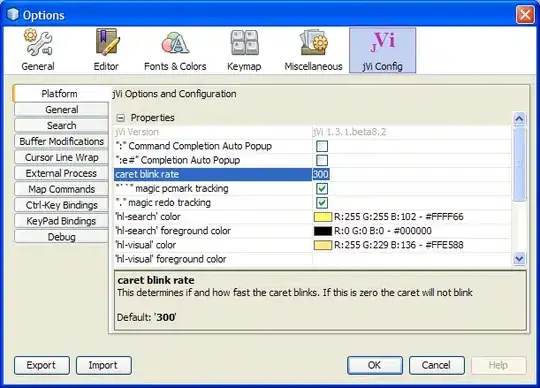

The screenshots above show the test results in Postman (a normal, non-streamed response would look quite different there).
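Outside Postman, note that the browser `EventSource` API only issues GET requests, so a client for this POST route has to read and parse the stream itself. With `requests` and `stream=True`, `iter_lines()` yields every line, including the empty ones that separate events (the server-side generator skips those with `if line:`, but a client must not). A minimal sketch of grouping those lines into events per the SSE format; `iter_sse_data` is a hypothetical helper name:

```python
from typing import Iterable, Iterator, List, Union


def iter_sse_data(lines: Iterable[Union[bytes, str]]) -> Iterator[str]:
    """Group raw SSE lines into events and yield each event's data payload.

    An event is a run of lines ended by a blank line; several 'data:' lines
    in one event are joined with newlines. Other fields are ignored here.
    """
    data_lines: List[str] = []
    for line in lines:
        if isinstance(line, bytes):
            line = line.decode("utf-8")
        if line == "":
            # Blank line: dispatch the event collected so far, if any
            if data_lines:
                yield "\n".join(data_lines)
                data_lines = []
            continue
        if line.startswith("data:"):
            value = line[len("data:"):]
            # The SSE format strips exactly one space after the colon
            if value.startswith(" "):
                value = value[1:]
            data_lines.append(value)
        # 'event:', 'id:', 'retry:' fields and ':' comments are skipped
    # An event not terminated by a blank line is discarded, as the SSE spec requires
```

Usage, assuming the Flask dev server on its default port: `resp = requests.post("http://localhost:5000/llm-resp", json={"prompt": "Hi"}, stream=True)`, then `for data in iter_sse_data(resp.iter_lines()): print(data)`.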

EDIT: Here's a slightly better way, though it adds a dependency on the openai library:

    import os

    import openai  # requires openai<1.0; openai.ChatCompletion was removed in 1.0

    def chat_gpt_helper(prompt):
        """
        This function returns the response from OpenAI's gpt-3.5-turbo model using the Chat Completions API
        """
        try:
            resp = ''
            openai.api_key = os.getenv('OPEN_API_KEY')
            for chunk in openai.ChatCompletion.create(
                model="gpt-3.5-turbo",
                messages=[{
                    "role": "user",
                    "content": prompt
                }],
                stream=True,
            ):
                content = chunk["choices"][0].get("delta", {}).get("content")
                if content is not None:
                    print(content, end='')
                    resp += content
                    # Each event carries the full text so far, not just the new piece
                    yield 'data: %s\n\n' % resp
        except Exception as e:
            print(e)
            # A generator's return value never reaches the client, so yield the error
            yield 'data: %s\n\n' % str(e)

Everything else stays the same.
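One caveat with yielding plain text as `'data: %s\n\n' % resp`: model output often contains newlines, and a raw newline inside a `data:` line ends that field, so an SSE client would misread the rest. The SSE format handles multi-line payloads by giving each line its own `data:` prefix, which the client joins back with `\n`. A minimal sketch, where `format_sse` is a hypothetical helper, not part of Flask or openai:

```python
def format_sse(text: str) -> str:
    """Encode text as a single SSE event, one 'data:' line per line of text."""
    # SSE treats \r\n, \r and \n all as line endings, so normalise to \n first
    normalised = text.replace("\r\n", "\n").replace("\r", "\n")
    lines = normalised.split("\n")
    # Each line gets its own prefix; the final extra "\n" (blank line) ends the event
    return "".join(f"data: {line}\n" for line in lines) + "\n"


print(repr(format_sse("a\nb")))  # 'data: a\ndata: b\n\n'
```

In `chat_gpt_helper`, `yield format_sse(resp)` would replace `yield 'data: %s\n\n' % resp`.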

Check the full code here:

EDIT 2:

If you can't see the response arrive in chunks in Postman, make sure Postman is up to date; at the time of writing, this seems to be a new feature. Relevant press release