I am trying to understand how to use Keras Tuner to find optimal hyperparameter values for a simple MLP model. The code I am using is as follows:

```python
from tensorflow import keras
from tensorflow.keras import layers
from keras_tuner import RandomSearch

# X_train, Y_train, X_test, Y_test are assumed to be loaded already,
# with Y_* one-hot encoded over 5 classes (categorical_crossentropy + Dense(5)).


def build_model2(hp):
    model = keras.Sequential()
    # Tune the number of hidden layers (2 to 6) and, per layer, its width and activation
    for i in range(hp.Int('layers', 2, 6)):
        model.add(layers.Dense(units=hp.Int('units_' + str(i), 32, 512, step=128),
                               activation=hp.Choice('act_' + str(i), ['relu', 'sigmoid', 'tanh'])))
    # Flatten is a no-op here if the inputs are 2-D (samples x features)
    model.add(layers.Flatten())
    # 5-class output layer
    model.add(layers.Dense(5, activation='softmax'))
    # Learning rate sampled on a log scale between 1e-4 and 1e-2
    learning_rate = hp.Float("lr", min_value=1e-4, max_value=1e-2, sampling="log")
    model.compile(keras.optimizers.Adam(learning_rate=learning_rate),
                  loss='categorical_crossentropy', metrics=['accuracy'])
    return model


tuner2 = RandomSearch(build_model2, objective='val_accuracy', max_trials=5,
                      executions_per_trial=3, overwrite=True)
tuner2.search_space_summary()

# Validate on held-out data; validating on (X_train, Y_train) would just report training accuracy
tuner2.search(X_train, Y_train, epochs=25, validation_split=0.2, verbose=1)
tuner2.results_summary()

# Get the optimal hyperparameters
best_hps = tuner2.get_best_hyperparameters(num_trials=1)[0]
print("The optimal parameters are:")
print(best_hps.values)

# Build the model with the optimal hyperparameters and train it on the data for 50 epochs
model = tuner2.hypermodel.build(best_hps)
history = model.fit(X_train, Y_train, epochs=50, validation_split=0.2)
val_acc_per_epoch = history.history['val_accuracy']
best_epoch = val_acc_per_epoch.index(max(val_acc_per_epoch)) + 1
print('Best epoch: %d' % (best_epoch,))

# Re-instantiate the model and retrain it for the best number of epochs
hypermodel = tuner2.hypermodel.build(best_hps)
hypermodel.fit(X_train, Y_train, epochs=best_epoch)

eval_result = hypermodel.evaluate(X_test, Y_test)
print("[test loss, test accuracy]:", eval_result)
```

The hyperparameters I am tuning are: the number of hidden layers (2 to 6), the number of neurons in each hidden layer (min = 32, max = 512, step size = 128), the activation function ('relu', 'sigmoid', 'tanh') and the learning rate (min_value=1e-4, max_value=1e-2, sampling="log").
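To get a feel for how large this search space is compared with `max_trials = 5`, here is a small pure-Python sketch. It assumes that `hp.Int(name, min_value, max_value, step)` yields `min_value, min_value + step, ...` up to and including `max_value` (so with these settings 512 itself is never sampled), and it counts only the discrete choices, ignoring the continuous learning rate. The helper names are hypothetical, not part of KerasTuner.

```python
def int_choices(min_value, max_value, step):
    """Values an integer hyperparameter can take: min_value, min_value + step, ... <= max_value."""
    return list(range(min_value, max_value + 1, step))


def count_architectures(min_layers, max_layers, n_units, n_activations):
    """Count distinct (layer count, per-layer units, per-layer activation) combinations."""
    per_layer = n_units * n_activations
    # Each of the n hidden layers independently picks a width and an activation
    return sum(per_layer ** n for n in range(min_layers, max_layers + 1))


units = int_choices(32, 512, 128)
activations = ['relu', 'sigmoid', 'tanh']
total = count_architectures(2, 6, len(units), len(activations))
print("units candidates:", units)         # → [32, 160, 288, 416]
print("discrete architectures:", total)   # → 3257424
```

With 5 trials (each averaged over 3 executions), random search visits only a tiny fraction of these combinations, which is one reason the "best" combination can change from one search to the next.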

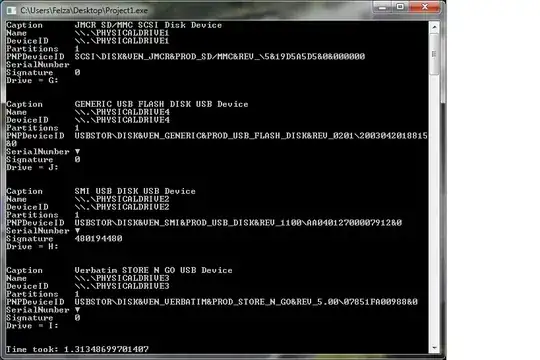

For different combinations of the above parameters, I obtained different values as shown below:

I have the following questions:

- How can I conclude that a particular combination gives me the optimal result?

- If the optimal number of hidden layers is, say, 2, why do I still get values for units_2, units_3 and units_4?

- If I repeat the search, the hyperparameter values change. How should I then conclude that a particular combination is optimal?
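A minimal pure-Python sketch related to the last two questions follows. In KerasTuner, once a hyperparameter such as `units_4` has been registered by a trial that built five or more layers, it typically stays in the search space, so later trials (and `best_hps.values`, which is a plain dict) carry a value for it even when `layers` is smaller and the value is simply unused. `active_hyperparameters` is a hypothetical helper (not a KerasTuner API) that drops those entries, assuming the `units_<i>` / `act_<i>` naming used in `build_model2`. `rank_by_mean`, also hypothetical, compares configurations by the mean and standard deviation of their validation accuracy over repeated searches or seeds, instead of trusting a single run.

```python
import re
from statistics import mean, stdev


def active_hyperparameters(values):
    """Keep only the entries build_model2 actually used.

    Assumes the naming scheme from build_model2: 'layers', 'units_<i>', 'act_<i>', 'lr'.
    Entries for layer indices >= values['layers'] were sampled but never used.
    """
    n_layers = values['layers']
    active = {}
    for name, value in values.items():
        match = re.fullmatch(r'(units|act)_(\d+)', name)
        if match and int(match.group(2)) >= n_layers:
            continue  # layer i was never built in this trial
        active[name] = value
    return active


def rank_by_mean(scores_by_config):
    """Rank configurations by mean score across repeated runs, highest first.

    scores_by_config maps a configuration label to a list of validation accuracies
    from independent runs (e.g. different seeds). Returns a list of
    (label, mean, standard deviation) tuples; a single run gets a spread of 0.0.
    """
    summary = []
    for label, scores in scores_by_config.items():
        spread = stdev(scores) if len(scores) > 1 else 0.0
        summary.append((label, mean(scores), spread))
    return sorted(summary, key=lambda row: row[1], reverse=True)
```

For example, `active_hyperparameters(best_hps.values)` would show only the layers the best model really has. If the top configurations' means differ by less than their standard deviations, the search cannot reliably separate them. To make individual runs reproducible, `RandomSearch` also accepts a `seed` argument, and `keras.utils.set_random_seed` (TensorFlow 2.7+) seeds Python, NumPy and TensorFlow together.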