I'm learning scikit-learn in Python, but I'm having trouble clustering data whose points lie along straight lines. I am using the datasauRus dozen dataset.

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn.mixture import GaussianMixture
from sklearn.neighbors import KNeighborsClassifier
from sklearn.model_selection import train_test_split

# Raw string so the backslash in the Windows path is not read as an escape sequence
datasaurus = pd.read_csv(r"Test\datasaurus.csv")

dataset = "slant_down"
clust = 5
color = ["r", "b", "g", "y", "m", "k"]

# Keep only the rows that belong to the selected dataset
df = datasaurus[datasaurus["dataset"] == dataset]

X = df["x"].to_numpy()
y = df["y"].to_numpy()
```

Here I just imported the data.

```python
# Scale: min-max normalise both coordinates to [0, 1]
scale_X = X - X.min()
scale_y = y - y.min()
scale_X /= scale_X.max()
scale_y /= scale_y.max()

# Zip data: build (n_samples, 2) arrays of points
X_train, X_test, y_train, y_test = train_test_split(scale_X, scale_y, random_state=42)
zip_train = np.column_stack([X_train, y_train])
zip_data = np.column_stack([scale_X, scale_y])
```

Scaled and zipped them.
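For comparison, the manual min-max scaling and zipping above can also be written with scikit-learn's `MinMaxScaler` and NumPy's `column_stack`. This is a minimal sketch on hypothetical random stand-in values (not the real datasaurus columns); it only checks that both routes give the same scaled points.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical stand-in values; the real x/y columns come from datasaurus.csv
rng = np.random.default_rng(0)
X = rng.uniform(20, 90, size=50)
y = rng.uniform(0, 100, size=50)

# Manual min-max scaling, as in the question, stacked into an (n, 2) array
manual = np.column_stack([(X - X.min()) / (X.max() - X.min()),
                          (y - y.min()) / (y.max() - y.min())])

# The same transformation with scikit-learn, applied column by column
scaled = MinMaxScaler().fit_transform(np.column_stack([X, y]))

print(np.allclose(manual, scaled))  # True
```

`MinMaxScaler` stores the per-column minimum and range, so the same scaling can later be reapplied to new points with `transform`.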

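```python
# Gaussian mixture: fit on the training points, then predict a cluster for every point
gauss = GaussianMixture(n_components=clust, random_state=0)
gauss.fit(zip_train)
pred = gauss.predict(zip_data)

# Plot the original (unscaled) points, coloured by their predicted cluster
plt.scatter(X, y, c=[color[p] for p in pred])
plt.show()
```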

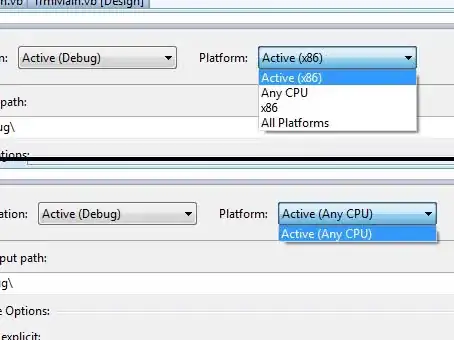

And then I used GaussianMixture to clust them. With the dataset slant_down I don't have many problems:

There is an error in the bottom right, but it's easy to fix with KNeighborsClassifier, so it's not a real problem.
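The post doesn't show how KNeighborsClassifier is used for that fix. One possible approach, sketched here purely as an assumption, is to train the classifier only on points the mixture is confident about and then relabel every point by its nearest confident neighbours. The three-line stand-in data, the 0.9 probability threshold and `n_neighbors=5` are all hypothetical choices.

```python
import numpy as np
from sklearn.mixture import GaussianMixture
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical stand-in for zip_data: three noisy parallel "slant_down" lines
rng = np.random.default_rng(0)
t = rng.uniform(0, 1, size=300)
offset = rng.integers(0, 3, size=300)
zip_data = np.column_stack([t, 1 - t + 0.4 * offset + rng.normal(0, 0.02, size=300)])

gauss = GaussianMixture(n_components=3, random_state=0).fit(zip_data)
proba = gauss.predict_proba(zip_data)
pred = proba.argmax(axis=1)  # same labels as gauss.predict(zip_data)

# Keep only points the mixture assigns with high probability (arbitrary threshold)
confident = proba.max(axis=1) > 0.9

# Relabel every point by majority vote among its nearest confident neighbours
knn = KNeighborsClassifier(n_neighbors=5).fit(zip_data[confident], pred[confident])
smoothed = knn.predict(zip_data)
```

Because the vote only uses nearby points, isolated misassignments on the edge of a line tend to take the label of the line around them.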

The code works pretty well with slant_down and also with v_lines (clust=4):

But it completely fails with h_lines and slant_up:

I really don't know why this happens; the datasets are essentially the same, just rotated. I think it's an overfitting problem, but I can't figure out where I am causing it.
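One way to test the overfitting hypothesis is to compare the model's average log-likelihood on the training and test points with `GaussianMixture.score`. It is also worth checking whether EM simply got stuck in a poor local optimum: `n_init` runs EM from several initialisations and keeps the best fit. The sketch below uses hypothetical stand-in data (five evenly spaced noisy horizontal lines), not the real h_lines set, and prints scores without claiming particular values.

```python
import numpy as np
from sklearn.mixture import GaussianMixture
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for scaled h_lines data: five noisy horizontal lines
rng = np.random.default_rng(0)
x = rng.uniform(0, 1, size=500)
level = rng.integers(0, 5, size=500)
data = np.column_stack([x, level / 4 + rng.normal(0, 0.01, size=500)])

train, test = train_test_split(data, random_state=42)

results = {}
for n_init in (1, 10):
    # n_init > 1 runs EM from several initialisations and keeps the best one
    gauss = GaussianMixture(n_components=5, n_init=n_init, random_state=0).fit(train)
    # score() is the mean per-sample log-likelihood; a large train/test gap
    # would suggest overfitting, similar values suggest the cause lies elsewhere
    results[n_init] = (gauss.score(train), gauss.score(test))
    print(f"n_init={n_init}: train={results[n_init][0]:.2f} test={results[n_init][1]:.2f}")
```

Plotting `gauss.predict(data)` for each setting, as in the question's plotting code, makes it easy to see whether the extra initialisations change the clusters.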