As a follow-up to my previous question, I'm interested in improving the performance of the existing recursive sampling function.

By recursive sampling I mean randomly choosing up to n unique unexposed IDs for a given exposed ID, and the randomly choosing up to n unique unexposed IDs from the remaining unexposed IDs for another exposed ID. If there are no remaining unexposed IDs for a given exposed ID, then the exposed ID is left out.

The original function is as follows:

recursive_sample <- function(data, n) {

groups <- unique(data[["exposed"]])

out <- data.frame(exposed = character(), unexposed = character())

for (group in groups) {

chosen <- data %>%

filter(exposed == group,

!unexposed %in% out$unexposed) %>%

group_by(unexposed) %>%

slice(1) %>%

ungroup() %>%

sample_n(size = min(n, nrow(.)))

out <- rbind(out, chosen)

}

out

}

I was able to create a more efficient one as follows:

recursive_sample2 <- function(data, n) {

groups <- unique(data[["exposed"]])

out <- tibble(exposed = integer(), unexposed = integer())

for (group in groups) {

chosen <- data %>%

filter(exposed == group,

!unexposed %in% out$unexposed) %>%

filter(!duplicated(unexposed)) %>%

sample_n(size = min(n, nrow(.)))

out <- bind_rows(out, chosen)

}

out

}

Sample data and bechmarking:

set.seed(123)

df <- tibble(exposed = rep(1:100, each = 100),

unexposed = sample(1:7000, 10000, replace = TRUE))

microbenchmark(f1 = recursive_sample(df, 5),

f2 = recursive_sample2(df, 5),

times = 10)

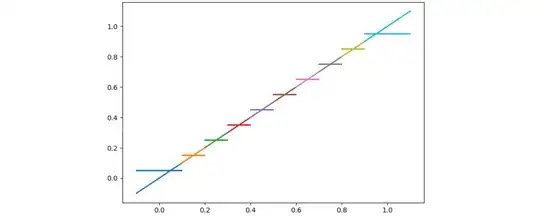

Unit: milliseconds

expr min lq mean median uq max neval cld

f1 1307.7198 1316.5276 1379.0533 1371.3952 1416.6360 1540.955 10 b

f2 839.0086 865.2547 914.8327 901.2288 970.9518 1036.170 10 a

However, for my actual dataset, I would need an even more efficient (i.e., quicker) function. Any ideas for a more efficient version, whether in data.table, involving parallelisation or other approaches are welcome.