I wrote a small Python script using diplib to measure the center and the rotation angle of our product. The output needs to be as precise as possible, because I rely on it to drive an industrial PNP (pick-and-place) machine that places a panel precisely on the product. Here is my code:

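```python
import diplib as dip
import numpy as np
import matplotlib.pyplot as pp

# Load the image and keep channel 1 (green for an RGB image)
img = dip.ImageRead('back6.png')
img = img(1)

# Gradient magnitude as the landscape for the watershed
gm = dip.Norm(dip.GradientMagnitude(img))

# Watershed into labeled regions, then remove regions smaller than 8000 pixels
water = dip.Watershed(gm, connectivity=1, maxDepth=6, flags={'correct', 'labels'})
water = dip.SmallObjectsRemove(water, 8000)

# Paint each region with its total intensity ('Mass') and zero out low-mass regions
blocks = dip.MeasurementTool.Measure(water, img, features=['Mass'])
blocks = dip.ObjectToMeasurement(water, blocks['Mass'])
blocks[blocks < 31000000] = 0

result = dip.MeasurementTool.Measure(water, img, features=['Center'])
print(result)

# Binary mask of the remaining regions. Note: 3100000 here vs 31000000 above;
# everything below 31000000 is already 0, so this is equivalent to blocks > 0.
board = (blocks > 3100000)
board = dip.FillHoles(board)

# Morphological opening with a size-35 structuring element (rounds the corners)
rect = dip.Opening(board, 35)

dip.viewer.Show(board)
dip.viewer.Show(rect)

result = dip.MeasurementTool.Measure(dip.Label(board), img, features=['Center', 'Feret'])
print(result)
result = dip.MeasurementTool.Measure(dip.Label(rect), img, features=['Center', 'Feret'])
print(result)

# Overlay the one-pixel-wide contour (object minus its erosion) on the image
target = dip.Overlay(img, rect - dip.BinaryErosion(rect, 1, 1))
dip.viewer.Show(target)
dip.viewer.Show(dip.Overlay(img, board - dip.BinaryErosion(board, 1, 1)))

# Draw a small red dot at the measured center of object 1 of `rect`
circle = dip.Image(target.Sizes(), 1, 'SFLOAT')
circle.Fill(0)
dip.DrawBandlimitedBall(circle, diameter=10, origin=result[1]['Center'])
circle /= dip.Maximum(circle)
target *= 1 - circle
target += circle * dip.Create0D([255, 10, 0])
dip.viewer.Show(target)
```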

The traced contour looks good, and the center measurement is stable:

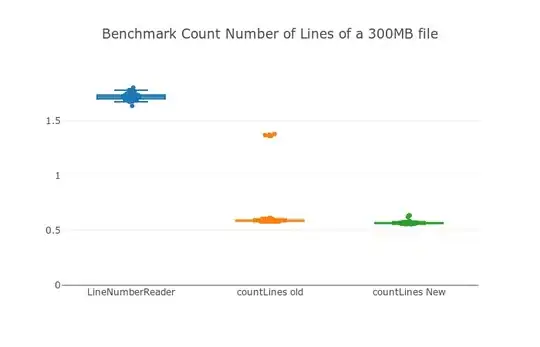
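The stability of the center is expected: a centroid is the mean of the coordinates of all object pixels, so each boundary pixel contributes only 1/N of the result. A minimal NumPy sketch of that idea on a synthetic mask (an illustration of a centroid in general, not DIPlib's implementation of 'Center'):

```python
import numpy as np

def centroid(mask: np.ndarray) -> tuple[float, float]:
    """Return the (x, y) centroid of the nonzero pixels of a 2D mask."""
    ys, xs = np.nonzero(mask)
    # Plain mean over all N object pixels: each pixel has weight 1/N.
    return float(xs.mean()), float(ys.mean())

# Synthetic 40 x 60 pixel rectangle.
mask = np.zeros((100, 100), dtype=bool)
mask[20:60, 30:90] = True
print(centroid(mask))  # (59.5, 39.5)

# Add one spurious pixel just outside the right edge (simulated noise).
noisy = mask.copy()
noisy[40, 90] = True
print(centroid(noisy))
```

One stray pixel on a 2400-pixel object moves the centroid by only about 0.01 px, which is why small boundary changes barely affect it.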

Then I use 'Feret.MinAng' to measure the rotation angle. However, according to an issue on GitHub, the Feret width is determined by the two pixels on either side of the object that are farthest apart, rather than by the average distance between opposite sides, and you said there that the Feret measurement is biased. This makes me extremely worried about the repeatability of my code. So I applied a morphological opening to the target, a rectangle with rounded corners, and 'Feret.MinAng' shows an error of about 0.05 degrees. See the following screenshot:
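For comparison, a minimum Feret (caliper) width can be computed by brute force: for each trial direction, project the contour points onto it, take the maximum minus the minimum, and keep the direction with the smallest extent. Only the extreme points enter the result, which is why the angle can jump when a few boundary pixels change. This is a simplified NumPy sketch on a hypothetical 60 x 20 rectangle, not DIPlib's implementation, and its angle convention (direction of the caliper, in [0, 180)) may differ from 'Feret.MinAng':

```python
import numpy as np

def min_feret(points: np.ndarray, step_deg: float = 0.05) -> tuple[float, float]:
    """Brute-force minimum caliper (Feret) width of (N, 2) points (x, y).

    Returns (width, angle_deg): the smallest extent found and the direction,
    in degrees in [0, 180), along which it is measured.
    """
    angles = np.deg2rad(np.arange(0.0, 180.0, step_deg))
    dirs = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # (A, 2) unit vectors
    proj = points @ dirs.T                                     # (N, A) projections
    widths = proj.max(axis=0) - proj.min(axis=0)               # only extreme points count
    i = int(np.argmin(widths))
    return float(widths[i]), float(np.rad2deg(angles[i]))

def rotate(points: np.ndarray, deg: float) -> np.ndarray:
    """Rotate (N, 2) points counterclockwise about the origin."""
    t = np.deg2rad(deg)
    r = np.array([[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]])
    return points @ r.T

# Corners of a 60 x 20 rectangle centred on the origin, rotated by 10 degrees.
corners = np.array([[-30.0, -10.0], [30.0, -10.0], [30.0, 10.0], [-30.0, 10.0]])
w0, a0 = min_feret(rotate(corners, 10.0))

# Move a single corner outward by 0.5 (e.g. one noisy edge pixel).
bumped = corners.copy()
bumped[2, 1] = 10.5
w1, a1 = min_feret(rotate(bumped, 10.0))

print(f"clean:  width={w0:.3f}, angle={a0:.2f} deg")
print(f"bumped: width={w1:.3f}, angle={a1:.2f} deg")
```

In this toy example the clean rectangle gives 100°, while moving one corner by 0.5 shifts the angle to about 100.45° and changes the width only from 20.0 to about 20.5: the minimum width is set by whichever edge lies flush against the caliper, so a few extreme points decide the angle.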

How can I make the rotation angle measurement stable and consistent under slight variations in the light source?
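One way to make the angle depend on all edge pixels instead of a few extreme ones is to fit a straight line to each long side (total least squares) and use its orientation. This is a different technique from Feret, not a DIPlib feature. A minimal NumPy sketch, assuming the (x, y) coordinates of the straight part of each long side (excluding the rounded corners) have already been extracted, for instance from the contour image; the helper name `line_angle_deg` and the synthetic data are hypothetical:

```python
import numpy as np

def line_angle_deg(points: np.ndarray) -> float:
    """Orientation of the total-least-squares line through (N, 2) points.

    Uses the principal direction of the centred point cloud, so every point
    contributes, not just the extreme ones. Result is in (-90, 90] degrees.
    """
    centred = points - points.mean(axis=0)
    # First right-singular vector = direction of largest spread = line direction.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    dx, dy = vt[0]
    angle = float(np.degrees(np.arctan2(dy, dx)))
    # A line has no sign: fold the direction into (-90, 90].
    if angle <= -90.0:
        angle += 180.0
    elif angle > 90.0:
        angle -= 180.0
    return angle

# Hypothetical synthetic data: two opposite straight sides, 400 px long and
# 200 px apart, with 0.5 px of Gaussian jitter perpendicular to each side.
rng = np.random.default_rng(0)
true_deg = 10.0
t = np.deg2rad(true_deg)
direction = np.array([np.cos(t), np.sin(t)])
normal = np.array([-np.sin(t), np.cos(t)])
s = np.linspace(-200.0, 200.0, 400)[:, None]
side_a = s * direction + 100.0 * normal + rng.normal(0.0, 0.5, (400, 1)) * normal
side_b = s * direction - 100.0 * normal + rng.normal(0.0, 0.5, (400, 1)) * normal

# Opposite sides are parallel, so averaging their angles reduces noise further.
estimate = 0.5 * (line_angle_deg(side_a) + line_angle_deg(side_b))
print(f"estimated angle: {estimate:.4f} deg (true {true_deg} deg)")
```

With 400 points per side and 0.5 px of simulated jitter, the averaged estimate should typically land within a few hundredths of a degree of the true 10°, because the jitter averages out along each side.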