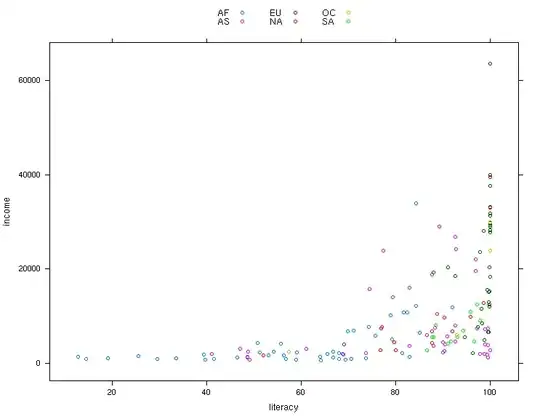

I am writing an OpenCV program to recognize Braille characters and translate them into Spanish. So far, I can recognize the Braille points and retrieve their centroids successfully.

To divide the points into characters, I am drawing lines along the x and y axes through each Braille point, creating a sort of visual matrix:

    import cv2 as cv
    import matplotlib.pyplot as plt
    import numpy as np

    # Label connected components in the edge map; label 0 is the background.
    num_labels, labels, centroid_stats, centroids = cv.connectedComponentsWithStats(canny_edges, connectivity=8)
    # Sort centroids top to bottom, then left to right.
    sorted_centroids = sorted(centroids[1:], key=lambda c: (c[1], c[0]))

    copied_image = img_show.copy()
    # White canvas with the same shape as the image, used for the grid lines.
    lines_canvas = np.full_like(copied_image, 255)
    # Image arrays are shaped (rows, columns, channels), i.e. (height, width, channels).
    height, width = copied_image.shape[:2]

    for centroid in sorted_centroids:
        x, y = int(round(centroid[0])), int(round(centroid[1]))
        cv.circle(copied_image, (x, y), radius=1, color=(255, 0, 0), thickness=-1)
        # One vertical and one horizontal line through each centroid, spanning the canvas.
        cv.line(lines_canvas, (x, 0), (x, height - 1), color=(0, 0, 0), thickness=2)
        cv.line(lines_canvas, (0, y), (width - 1, y), color=(0, 0, 0), thickness=2)

    plt.imshow(copied_image)
    plt.title('Braille con Centroides Marcados')
    plt.axis('off')
    plt.show()

    plt.imshow(lines_canvas)
    plt.title('Braille con Líneas de Cuadrícula')
    plt.axis('off')
    plt.show()

Some points are not perfectly aligned, so I used thresholding to merge the lines that are very close together:

    # Smooth the grid image so that nearly coincident lines blend, then binarize it.
    lines_grayscale = cv.cvtColor(lines_canvas, cv.COLOR_BGR2GRAY)
    lines_bilateral_filter = cv.bilateralFilter(lines_grayscale, 13, 75, 75)
    lines_adaptive_thres = cv.adaptiveThreshold(lines_bilateral_filter, 255, cv.ADAPTIVE_THRESH_GAUSSIAN_C, cv.THRESH_BINARY, 11, 2)
    _, lines_binary_threshold = cv.threshold(lines_adaptive_thres, 0, 255, cv.THRESH_BINARY + cv.THRESH_OTSU)
    # Invert so that the lines are white on a black background.
    lines_bitwise_threshold = cv.bitwise_not(lines_binary_threshold)

    plt.imshow(lines_bitwise_threshold, cmap='gray')
    plt.title('Braille con Líneas de Cuadrícula Umbralizadas')
    plt.axis('off')
    plt.show()

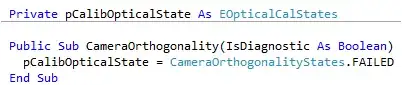
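Since the lines are drawn from centroid coordinates that are already known, one alternative to merging them in image space is to merge the coordinates themselves. The following is only a minimal sketch of that idea, not the approach used above: `merge_close` and the tolerance `tol` are hypothetical names, and the sample coordinates are made up for illustration.

```python
import numpy as np

def merge_close(values, tol=3.0):
    """Group sorted 1-D coordinates whose neighbours differ by at most tol
    and return the mean of each group."""
    values = np.sort(np.asarray(values, dtype=float))
    if values.size == 0:
        return []
    # A new group starts wherever the gap to the previous value exceeds tol.
    breaks = np.flatnonzero(np.diff(values) > tol) + 1
    return [float(group.mean()) for group in np.split(values, breaks)]

# Made-up centroid x coordinates, for illustration only.
xs = [17.2, 16.8, 29.1, 100.4, 99.9, 112.0]
merged_xs = merge_close(xs, tol=3.0)
print(merged_xs)
```

The same function could be applied to the y coordinates. A suitable `tol` would depend on the dot spacing in the image, so the value here is an assumption.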

I am trying to use Probabilistic Hough Line transform to recognize the lines and retrieve the intersections, but I am having a bit of a problem:

    result = img_show.copy()
    lines = cv.HoughLinesP(lines_bitwise_threshold, 1, np.pi / 180, threshold=50, minLineLength=50, maxLineGap=10)

    if lines is not None:
        for i in range(len(lines)):
            # Each entry holds one segment as [x1, y1, x2, y2].
            l = lines[i][0]
            cv.line(result, (l[0], l[1]), (l[2], l[3]), (0, 0, 255), 1, cv.LINE_AA)

    plt.imshow(result)
    plt.title('Braille con Líneas Hough')
    plt.axis('off')
    plt.show()

As you can see, it only recognizes horizontal lines. If I reduce the threshold and minLineGap, I get the vertical lines, but the horizontal lines get drawn together. This is how it looks if I reduce minLineGap and threshold to 1:

A higher minLineGap means fewer vertical lines are recognized, while a higher threshold means the vertical lines are drawn as a bunch of horizontal lines:

I don't know if I should change parameters in the thresholding process or use a completely different process altogether to segment the Braille characters.
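Because every grid line is axis-aligned and passes through a known centroid coordinate, the intersections can in principle be computed directly instead of being recovered with a Hough transform. This is a hedged sketch of that alternative: `grid_intersections` is a hypothetical helper, and the `xs` and `ys` values are placeholders standing in for the (already merged) line positions.

```python
from itertools import product

def grid_intersections(xs, ys):
    """Return every (x, y) crossing of vertical lines at xs with
    horizontal lines at ys, ordered row by row."""
    return [(x, y) for y, x in product(sorted(ys), sorted(xs))]

# Placeholder line positions, for illustration only.
xs = [17, 29, 100]
ys = [15, 27, 39]
points = grid_intersections(xs, ys)
print(points[:3])
```

Each intersection can then be compared against the detected centroids to decide whether that grid position holds a dot.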

Edit:

I have taken another approach: I shift contours whose centroids are one pixel apart horizontally onto the same column and redraw them. I believe this makes it easier to work out the segmentation dimensions from the distances between glyphs:

    from collections import defaultdict

    import cv2 as cv
    import numpy as np

    # One random color per column, created on first access.
    colors = defaultdict(lambda: (np.random.randint(0, 220), np.random.randint(0, 220), np.random.randint(0, 220)))
    column_coors = defaultdict(set)
    dimensions = set()

    for contour in filtered_contours:
        center = cv.moments(contour)
        contour_width, contour_height = cv.boundingRect(contour)[2:]
        x, y = int(center["m10"] / center["m00"]), int(center["m01"] / center["m00"])

        # If a column already exists one pixel to the left or right, snap to it.
        already_added = [column_coors.get(x - 1), column_coors.get(x + 1)]
        if already_added[0] or already_added[1]:
            shift_value = -1 if already_added[0] else 1
            x = x + shift_value
            # Shift every contour point by the same amount, keeping the integer dtype.
            contour = contour.copy()
            contour[:, :, 0] += shift_value

        if (x, y) not in column_coors[x]:
            column_coors[x].add((x, y))
            dimensions.add((contour_width, contour_height))
            color = colors[x] if x in colors else colors[x - 1] if x - 1 in colors else colors[x + 1]
            cv.drawContours(rotated_image, [contour], -1, color, 1)

    # Sort y values (rows) from upper to lower within the same x value (column).
    for x in column_coors.keys():
        column_coors[x] = sorted(column_coors[x], key=lambda c: c[1])

> Column coordinates: defaultdict(<function <lambda> at 0x7fdead080a40>, {17: [(17, 15), (17, 39)], 29: [(29, 15)], 100: [(100, 17)], 112: [(112, 29)], 208: [(208, 17), (208, 28)], 220: [(220, 16), (220, 29)], 289: [(289, 16), (289, 40)], 301: [(301, 40)], 372: [(372, 27), (372, 39)], 385: [(385, 15)], 455: [(455, 27), (455, 39)], 467: [(467, 15), (467, 27)], 537: [(537, 17)], 645: [(645, 15), (645, 27), (645, 39)], 727: [(727, 17)], 833: [(833, 17)], 845: [(845, 17)], 917: [(917, 17)], 998: [(998, 15), (998, 27)], 1010: [(1010, 15), (1010, 27), (1010, 39)], 1081: [(1081, 17)]})
>
> Glyphs Bounding Rect Dimensions: {(4, 4), (5, 5), (7, 7), (6, 5), (6, 7), (5, 6), (6, 6)}
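As an illustration of how the distances between columns might be used, the sketch below groups the column x values printed above into candidate cells whenever two neighbouring columns are close together. `group_columns` and the `max_gap` value of 20 pixels are assumptions chosen for this particular output, not part of the code above.

```python
def group_columns(column_xs, max_gap=20):
    """Group sorted column x values into runs whose consecutive gaps
    are at most max_gap pixels."""
    groups = []
    for x in sorted(column_xs):
        if groups and x - groups[-1][-1] <= max_gap:
            groups[-1].append(x)   # close to the previous column: same cell
        else:
            groups.append([x])     # large gap: start a new cell
    return groups

# Column x values copied from the output above.
column_xs = [17, 29, 100, 112, 208, 220, 289, 301, 372, 385, 455, 467,
             537, 645, 727, 833, 845, 917, 998, 1010, 1081]
cells = group_columns(column_xs)
print(cells)
```

Groups with a single column remain ambiguous, since this grouping alone cannot tell whether the dots belong to the left or the right column of a cell. Resolving that would need the expected cell spacing.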

Edit 2:

I was asked which research papers my attempts at segmenting the Braille characters are based on:

- Hough Lines (Section II A)

- Fixed distance segmentation (Section 2.4)