The behavior you describe is correct.

If you create a private serial queue, regardless of its target, it will perform the tasks serially. The concurrent nature of the target queue does not affect the serial nature of your private queue.

If you create a private concurrent queue whose target is serial, your private queue will be constrained to the serial behavior of the underlying target queue.
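To make these two cases concrete, here is a minimal sketch. The ConcurrencyProbe class, the peakOverlap(on:count:) helper, and the queue labels are hypothetical names introduced for illustration; they are not part of GCD. The probe counts how many blocks are in flight at once, so under the rules described above both queues should report a peak of 1.

```swift
import Foundation

/// Hypothetical helper: records the highest number of blocks seen running at once.
final class ConcurrencyProbe: @unchecked Sendable {
    private let lock = NSLock()
    private var running = 0
    private var peak = 0

    func enter() {
        lock.lock(); defer { lock.unlock() }
        running += 1
        peak = max(peak, running)
    }

    func leave() {
        lock.lock(); defer { lock.unlock() }
        running -= 1
    }

    var maxObserved: Int {
        lock.lock(); defer { lock.unlock() }
        return peak
    }
}

/// Hypothetical helper: submits `count` short blocks to `queue`, waits for all
/// of them, and returns the peak number that overlapped.
func peakOverlap(on queue: DispatchQueue, count: Int = 4) -> Int {
    let probe = ConcurrencyProbe()
    let group = DispatchGroup()
    for _ in 0..<count {
        queue.async(group: group) {
            probe.enter()
            Thread.sleep(forTimeInterval: 0.05)   // illustrative delay only
            probe.leave()
        }
    }
    group.wait()
    return probe.maxObserved
}

let serialTarget = DispatchQueue(label: "demo.serialTarget")
let concurrentTarget = DispatchQueue(label: "demo.concurrentTarget", attributes: .concurrent)

// Concurrent queue constrained by a serial target.
let constrained = DispatchQueue(label: "demo.constrained", attributes: .concurrent, target: serialTarget)
// Serial queue whose target happens to be concurrent.
let stillSerial = DispatchQueue(label: "demo.stillSerial", target: concurrentTarget)

print("constrained:", peakOverlap(on: constrained))   // should print 1
print("stillSerial:", peakOverlap(on: stillSerial))   // should print 1
```

Passing a plain concurrent queue with no target to the same helper would typically report a peak greater than 1, though that depends on how many threads the system makes available.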

This raises the question of what the purpose of a target queue is.

A good example would be an “exclusion queue”. See the WWDC 2017 video “Modernizing Grand Central Dispatch Usage”. The idea is that you might have two separate serial queues, but you want them to run exclusively of each other, so you create yet another serial queue and use that as the target for the other two. It avoids unnecessary context switches, ensures serial behavior across multiple queues, etc. See that video for more information.
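A minimal sketch of that arrangement might look like the following. The queue labels, the OverlapTracker class, and the peakOverlapAcross(_:_:) helper are hypothetical and exist only to show that blocks submitted to the two queues do not overlap once they share a serial target.

```swift
import Foundation

/// Hypothetical helper: tracks the peak number of blocks running at once.
final class OverlapTracker: @unchecked Sendable {
    private let lock = NSLock()
    private var running = 0
    private var highest = 0

    func begin() {
        lock.lock(); defer { lock.unlock() }
        running += 1
        highest = max(highest, running)
    }

    func end() {
        lock.lock(); defer { lock.unlock() }
        running -= 1
    }

    var peak: Int {
        lock.lock(); defer { lock.unlock() }
        return highest
    }
}

/// Hypothetical helper: alternates submissions between two queues and
/// returns the peak number of blocks that ran at the same time.
func peakOverlapAcross(_ first: DispatchQueue, _ second: DispatchQueue) -> Int {
    let tracker = OverlapTracker()
    let group = DispatchGroup()
    for i in 0..<6 {
        let queue = i.isMultiple(of: 2) ? first : second
        queue.async(group: group) {
            tracker.begin()
            Thread.sleep(forTimeInterval: 0.02)   // illustrative delay only
            tracker.end()
        }
    }
    group.wait()
    return tracker.peak
}

// The shared serial target: the "exclusion queue".
let exclusion = DispatchQueue(label: "demo.exclusion")

// Two separate serial queues that both funnel into the exclusion queue.
let queueA = DispatchQueue(label: "demo.queueA", target: exclusion)
let queueB = DispatchQueue(label: "demo.queueB", target: exclusion)

print("peak overlap:", peakOverlapAcross(queueA, queueB))   // should print 1
```

Each queue still preserves its own FIFO ordering; the shared target only adds the guarantee that a block from queueA never runs at the same time as a block from queueB.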

Loosely related, see the setTarget(_:) documentation, which offers a little context for targets:

> The target queue defines where blocks run, but it doesn't change the semantics of the current queue. Blocks submitted to a serial queue still execute serially, even if the underlying target queue is concurrent. In addition, you can't create concurrency where none exists. If a queue and its target queue are both serial, submitting blocks to both queues doesn't cause those blocks to run concurrently. The blocks still run serially in the order the target queue receives them.

By the way, five iterations may go quickly enough that you may not see parallel execution, even with a concurrent queue:

    let queue = DispatchQueue(label: "concurrentQueue", attributes: .concurrent)

    queue.async {
        for i in 1...5 {
            print(i)
        }
    }

    queue.async {
        for i in 6...10 {
            print(i)
        }
    }

That will often mislead you into concluding that execution is still serial:

    1
    2
    3
    4
    5
    6
    7
    8
    9
    10

It actually is concurrent, but the first block finishes before the second one gets a chance to start!

You may have to introduce a little delay to manifest the concurrent execution. While you should practically never call Thread.sleep in real code, for illustrative purposes it slows things down enough to demonstrate the concurrent execution:

    let queue = DispatchQueue(label: "concurrentQueue", attributes: .concurrent)

    queue.async {
        for i in 1...5 {
            print(i)
            Thread.sleep(forTimeInterval: 1)
        }
    }

    queue.async {
        for i in 6...10 {
            print(i)
            Thread.sleep(forTimeInterval: 1)
        }
    }

That yields:

    1
    6
    7
    2
    8
    3
    4
    9
    5
    10

Or, if you are ambitious, you can use “Instruments” » “Time Profiler” with the following code:

    import os.log

    let poi = OSLog(subsystem: "Demo", category: .pointsOfInterest)

And then:

    let queue = DispatchQueue(label: "concurrentQueue", attributes: .concurrent)

    queue.async {
        for i in 1...5 {
            let id = OSSignpostID(log: poi)
            os_signpost(.begin, log: poi, name: "first", signpostID: id, "Start %d", i)
            print(i)
            Thread.sleep(forTimeInterval: 1)
            os_signpost(.end, log: poi, name: "first", signpostID: id, "End %d", i)
        }
    }

    queue.async {
        for i in 6...10 {
            let id = OSSignpostID(log: poi)
            os_signpost(.begin, log: poi, name: "second", signpostID: id, "Start %d", i)
            print(i)
            Thread.sleep(forTimeInterval: 1)
            os_signpost(.end, log: poi, name: "second", signpostID: id, "End %d", i)
        }
    }

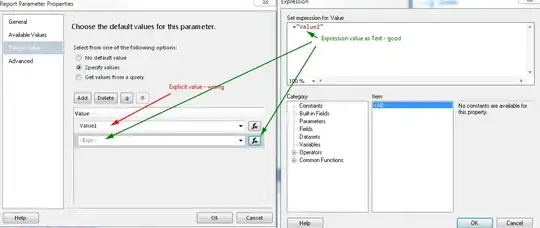

And you can visually see the parallel execution if you use Xcode’s “Product” » “Profile” » “Time Profiler”:

For more information, see “How to identify key events in Xcode Instruments?”

But, again, avoid Thread.sleep in real code; just make sure you are doing enough work in the loop to manifest the parallel execution.
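As a rough sketch of that idea, the delay can be replaced with CPU-bound work. The busyWork(iterations:) function and its iteration count are hypothetical stand-ins for whatever real work your loop performs. The interleaving you see depends on the machine and the build settings, so no particular output order is implied.

```swift
import Foundation

/// Hypothetical stand-in for real work: sums the squares of 0..<iterations.
func busyWork(iterations: Int) -> UInt64 {
    var sum: UInt64 = 0
    for n in 0..<UInt64(iterations) {
        sum = sum &+ (n &* n)   // wrapping arithmetic avoids overflow traps
    }
    return sum
}

let queue = DispatchQueue(label: "concurrentQueue", attributes: .concurrent)
let group = DispatchGroup()

queue.async(group: group) {
    for i in 1...5 {
        // Printing the result keeps the work from being discarded as unused.
        print(i, busyWork(iterations: 5_000_000))
    }
}

queue.async(group: group) {
    for i in 6...10 {
        print(i, busyWork(iterations: 5_000_000))
    }
}

// Wait for both blocks so a command-line program does not exit early.
group.wait()
```

If the two ranges still print back to back, increasing the iteration count should give the second block time to start before the first finishes.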