I have some understanding of how memory is allocated and garbage-collected in C#, but GC is still not entirely clear to me, even after reading multiple articles and trying a few test C# programs. To simplify the question, I created a dummy ASP.NET Core Web API project with a `DummyPojo` class that holds one `int` property, and a `DummyClass` that creates 100,000 `DummyPojo` objects. Even after a forced GC, the dead objects are not collected. Can someone please shed some light on this?

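```csharp
using System;
using System.Threading.Tasks;

public class DummyPojo
{
    public int MyInt { get; set; }

    // Finalizer with an intentionally empty body; uncomment the line to trace calls.
    ~DummyPojo()
    {
        //Debug.WriteLine("Finalizer");
    }
}

public class DummyClass
{
    public async Task TestObjectsMemory()
    {
        // Wait 10 seconds (time to take the "before" snapshot).
        await Task.Delay(1000 * 10);

        CreatePerishableObjects();

        // Wait 10 seconds (time to take the "after creating objects" snapshot).
        await Task.Delay(1000 * 10);

        // Force a full, blocking collection of all generations.
        GC.Collect();
    }

    private void CreatePerishableObjects()
    {
        // Nothing keeps a reference to each object once the loop moves on.
        for (int i = 0; i < 100000; i++)
        {
            DummyPojo obj = new() { MyInt = i };
        }
    }
}
```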

Then, in my `Program.cs` file, I just call the method to start creating the objects:

```csharp
DummyClass dc = new DummyClass();
_ = dc.TestObjectsMemory(); // fire-and-forget: the returned task is deliberately not awaited
```
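A stripped-down console version of the same steps may be easier for others to reproduce. The sketch below is an assumption, not the code used for the snapshots: it drops ASP.NET Core and the 10-second delays, and instead of Visual Studio snapshots it records the managed heap size with `GC.GetTotalMemory` and the per-generation collection counts with `GC.CollectionCount`. `ConsoleRepro`, `GcSample`, and `Run` are hypothetical names.

```csharp
using System;
using System.Runtime.CompilerServices;

// Hypothetical record holding the figures taken at one point of the experiment.
public sealed record GcSample(string Label, long ManagedBytes, int Gen0, int Gen1, int Gen2);

public static class ConsoleRepro
{
    // Private copy of the question's DummyPojo, including its empty finalizer.
    private sealed class DummyPojo
    {
        public int MyInt { get; set; }
        ~DummyPojo() { }
    }

    private static GcSample Sample(string label) =>
        new(label,
            GC.GetTotalMemory(forceFullCollection: false), // managed heap only
            GC.CollectionCount(0),
            GC.CollectionCount(1),
            GC.CollectionCount(2));

    // NoInlining keeps the loop in its own stack frame, so nothing in Run
    // can keep the last object alive once this method returns.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static void CreatePerishableObjects(int count)
    {
        for (int i = 0; i < count; i++)
        {
            DummyPojo obj = new() { MyInt = i };
        }
    }

    public static GcSample[] Run(int count = 100_000)
    {
        GcSample before = Sample("before");
        CreatePerishableObjects(count);
        GcSample afterCreate = Sample("after create");
        GC.Collect(); // same single forced collection as TestObjectsMemory
        GcSample afterCollect = Sample("after GC.Collect");

        GcSample[] samples = { before, afterCreate, afterCollect };
        foreach (GcSample s in samples)
        {
            Console.WriteLine(
                $"{s.Label,-18} heap={s.ManagedBytes,12:N0} B  gen0={s.Gen0} gen1={s.Gen1} gen2={s.Gen2}");
        }
        return samples;
    }
}
```

It can be called from `Program.cs` the same way as `DummyClass`, e.g. `ConsoleRepro.Run();`. The printed numbers will vary with the runtime version, build configuration, and GC mode.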

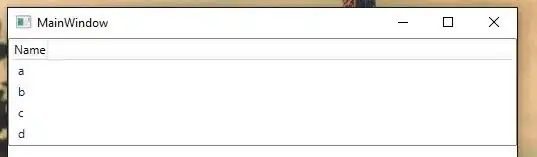

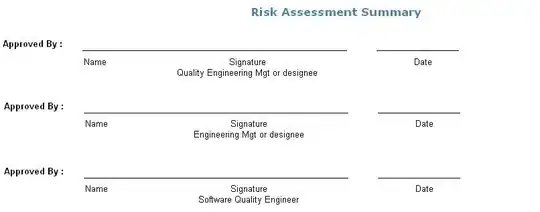

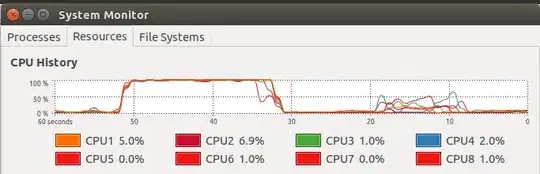

I ran the program and, using the 10-second waits, took three memory snapshots with Visual Studio's memory diagnostic tool: one before creating the objects, one after creating them, and one after triggering the GC.

My questions are:

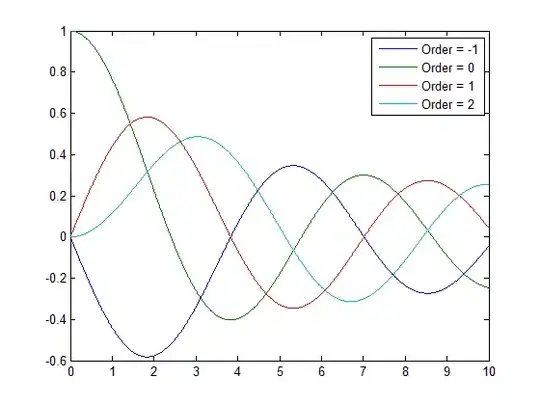

Why is memory usage not going back down to the original size? I understand that the GC normally runs only when it is needed, for example under memory pressure. In my case I triggered the GC explicitly, and I can see finalizers being called, so I expected the GC to collect the dead objects and compact the heap. As far as I understand, this has nothing to do with the LOH, since I did not use any large objects or arrays. Please see the images for the numbers from each snapshot and the GC counts.

- Is the 4 MB jump from 114 MB to 118 MB in the second snapshot caused by the dead objects?

- Why did it jump to 125 MB in the third snapshot, even after the forced GC, instead of going back down to 114 MB?
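When reading these numbers it may help to separate the managed heap from whole-process memory. Process memory also includes the runtime itself, JIT-compiled code, native allocations, and memory the GC has committed but not necessarily returned to the operating system after a collection, so it does not have to drop when the heap shrinks. A minimal sketch that logs both kinds of figure, assuming .NET 5 or later (for `GCMemoryInfo.TotalCommittedBytes`); `MemoryReport`, `Figures`, `Measure`, and `Describe` are hypothetical names.

```csharp
using System;

// Hypothetical helper: contrasts managed-heap figures with process-level memory.
public static class MemoryReport
{
    public sealed record Figures(long ManagedHeapBytes, long GcCommittedBytes, long WorkingSetBytes);

    public static Figures Measure()
    {
        // Bytes the GC currently considers allocated on the managed heap.
        long managed = GC.GetTotalMemory(forceFullCollection: false);

        // Memory the GC had committed for the managed heap, as of the last collection.
        long committed = GC.GetGCMemoryInfo().TotalCommittedBytes;

        // Physical memory mapped to the whole process (runtime, JIT code, native heaps, GC heap).
        long workingSet = Environment.WorkingSet;

        return new Figures(managed, committed, workingSet);
    }

    public static string Describe(string label)
    {
        Figures f = Measure();
        const double Mb = 1024.0 * 1024.0;
        return $"{label}: managed heap {f.ManagedHeapBytes / Mb:F1} MB, " +
               $"GC committed {f.GcCommittedBytes / Mb:F1} MB, " +
               $"working set {f.WorkingSetBytes / Mb:F1} MB";
    }
}
```

Logging `MemoryReport.Describe("after GC.Collect")` (and the same for the other two points) would show whether the managed heap shrinks even when the process-level figure does not.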

While memory keeps increasing, the snapshot differences are shown in green, which indicates a decrease. Why would it show the opposite?

When I open snapshot #3, I still see dead `DummyPojo` objects. Shouldn't they have been collected by the forced GC?
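One detail that may be relevant to the dead `DummyPojo` objects: because `DummyPojo` declares a finalizer (even an empty one), a collection that finds such an object unreachable does not reclaim it right away. It queues the object for finalization, and the memory is freed only by a later collection after the finalizer has run. The sketch below tries to make that visible with a long weak reference (`trackResurrection: true`), which stays alive until the object is actually reclaimed. `FinalizerDemo` and `Finalizable` are hypothetical names, and the outcome assumes full blocking collections with nothing else holding the object.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

public static class FinalizerDemo
{
    private static int _finalized;

    // Stand-in for DummyPojo: any type that declares a finalizer behaves this way.
    private sealed class Finalizable
    {
        ~Finalizable() => Interlocked.Increment(ref _finalized);
    }

    // NoInlining keeps the object's only strong reference inside this frame.
    [MethodImpl(MethodImplOptions.NoInlining)]
    private static WeakReference CreateTracked() =>
        new WeakReference(new Finalizable(), trackResurrection: true);

    public static (bool AliveAfterFirstGc, bool AliveAfterSecondGc, int Finalized) Run()
    {
        _finalized = 0;
        WeakReference tracked = CreateTracked();

        GC.Collect();                  // finds the object dead and queues its finalizer
        bool aliveAfterFirst = tracked.IsAlive;

        GC.WaitForPendingFinalizers(); // let the finalizer thread run ~Finalizable
        GC.Collect();                  // now the object's memory can be reclaimed
        bool aliveAfterSecond = tracked.IsAlive;

        return (aliveAfterFirst, aliveAfterSecond, Volatile.Read(ref _finalized));
    }
}
```

With only the single `GC.Collect()` in `TestObjectsMemory`, finalizable objects like `DummyPojo` are not reclaimed until some later collection runs.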