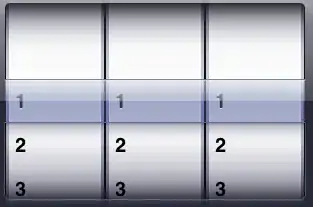

The idea is to isolate the smallest area around the values via cropping:

You can then use the x0 position of each word as your vertical line.

You can pass the lines to table settings via explicit_vertical_lines which will give back empty strings for the "blank" cells.

col1.extract_text()='406831 DJ20N\n1000 NERO'

MBELT W.40 GG MAR DOLLAR PIGPRINT

['60', '65', '70', '75', '80', '85', '90', '95', '100', '105', '110', '115', '120']

['', '', '0', '0', '2', '6', '10', '10', '10', '2', '2', '', '']

col3.extract_text()='42 218.00 9,15\n9,156.0'

col1.extract_text()='414516 0YA0G\n1000 BLACK'

MBELT W.30 GG MAR. PLUTONE CALF

['65', '70', '75', '80', '85', '90', '95', '100', '105', '110', '115', '120', '135']

['0', '0', '0', '2', '6', '15', '15', '15', '2', '2', '0', '0', '']

col3.extract_text()='57 205.00 11,6\n11,685.0'

col1.extract_text()='406831 0YA0G\n1000 BLACK'

MBELT W.40 GG MAR PLUTONE CALF

['60', '65', '70', '75', '80', '85', '90', '95', '100', '105', '110', '115', '120']

['', '', '', '', '', '2', '2', '2', '2', '2', '1', '', '']

col3.extract_text()='11 218.00 2,39\n2,398.0'

col1.extract_text()='627055 92TIN\n9769 B.EBONY/NERO'

MBELT W.37GG M.R T.GG SUPREME/PLUTONE CALF

['60', '65', '70', '75', '80', '85', '90', '95', '100', '105', '110', '115', '120']

['', '', '', '', '', '3', '3', '3', '3', '3', '1', '', '']

col3.extract_text()='16 244.00 3,90\n3,904.0'

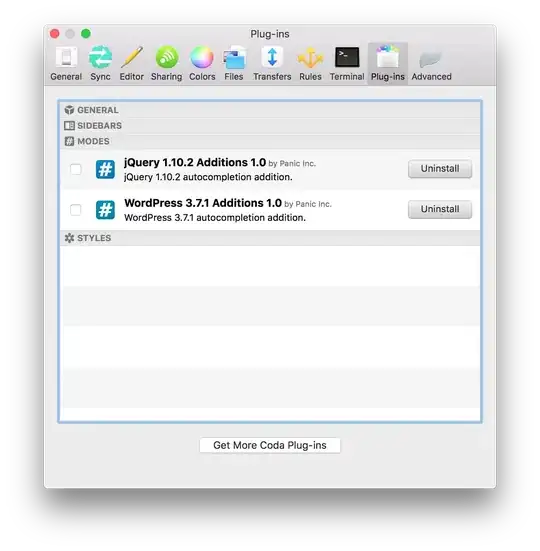

There are various ways you could approach this, but the steps I've used here are:

- use Description header line to identify the "top" of the rows if present

- split into rows based on the thick/dark horizontal lines

- split each row into columns based on vertical lines within row

- use the method above for creating a table from column 2

These are the horizontal lines we can use to split into rows:

These are the vertical lines we can use to divide the columns:

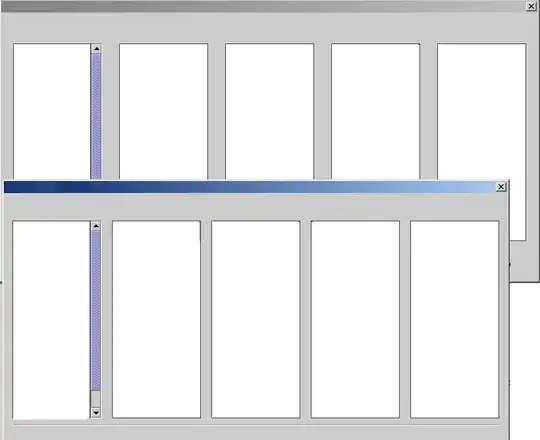

import itertools

# First thick horizontal line that span > width % of page

product_line = next(

line for line in page.horizontal_edges

if line['orientation'] == 'h'

and line['linewidth'] > 1

and line['width'] > page.width / 1.25

)

# Search for Description

description = page.search('Description/Size Quantity Qty Price Value')

has_description = len(description) > 0

# If there is a description we crop there, else we use the line divider

if has_description:

description = description[0]

product_area_top = description['bottom'] + 10

product_area = page.crop(

(product_line['x0'], product_area_top, product_line['x1'], page.height)

)

else:

product_area_top = product_line['top']

product_area = page.crop(

(product_line['x0'], product_area_top, product_line['x1'], page.height)

)

# find horizontal lines for rows

hlines = [

line['top'] for line in product_area.edges

if line['orientation'] == 'h'

and line['stroking_color'] == (0, 0, 0)

and line['width'] > product_area.width / 1.25

]

# If there is no description on the page we need to add in the top as the first line (in order to extract row 1)

if has_description is False:

hlines = [product_area_top] + hlines

# Make sure our lines are sorted from top -> bottom

hlines = sorted(set(hlines))

for top, bottom in itertools.pairwise(hlines):

row = product_area.crop(

(product_area.bbox[0], top, product_area.width, bottom)

)

# vertical lines to create columns

vlines = [

line['x0'] for line in row.vertical_edges

if line['object_type'] == 'line'

]

# we need to add an end line to extract last column

vlines = sorted(vlines + [row.width])

col1 = row.crop((vlines[0], top, vlines[1], bottom))

col2 = row.crop((vlines[1], top, vlines[2], bottom))

col3 = row.crop((vlines[2], top, vlines[3], bottom))

lines = col2.extract_text_lines()

# lines 1-2 are the values, use their positions to crop

bbox = lines[1]['x0'], lines[1]['top'], lines[-1]['x1'], lines[-2]['bottom']

values = col2.crop(bbox)

# use start of each word as a vertical line edge

vlines = [word['x0'] for word in values.extract_words()]

table = values.extract_table(dict(

explicit_vertical_lines = vlines

))

print(f'{col1.extract_text()=}')

print(lines[0]['text'], table[0], table[1], sep='\n')

print(f'{col3.extract_text()=}')

You may be able to extract the other values simply from the text extraction methods, or you could use a similar cropping technique on col1, col3.