To copy data incrementally from Azure Cosmos DB (MongoDB API) to Azure Blob Storage, you need to maintain a watermark table. This table holds the timestamp of the last pipeline run. If you store the watermark table in the MongoDB API, ADF doesn't have an option to query it, so I am keeping the watermark table in Azure SQL Database instead.

Initially, the watermark table stores the value 1900-01-01, so the first run picks up every existing row.
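A minimal sketch of that starting state, using Python's built-in `sqlite3` purely as a local stand-in for Azure SQL Database. The table and column names (`watermark`, `date_col`) come from the queries in this answer; the `TEXT` column type is an assumption made for the sketch.

```python
import sqlite3

# sqlite3 is only a local stand-in for Azure SQL Database here.
conn = sqlite3.connect(":memory:")

# One-row table holding the timestamp of the last pipeline run.
# The TEXT type is an assumption for this sketch.
conn.execute("CREATE TABLE watermark (date_col TEXT NOT NULL)")

# Seed with a date older than any document so the first run copies everything.
conn.execute("INSERT INTO watermark (date_col) VALUES ('1900-01-01')")
conn.commit()

# The same query the Lookup activity runs.
initial_watermark = conn.execute("SELECT date_col FROM watermark").fetchone()[0]
print(initial_watermark)  # 1900-01-01
```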

Steps to follow in ADF:

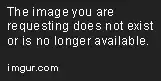

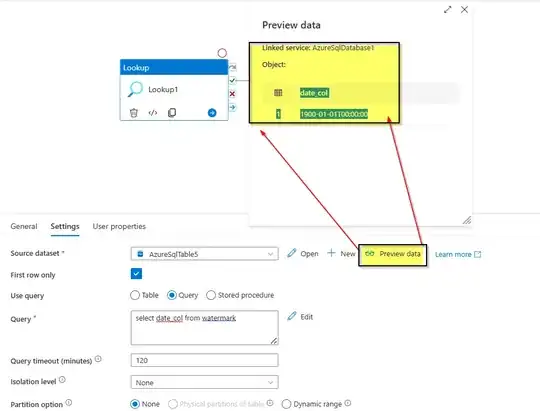

- Add a Lookup activity with a dataset pointing to the watermark table. Use the query:

select date_col from watermark

- Add a Copy activity after the Lookup activity and use a MongoDB source dataset. In the filter, enter the following so that only rows newer than the watermark value are copied.

{"created_date":{$gt:@{activity('Lookup1').output.firstRow.date_col}}}

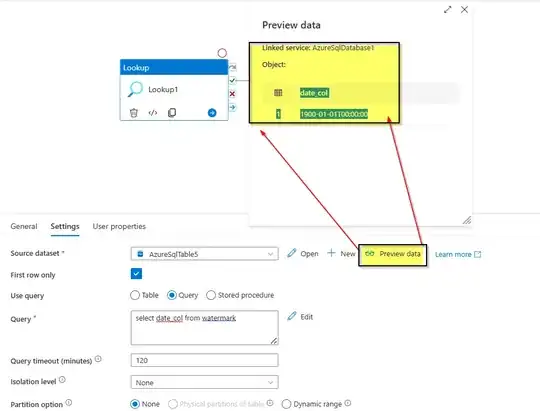

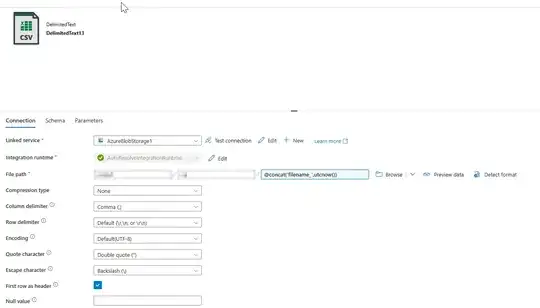
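To make the filter concrete, here is a rough Python sketch of the text the source receives once the Lookup value has been substituted in. It assumes `created_date` is stored as an ISO 8601 string, in which case the value has to be quoted for the filter to be valid JSON; if your field is a BSON date, the filter would need a date form instead. `build_filter` is a hypothetical helper, not part of ADF.

```python
import json


def build_filter(watermark: str) -> str:
    """Return the MongoDB filter text for documents newer than the watermark."""
    # Assumption: created_date is an ISO 8601 string, so a plain string
    # comparison matches chronological order.
    return json.dumps({"created_date": {"$gt": watermark}})


filter_text = build_filter("1900-01-01")
print(filter_text)  # {"created_date": {"$gt": "1900-01-01"}}
```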

- Create a sink dataset and make the file name dynamic:

@concat('filename_',utcnow())

This appends the current UTC date and time to the file name, so each run writes to a new file.

- Add a Script activity after the Copy activity with the query:

update watermark set date_col='@{utcNow()}'

This updates the watermark table with the current UTC time. On the next pipeline run, only rows created in the MongoDB API after that time are copied, and they go to a new file.
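The whole flow can be sketched in plain Python to check the logic outside ADF. This is only a simulation under stated assumptions: the collection is an in-memory list, `created_date` values are ISO 8601 strings, the timestamps are made up, and `run_pipeline` is a hypothetical helper standing in for the Lookup, Copy, and Script activities.

```python
def run_pipeline(collection, watermark, now):
    """Simulate one run: filter by watermark, name the file, advance the watermark."""
    # Copy step: keep only documents created after the stored watermark.
    copied = [doc for doc in collection if doc["created_date"] > watermark]
    # Sink file name, mirroring concat('filename_', utcnow()).
    file_name = "filename_" + now
    # Script step: the new watermark is the current UTC time.
    return copied, file_name, now


# Hypothetical documents standing in for the MongoDB API collection.
collection = [
    {"_id": 1, "created_date": "2024-01-01T08:00:00Z"},
    {"_id": 2, "created_date": "2024-01-02T08:00:00Z"},
]

# First run: the seed watermark is older than every document.
copied, file_name, watermark = run_pipeline(
    collection, "1900-01-01", "2024-01-03T00:00:00Z"
)
first_run_ids = [doc["_id"] for doc in copied]
print(first_run_ids)  # [1, 2]

# A new document arrives after the first run.
collection.append({"_id": 3, "created_date": "2024-01-04T08:00:00Z"})

# Second run: only the new document passes the filter.
copied, file_name, watermark = run_pipeline(
    collection, watermark, "2024-01-05T00:00:00Z"
)
second_run_ids = [doc["_id"] for doc in copied]
print(second_run_ids)  # [3]
```

In a real pipeline the copy takes time, so a row created while the copy is running could fall before the new watermark without having been copied. Capturing the timestamp before the copy starts and writing that value to the watermark table is one way to reduce that risk.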