I would like to measure the execution time of various code snippets in Python using Jupyter notebooks. Jupyter notebooks offer the %timeit and %%timeit magics to measure the execution time of a line or a cell.

The Jupyter documentation for %timeit states:

-r<R>: number of repeats <R>, each consisting of <N> loops, and take the best result. Default: 7

This suggests that the best of the repeats should be reported, which is in line with general profiling best practices. The timeit.repeat documentation states this explicitly:

Note: It’s tempting to calculate mean and standard deviation from the result vector and report these. However, this is not very useful. In a typical case, the lowest value gives a lower bound for how fast your machine can run the given code snippet; higher values in the result vector are typically not caused by variability in Python’s speed, but by other processes interfering with your timing accuracy. So the min() of the result is probably the only number you should be interested in. After that, you should look at the entire vector and apply common sense rather than statistics.
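For reference, here is a minimal sketch of what that note recommends, using the plain timeit module outside of the magic. The snippet being timed and the repeat and loop counts are arbitrary choices for illustration, not values taken from the documentation.

```python
import timeit
from statistics import mean, stdev

# Illustrative snippet and counts (assumptions, chosen only for this sketch).
stmt = "u is None"
setup = "u = None"
loops = 100_000
repeats = 7

# Each entry is the total time in seconds for one repeat of `loops` executions.
times = timeit.repeat(stmt, setup=setup, repeat=repeats, number=loops)

# Convert to time per loop so the numbers are comparable to %timeit output.
per_loop = [t / loops for t in times]
best = min(per_loop)
avg = mean(per_loop)
spread = stdev(per_loop)

print(f"min:  {best * 1e9:.1f} ns per loop")
print(f"mean: {avg * 1e9:.1f} ns ± {spread * 1e9:.1f} ns per loop")
# The full vector, sorted, for the "apply common sense" step.
print(sorted(per_loop))
```

The minimum can never exceed the mean, so any gap between the two comes from the slower repeats in the vector.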

However, when we execute a cell in Jupyter with the %timeit magic, it reports mean ± std. dev.:

In [1]: %timeit pass

8.26 ns ± 0.12 ns per loop (mean ± std. dev. of 7 runs, 100000000 loops each)

In [2]: u = None

In [3]: %timeit u is None

29.9 ns ± 0.643 ns per loop (mean ± std. dev. of 7 runs, 10000000 loops each)

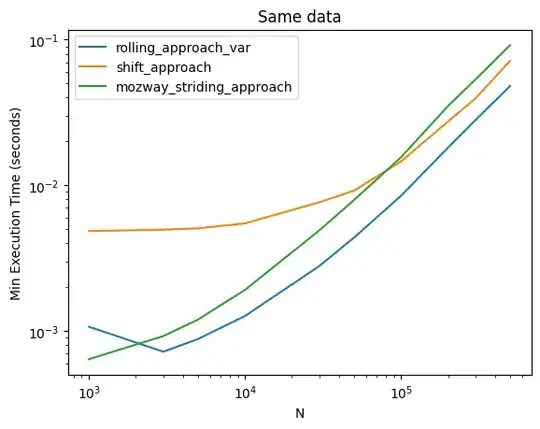

There is a significant difference between taking the mean of multiple runs and taking the min:

    for approach in approaches:
        function = partial(approach, *data)
        times = timeit.Timer(function).repeat(repeat=number_of_repetitions, number=10)
        approach_times_min[approach].append(min(times))
        approach_times_mean[approach].append(mean(times))

This can lead to wrong conclusions.
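To make the comparison reproducible, here is a self-contained sketch of the loop above. The two approaches (with_sum, with_loop), the input data, and the value of number_of_repetitions are hypothetical stand-ins, and mean is assumed to come from the statistics module.

```python
import timeit
from functools import partial
from statistics import mean

# Hypothetical approaches, used only to make the loop runnable.
def with_sum(values):
    return sum(values)

def with_loop(values):
    total = 0
    for v in values:
        total += v
    return total

approaches = [with_sum, with_loop]
data = (list(range(1_000)),)   # positional arguments passed to each approach
number_of_repetitions = 5      # assumed value for this sketch

approach_times_min = {approach: [] for approach in approaches}
approach_times_mean = {approach: [] for approach in approaches}

for approach in approaches:
    function = partial(approach, *data)
    # Each entry in `times` is the total time for 10 calls of `function`.
    times = timeit.Timer(function).repeat(repeat=number_of_repetitions, number=10)
    approach_times_min[approach].append(min(times))
    approach_times_mean[approach].append(mean(times))

for approach in approaches:
    print(
        approach.__name__,
        f"min={approach_times_min[approach][0]:.6f}s",
        f"mean={approach_times_mean[approach][0]:.6f}s",
    )
```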

Why does the behaviour of %timeit differ from what the documentation states? Can I change a setting to make it report the minimum instead?