I am working on extracting sensor data recorded during the operation of a machine from a large time-series dataset.

The data can be accessed through either a Google Sheets link or a Dropbox link.

The machine undergoes two distinct movements: upward and downward. I have a data frame that includes the following variables:

- Dates (milliseconds included)

- Sensor 1

- Sensor 2

- UP: Has a value of 1 when the machine is moving up and -1 when it isn't

- DOWN: Has a value of 1 when the machine is moving down and -1 when it isn't

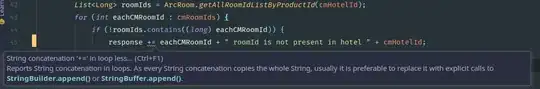

I can already extract data by using the "UP" and "DOWN" variables to identify machine movement, but these variables lag behind the actual movement: the machine starts moving up or down before the "UP" or "DOWN" variable switches to 1. As a result, the beginning of each movement is missing from the extracted data.
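As an illustration, flag-based extraction of this kind might look like the following sketch in Python with pandas. The toy values, the column names `Dates`, `Sensor1`, `UP`, and the helper `label_runs` are assumptions made for illustration, not the real data or the exact code in use.

```python
import pandas as pd

# Hypothetical toy data: the sensor starts changing at row 2,
# but the UP flag only switches to 1 at row 4 (the lag described above).
df = pd.DataFrame({
    "Dates": pd.date_range("2023-01-01", periods=10, freq="100ms"),
    "Sensor1": [0.0, 0.0, 0.5, 1.0, 1.5, 2.0, 2.0, 2.0, 2.0, 2.0],
    "UP": [-1, -1, -1, -1, 1, 1, -1, -1, -1, -1],
})

def label_runs(flag: pd.Series) -> pd.Series:
    """Give each consecutive run of flag == 1 its own id (1, 2, ...); 0 elsewhere."""
    active = flag.eq(1)
    # A run starts where the flag is active and the previous row was not.
    starts = active & ~active.shift(fill_value=False)
    return starts.cumsum().where(active, 0)

df["up_run"] = label_runs(df["UP"])

# Keeps only the rows where UP == 1, so the rows before the flag switches are lost.
up_only = df[df["up_run"] > 0]
```

With this toy data, the selection keeps only the flagged rows and misses the earlier rows where the sensor value is already changing.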

To illustrate the problem visually, please refer to the graph below:

My objective is to find a solution that allows me to extract the entire movement (UP/DOWN) from the beginning to the end without losing any data.
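One direction that might work, sketched here under assumptions rather than as a verified solution: move the start of each flagged run backward for as long as a sensor signal is still changing. This assumes the sensor value changes measurably while the machine moves and stays roughly constant at rest, which may not hold for the real data. The function name `extend_runs_backward`, the column names, and the `threshold` value are all hypothetical.

```python
import numpy as np
import pandas as pd

def extend_runs_backward(flag: pd.Series, signal: pd.Series, threshold: float) -> pd.Series:
    """Boolean mask of flag == 1, with each run's start moved back while
    the signal changes by more than `threshold` between consecutive samples."""
    active_s = flag.eq(1)
    active = active_s.to_numpy()
    # moving[i] is True when |signal[i] - signal[i-1]| > threshold (False for row 0).
    moving = signal.diff().abs().gt(threshold).to_numpy()
    # Positions where a flagged run begins.
    starts = np.flatnonzero((active_s & ~active_s.shift(fill_value=False)).to_numpy())
    extended = active.copy()
    for s in starts:
        i = s - 1
        # Walk back until the signal is flat or a previous flagged run is reached.
        while i >= 0 and moving[i] and not active[i]:
            extended[i] = True
            i -= 1
    return pd.Series(extended, index=flag.index)

# Hypothetical toy data: movement begins at row 2, the UP flag at row 4.
df = pd.DataFrame({
    "Sensor1": [0.0, 0.0, 0.5, 1.0, 1.5, 2.0, 2.0, 2.0, 2.0, 2.0],
    "UP": [-1, -1, -1, -1, 1, 1, -1, -1, -1, -1],
})

mask = extend_runs_backward(df["UP"], df["Sensor1"], threshold=0.1)
up_full = df[mask]  # flagged rows plus the preceding rows where the sensor was changing
```

The same function could be applied to the DOWN flag. A noisy sensor would probably need smoothing or a larger threshold first, and if the lag turns out to be roughly constant, shifting the flag by a fixed number of samples may be a simpler alternative.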

The solution could be in R or Python. Thanks in advance.