I have some very large datasets and part of the analysis pipeline is to determine, as the title says, whether each point is bound by a simplex in n dimensions. I'm trying to find a way to calculate this fast without parallelisation if possible. One of the hurdles is that the dimensionality of the datasets varies, so the solution needs to be apply to any dimensions, rather than being fixed at 2D or 3D, for example.

However, for simplicity's sake, I have used 2D examples as they are easy to represent, but in theory, the maths should hold.

Barycentric Coordinates

My initial thought was to use barycentric coordinates, converting from cartesians, as is done here but my implementation of this method proves to be untrustworthy to say the least:

import numpy as np

import matplotlib.pyplot as plt

def is_in_simplex(point, T_inv, simplex):

first_n = np.matmul(

T_inv, (point - simplex[-1])

)

last = 1 - np.sum(first_n)

bary = np.concatenate((first_n, [last]))

return np.all((bary <= 1) & (bary >= 0))

# setup

simplex = np.array([[0, 0,], [8, 8,], [10, 3]])

rng = np.random.default_rng()

test_points = rng.random((10, 2))*10

# Maths starts here

T = np.array(simplex[:-1] - simplex[-1]).T

T_inv = np.linalg.inv(T)

within = np.vectorize(is_in_simplex, excluded={1, 2})(test_points, T_inv, simplex)

# drawing

polygon = np.concatenate([simplex, np.array([simplex[0]])])

print()

plt.plot(*polygon.T)

plt.scatter(*test_points.T)

for i, p in enumerate(test_points, 0):

print(f"{i}\t{p}\t{test_points[i]}\t{within[i]}")

plt.annotate(i, p)

And the output of this is:

0 [4.15391239 4.85852344] [4.15391239 4.85852344] [ True True]

1 [5.24829898 9.22879891] [5.24829898 9.22879891] [ True False]

2 [3.31255765 0.75891285] [3.31255765 0.75891285] [ True True]

3 [3.67468612 1.30045647] [3.67468612 1.30045647] [ True True]

4 [9.95049042 5.932782 ] [9.95049042 5.932782 ] [False True]

5 [8.42621723 6.35824573] [8.42621723 6.35824573] [False True]

6 [4.19569122 3.41275362] [4.19569122 3.41275362] [ True True]

7 [1.57324033 8.00273677] [1.57324033 8.00273677] [False False]

8 [1.9183791 0.54945207] [1.9183791 0.54945207] [ True True]

9 [0.52448473 7.77920839] [0.52448473 7.77920839] [False True]

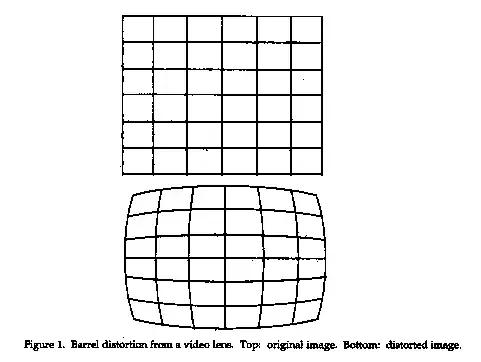

The first column is the index, the second are the cartesian coordinates, the third should be first two barycentric coordinates (should assume they add to 1) and the fourth column should show whether the point lies within the simplex or not.

As you may have noticed, there are a few things wrong. Points 3, 5 and 6 should be labelled as within the simplex, but their barycentric coordinates are completely wrong. As they are bound by the simplex, the barycentric coordinates should be greater than 0 but sum to 1. And the the output of is_in_simplex() is an array, whereas it should be a single boolean for each point.

Not including the RNG, printing and plotting, this takes 0.0383 seconds for ten points, 0.0487 for 100, 0.0994 for 1,000 and 0.523 for 10,000.

Linear Programming

Another approach would be to use a linear programming, but something is off as my timings are far greater than those reported here (second answer, which I used as a starting point for this).

import numpy as np

from scipy.optimize import linprog

import time

def vectorizable(point, simplexT, coeffs):

b = np.r_[point, np.ones(1)]

lp = linprog(coeffs, A_eq = simplexT, b_eq = b)

return lp.success

dims = 2

rng = np.random.default_rng()

test_points = rng.random((10, dims))*10

simplex = np.array([[0, 0,], [8, 8,], [10, 3]])

coeffs = np.zeros(len(simplex))

simplex_T = np.r_[simplex.T,np.ones((1,len(simplex)))]

start_time = time.time()

in_simplex = np.vectorize(vectorizable,

excluded={1, 2},

signature="(n) -> ()")(test_points, simplex_T, coeffs)

print(f"----- {time.time() - start_time} seconds -----")

polygon = np.concatenate([simplex, np.array([simplex[0]])])

print()

plt.plot(*polygon.T)

plt.scatter(*test_points.T)

for i, p in enumerate(test_points, 0):

print(f"{i}\t{p}\t{in_simplex[i]}")

plt.annotate(i, p)

This time, I get the desired result:

----- 0.019016504287719727 seconds -----

0 [5.90479358 5.75174668] True

1 [0.51156474 0.86088186] False

2 [9.22371526 4.025967 ] True

3 [9.35307399 5.38630723] False

4 [2.83575442 5.66318545] False

5 [7.89786072 6.06068206] True

6 [0.09838826 1.38358132] False

7 [3.19776368 9.73562359] False

8 [9.9122709 0.76862067] False

9 [4.52352281 6.2259428 ] False

For 10, 100 and 1,000 points, the timings are more or less in the same order of magnitude. However, when I jump to 10,000 points, I'm suddenly looking at anywhere between 4 and 8 seconds, which is far too slow, and only increases into tens of seconds and minutes when I increase dimensionality.

As mentioned, I would like to avoid parallelisation where possible. Any help/advice regarding the barycentric part would be greatly appreciated, particularly if, if it could work, would be faster than the linear programming approach. And is there any way to accelerate the LP method?

Thanks