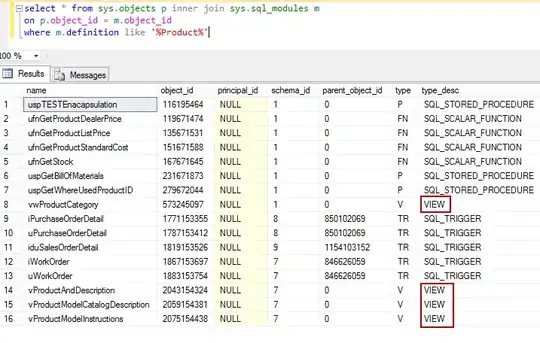

Four weeks of data is too little to draw reliable correlations, of course, but here is one method:

Start by pivoting your data into a time series, then normalize each agent's activity across weeks, and finally compute correlations between the activity timelines.
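As a rough end-to-end sketch of these three steps: the `df` below is hypothetical, rebuilt from the non-zero cells of the pivot table shown further down, since the original data is only visible in the image. Passing `values="Conversions"` is also an assumption, made here to keep the columns flat.

```python
import pandas as pd

# Hypothetical long-format data (one row per influencer and active week),
# reconstructed from the pivot table output; the real `df` may differ.
df = pd.DataFrame({
    "Week":        [1, 1, 2, 2, 3, 3, 3, 4, 4],
    "Influencer":  ["Tim", "Tom", "Stacey", "Tom", "Michi",
                    "Stacey", "Tim", "Michi", "Tom"],
    "Conversions": [2, 3, 2, 5, 6, 3, 5, 5, 2],
})

# 1. Pivot: weeks as rows, influencers as columns, inactive weeks as 0
timeline = df.pivot_table(index="Week", columns="Influencer",
                          values="Conversions").fillna(0)

# 2. Normalize each influencer's column (z-score across weeks)
normalized = (timeline - timeline.mean()) / timeline.std()

# 3. Pairwise Pearson correlations between the normalized timelines
correlations = normalized.corr()
print(correlations.round(2))
```

The sections below go through each step in turn.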

Reshape data as time series

    import pandas as pd

    # One row per week, one column per influencer; weeks without activity become 0
    timeline = pd.pivot_table(data=df,
                              columns=['Influencer'],
                              index=['Week']).fillna(value=0)
    timeline

               Conversions
    Influencer       Michi Stacey  Tim  Tom
    Week
    1                  0.0    0.0  2.0  3.0
    2                  0.0    2.0  0.0  5.0
    3                  6.0    3.0  5.0  0.0
    4                  5.0    0.0  0.0  2.0

It is important to fill missing values with zeros while pivoting. Otherwise (i.e. if you leave them as NaN), further calculations may ignore weeks of total inactivity. Indeed, skipping NaN is the default behaviour of .mean() and .std(), which are used in the next section.
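A small illustration of the difference, using a hypothetical agent who is active in only two of four weeks (the values match Stacey's column, but the series is built by hand here):

```python
import numpy as np
import pandas as pd

# Hypothetical agent: inactive in weeks 1 and 4
with_nan = pd.Series([np.nan, 2.0, 3.0, np.nan], index=[1, 2, 3, 4])
with_zero = with_nan.fillna(0)

# NaNs are skipped by default, so the mean covers the two active weeks only
mean_nan = with_nan.mean()    # (2 + 3) / 2
# Zeros are counted, so the mean covers all four weeks
mean_zero = with_zero.mean()  # (2 + 3) / 4

print(mean_nan, mean_zero)
```

With NaN left in place, the agent looks twice as productive per week as they actually were, and the standard deviation is distorted in the same way.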

Normalize the activity of each agent

Timeline, normalized:

    TN = (timeline - timeline.mean()) / timeline.std()
    TN

               Conversions
    Influencer       Michi    Stacey       Tim       Tom
    Week
    1            -0.858956 -0.833333  0.105802  0.240192
    2            -0.858956  0.500000 -0.740613  1.200961
    3             1.015129  1.166667  1.375424 -1.200961
    4             0.702782 -0.833333 -0.740613 -0.240192

More on this: Normalize columns of a dataframe
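A quick sanity check of this normalization, as a sketch: the `timeline` frame below is typed in by hand from the pivot output above (with flat column names, which is an assumption made for brevity). After z-scoring, every column should have mean 0 and standard deviation 1. Note that pandas' `.std()` uses the sample standard deviation (`ddof=1`) by default.

```python
import pandas as pd

# Pivoted timeline, copied by hand from the output above (flat columns)
timeline = pd.DataFrame(
    {"Michi": [0, 0, 6, 5], "Stacey": [0, 2, 3, 0],
     "Tim": [2, 0, 5, 0], "Tom": [3, 5, 0, 2]},
    index=pd.Index([1, 2, 3, 4], name="Week"),
    dtype=float,
)

# Column-wise z-score: subtract each column's mean, divide by its sample std
normalized = (timeline - timeline.mean()) / timeline.std()

print(normalized.mean().round(12))  # each column is centred on 0
print(normalized.std())             # each column has unit sample std
```

Pearson correlation is unchanged by this kind of shifting and rescaling, so `timeline.corr()` and `normalized.corr()` agree; the normalization mainly helps when timelines are compared or plotted side by side.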

Calculate correlations

Numerical analysis

Correlations between timelines:

    # Select the 'Conversions' level, then correlate the influencer columns
    TN['Conversions'].corr()

    Influencer     Michi    Stacey       Tim       Tom
    Influencer
    Michi       1.000000  0.294995  0.473673 -0.875274
    Stacey      0.294995  1.000000  0.587788 -0.266880
    Tim         0.473673  0.587788  1.000000 -0.779325
    Tom        -0.875274 -0.266880 -0.779325  1.000000

From this we can see that, for example:

- Michi seems to interact very poorly with Tom (-0.88). Indeed, Michi tends not to deliver anything while Tom is active, and vice versa.

- As far as teamwork goes, the Tim & Stacey pair has the highest correlation, at 0.59. Indeed, they both have their production peak in the same week (week 3).
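With more agents, reading the matrix by eye gets tedious. One possible way to rank the pairs, sketched here with the correlation matrix copied by hand from the output above, is to keep the upper triangle only and sort it:

```python
import numpy as np
import pandas as pd

# Correlation matrix copied from the output above
names = ["Michi", "Stacey", "Tim", "Tom"]
corr = pd.DataFrame(
    [[1.000000, 0.294995, 0.473673, -0.875274],
     [0.294995, 1.000000, 0.587788, -0.266880],
     [0.473673, 0.587788, 1.000000, -0.779325],
     [-0.875274, -0.266880, -0.779325, 1.000000]],
    index=names, columns=names,
)

# Keep the strict upper triangle (k=1 drops the diagonal) so that each
# pair appears exactly once, then flatten to a Series indexed by pair
mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
pairs = corr.where(mask).stack().dropna().sort_values()

print(pairs)
print("weakest pair:  ", pairs.idxmin())
print("strongest pair:", pairs.idxmax())
```

The first and last entries of `pairs` are the two pairs discussed in the bullets above.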

Graphical analysis

Alternatively, using e.g. seaborn pairplot:

    import seaborn as sns

    # One panel per pair of influencers, drawn as kernel density estimates
    sns.pairplot(TN['Conversions'], kind='kde')

In each panel, an upward trend suggests possible teamwork, while a downward trend suggests that the pair does not work well together.
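The direction of the trend can also be checked numerically. The sketch below is an addition rather than part of the plotting approach: it fits a least-squares line with `numpy.polyfit` to two pairs of normalized timelines, using a hand-typed copy of the pivoted data with flat column names. For z-scored columns the slope of that line equals the Pearson correlation, so its sign matches the trend seen in the corresponding panel.

```python
import numpy as np
import pandas as pd

# Pivoted timeline, copied by hand from the earlier output (flat columns)
timeline = pd.DataFrame(
    {"Michi": [0, 0, 6, 5], "Stacey": [0, 2, 3, 0],
     "Tim": [2, 0, 5, 0], "Tom": [3, 5, 0, 2]},
    index=pd.Index([1, 2, 3, 4], name="Week"),
    dtype=float,
)
normalized = (timeline - timeline.mean()) / timeline.std()

# Degree-1 fit returns (slope, intercept); only the slope is of interest
slope_tim_stacey, _ = np.polyfit(normalized["Tim"], normalized["Stacey"], deg=1)
slope_michi_tom, _ = np.polyfit(normalized["Michi"], normalized["Tom"], deg=1)

print(slope_tim_stacey)  # positive slope: upward trend
print(slope_michi_tom)   # negative slope: downward trend
```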

To go further: beyond-pairwise correlations, provided the total dataset is large enough.