I'm trying to do 3D reconstruction from 2 images with OpenCV. Based on various info found here on Stack Overflow and elsewhere, I've got a program that runs (i.e. does not crash) and produces 3D reconstructed points, but the results are atrocious.

I'm using 2 stereo frames from the Argoverse 1 dataset, in particular from one of the scenes in Miami with various palm trees and cars on the side of the road so there are many good keypoint matching opportunities, and I think I've got the keypoint matching part down well. Then, I call the OpenCV functions findEssentialMat, recoverPose, and triangulatePoints in succession.

Here is my code currently:

# main.py

import os

import numpy as np

import cv2

from typing import List

from termcolor import colored

import plotly.graph_objects as PlotlyGraphObjects

# load left and right stereo images from Argoverse 1 train data,

# trip ID 3d20ae25-5b29-320d-8bae-f03e9dc177b9

IMAGE_1_LOC = os.path.join(os.getcwd(), 'stereo_front_left_315975023072863736.jpg')

IMAGE_2_LOC = os.path.join(os.getcwd(), 'stereo_front_right_315975023072863488.jpg')

LIDAR_POINTS_FILE_LOC = os.path.join(os.getcwd(), 'PC_315975023020283000.npy')

SHOW_PLOTLY_MOUSEOVERS = False

def main():

print('\n' + 'starting . . .' + '\n')

# suppress numpy printing in scientific notation

np.set_printoptions(suppress=True)

# # pretty print the calibration data for the Argoverse trip ID mentioned above

# with open(os.path.join(os.getcwd(), 'vehicle_calibration_info.json'), 'r') as f:

# calib_data = json.load(f)

# # end with

# print('\n' + 'calib_data: ')

# print(json.dumps(calib_data, indent=4))

# read in the 2 stereo images

image_1 = cv2.imread(IMAGE_1_LOC)

image_2 = cv2.imread(IMAGE_2_LOC)

# get the heights and widths

image_1_height, image_1_width = image_1.shape[:2]

image_2_height, image_2_width = image_2.shape[:2]

# verify the image heights and widths are equal

assert image_1_height == image_2_height

assert image_1_width == image_2_width

image_height = image_1_height

image_width = image_1_width

# verify the heights and widths are non-zero

assert image_height > 0

assert image_width > 0

matching_kps_1, matching_kps_2 = find_matching_keypoints(image_1, image_2)

# matching_kps_1: np.ndarray, shape=(n, 2), np.float64

# matching_kps_2: np.ndarray, shape=(n, 2), np.float64

assert len(matching_kps_1) == len(matching_kps_2)

print('\n' + 'length of matching keypoints = ' + str(len(matching_kps_1)))

# draw keypoints on images and show

image_1_with_kps = image_1.copy()

for kp in matching_kps_1:

center = (int(kp[0]), int(kp[1]))

cv2.circle(image_1_with_kps, center=center, radius=5, color=(255.0, 0.0, 255.0), thickness=-1)

# end for

image_2_with_kps = image_2.copy()

for kp in matching_kps_2:

center = (int(kp[0]), int(kp[1]))

cv2.circle(image_2_with_kps, center=center, radius=5, color=(255.0, 0.0, 255.0), thickness=-1)

# end for

cv2.imshow('image_1_with_kps', image_1_with_kps)

cv2.imshow('image_2_with_kps', image_2_with_kps)

# from the Argoverse vehicle_calibration_info.json file

focal_len = 2360

# Formula for intrinsic camera matrix, often known as K:

# fx => focal length, x dimension

# fy => focal length, y dimension

# s => axis skew

# x0 => principal point offset x

# y0 => principal point offset y

# -- --

# | fx s x0 |

# K = | 0 fy y0 |

# | 0 0 1 |

# -- --

# For more explanation see http://ksimek.github.io/2013/08/13/intrinsic/

# We can make some assumptions for the benefit of simplification.

# Supposing the camera is at least reasonably well made, fx == fy == focal length,

# and also s is close enough to zero that using 0 in practice will usually be ok.

# x0 is 1/2 the width and y0 is 1/2 the height

intrin_cam_mtx = np.array([[focal_len, 0 , image_width // 2 ],

[ 0 , focal_len, image_height // 2],

[ 0 , 0 , 1 ]], dtype=np.float32)

# calculate essential matrix

# to set up for dot product, transpose matching keypoints and add a row of 1s (homogeneous coordinates)

matching_kps_1 = matching_kps_1.T

row_of_1s_1 = np.ones(shape=matching_kps_1.shape[1], dtype=np.float32)

matching_kps_1 = np.vstack((matching_kps_1, row_of_1s_1), dtype=np.float32)

# (3, n)

matching_kps_2 = matching_kps_2.T

row_of_1s_2 = np.ones(shape=matching_kps_2.shape[1], dtype=np.float32)

matching_kps_2 = np.vstack((matching_kps_2, row_of_1s_2), dtype=np.float32)

# (3, n)

# normalize the matching keypoints

matching_kps_1_norm = np.dot(np.linalg.inv(intrin_cam_mtx), matching_kps_1)

matching_kps_2_norm = np.dot(np.linalg.inv(intrin_cam_mtx), matching_kps_2)

# return normalized matching keypoints to (n, 2) shape

matching_kps_1_norm = matching_kps_1_norm[0:2, :].T

matching_kps_2_norm = matching_kps_2_norm[0:2, :].T

# for the next steps ref:

# https://stackoverflow.com/questions/33906111/how-do-i-estimate-positions-of-two-cameras-in-opencv

essential_mtx, mask = cv2.findEssentialMat(matching_kps_1_norm, matching_kps_2_norm, intrin_cam_mtx)

print('\n' + 'essential_mtx: ')

print(essential_mtx)

# make the camera 1 extrinsic matrix (eye), i.e. use cam 1 as the reference)

cam_1_extrin_mtx = np.eye(4, dtype=np.float32)

print('\n' + 'cam_1_extrin_mtx: ')

print(cam_1_extrin_mtx)

# use the essential matrix and matching keypoints to get the cam 2 rotation and translation matrices

retval, cam_2_rot_mtx, cam_2_transpose_mtx, mask = cv2.recoverPose(essential_mtx, matching_kps_1_norm, matching_kps_2_norm)

print('\n' + 'cam_2_rot_mtx: ')

print(cam_2_rot_mtx)

print('\n' + 'cam_2_transpose_mtx: ')

print(cam_2_transpose_mtx)

# now we can make the camera 2 extrinsic matrix

cam_2_extrin_mtx = np.eye(4)

cam_2_extrin_mtx[0:3, 0:3] = cam_2_rot_mtx

cam_2_extrin_mtx[0:3, 3:4] = cam_2_transpose_mtx

print('\n' + 'cam_2_extrin_mtx: ')

print(cam_2_extrin_mtx)

# dot product the intrinsic cam mtx with each camera's extrinsic cam mtx to get each camera's projection mtx

cam_1_proj_mtx = np.dot(intrin_cam_mtx, cam_1_extrin_mtx[0:3, :])

cam_2_proj_mtx = np.dot(intrin_cam_mtx, cam_2_extrin_mtx[0:3, :])

# for matching keypoints, remove the extra row of 1s, and transpose (2, n) -> (n, 2)

matching_kps_1 = matching_kps_1[0:2, :].T

matching_kps_2 = matching_kps_2[0:2, :].T

# finally we can triangulate points, OpenCV returns the points in 4d homogeneous coordinates form

points_4d_hom = cv2.triangulatePoints(cam_1_proj_mtx, cam_2_proj_mtx,

np.expand_dims(matching_kps_1_norm, axis=1),

np.expand_dims(matching_kps_2_norm, axis=1)).T

print('\n' + 'points_4d_hom: ')

print(points_4d_hom.shape)

print(points_4d_hom.dtype)

print(points_4d_hom)

# in each row, divide all row values by the 4th column (the homogeneous value)

# this will give make each row (x, y, z, 1.0)

points_4d_hom /= points_4d_hom[:, 3:]

# now remove the 4th column since it's always 1.0 now

points_3d = points_4d_hom[:, 0:3]

print('\n' + 'points_3d: ')

print(points_3d.shape)

print(points_3d.dtype)

print(points_3d)

# throw out points that are way out of range

points_3d = points_3d[(points_3d[:, 0] > -200) & (points_3d[:, 0] < 200) & \

(points_3d[:, 1] > -200) & (points_3d[:, 1] < 200) & \

(points_3d[:, 2] > -10) & (points_3d[:, 2] < 50)]

print('\n' + 'points_3d: ')

print(points_3d.shape)

print(points_3d.dtype)

print(points_3d)

### load lidar points for comparison #####################################$

lidarPoints = np.load(LIDAR_POINTS_FILE_LOC)

### visualize 3d reconstructed points vs lidar points #####################

s3dLidarPoints = PlotlyGraphObjects.Scatter3d(x=lidarPoints[:, 0], y=lidarPoints[:, 1], z=lidarPoints[:, 2],

mode='markers', marker={'size': 1, 'color': 'rgb(0,0,255)'})

if len(points_3d) > 0:

s3dReconPoints = PlotlyGraphObjects.Scatter3d(x=points_3d[:, 0], y=points_3d[:, 1], z=points_3d[:, 2],

mode='markers', marker={'size': 3, 'color': 'rgb(255,0,0)'})

# make a plotly Figure object

if len(points_3d) > 0:

plotlyFig = PlotlyGraphObjects.Figure(data=[s3dLidarPoints, s3dReconPoints])

else:

plotlyFig = PlotlyGraphObjects.Figure(data=[s3dLidarPoints])

# end if

plotlyFig.update_layout(scene_aspectmode='data')

if not SHOW_PLOTLY_MOUSEOVERS:

plotlyFig.update_layout(hovermode=False)

plotlyFig.update_layout(scene=dict(xaxis_showspikes=False,

yaxis_showspikes=False,

zaxis_showspikes=False))

# end if

# show the plotly Figure object

plotlyFig.show()

# hold OpenCV windows open

cv2.waitKey()

print('\n' + 'done !!' + '\n')

# end function

def find_matching_keypoints(image_1, image_2):

open_cv_kps_1, kps_2d_1, descriptors_1 = extract_features(image_1)

open_cv_kps_2, kps_2d_2, descriptors_2 = extract_features(image_2)

bf_matcher = cv2.BFMatcher(cv2.NORM_HAMMING)

matches = bf_matcher.knnMatch(descriptors_1, descriptors_2, k=2)

# matches is a tuple of tuples of type DMatch, ex:

# ((<DMatch 0x7ff9b84a52f0>, <DMatch 0x7ff9b84a5030>),

# (<DMatch 0x7ff9b84a52b0>, <DMatch 0x7ff9b84a53b0>),

# .

# .

# (<DMatch 0x7ff9b84a5530>, <DMatch 0x7ff9b84a5550>))

open_cv_matching_kps_1 = []

open_cv_matching_kps_2 = []

matching_kps_2d_1 = []

matching_kps_2d_2 = []

matching_kp_idxs_1 = []

matching_kp_idxs_2 = []

# these are to make this function more efficient and do not need to be returned

matching_kp_idxs_1_set = set()

matching_kp_idxs_2_set = set()

d_matches_1 = []

d_matches_2 = []

# ratio test

for d_match_1, d_match_2 in matches:

if d_match_1.distance < 0.75 * d_match_2.distance:

open_cv_kp_1 = open_cv_kps_1[d_match_1.queryIdx]

open_cv_kp_2 = open_cv_kps_2[d_match_1.trainIdx]

kp_2d_1 = kps_2d_1[d_match_1.queryIdx]

kp_2d_2 = kps_2d_2[d_match_1.trainIdx]

if d_match_1.distance < 32:

if d_match_1.queryIdx not in matching_kp_idxs_1_set and d_match_1.trainIdx not in matching_kp_idxs_2_set:

# we found a match

matching_kp_idxs_1.append(d_match_1.queryIdx)

matching_kp_idxs_2.append(d_match_1.trainIdx)

matching_kp_idxs_1_set.add(d_match_1.queryIdx)

matching_kp_idxs_2_set.add(d_match_1.trainIdx)

open_cv_matching_kps_1.append(open_cv_kp_1)

open_cv_matching_kps_2.append(open_cv_kp_2)

matching_kps_2d_1.append(kp_2d_1)

matching_kps_2d_2.append(kp_2d_2)

d_matches_1.append(d_match_1)

d_matches_2.append(d_match_2)

# end if

# end if

# end if

# end for

# throw error if there are duplicates in either list

assert len(set(matching_kp_idxs_1)) == len(matching_kp_idxs_1)

assert len(set(matching_kp_idxs_2)) == len(matching_kp_idxs_1)

assert len(matching_kps_2d_1) >= 8

matching_kps2d_1 = np.array(matching_kps_2d_1, dtype=np.float64)

matching_kps2d_2 = np.array(matching_kps_2d_2, dtype=np.float64)

return matching_kps2d_1, matching_kps2d_2

# end function

def extract_features(image: np.ndarray):

orb = cv2.ORB_create()

pts: np.ndarray = cv2.goodFeaturesToTrack(np.mean(image, axis=2).astype(np.uint8),

3000, qualityLevel=0.01, minDistance=7)

# (n, 1, 2)

open_cv_kps: List[cv2.KeyPoint] = []

for pt in pts:

keypoint = cv2.KeyPoint(x=pt[0][0], y=pt[0][1], size=20)

open_cv_kps.append(keypoint)

# end for

open_cv_kps, descriptors = orb.compute(image, open_cv_kps)

kp_list = []

for kp in open_cv_kps:

kp_list.append((kp.pt[0], kp.pt[1]))

# end for

kp_np_arr = np.array(kp_list)

return open_cv_kps, kp_np_arr, descriptors

# end function

if __name__ == '__main__':

main()

If anybody wants to run this to verify the results, all the files are shared on a directory on my Google Drive:

https://drive.google.com/drive/folders/1uFX8_LFdi8jN0zIPpmwFBtKrb7ApkENk?usp=sharing

The files are:

main.py (above code)

PC_315975023020283000.npy (NumPy version of the point cloud from the lidar captured at the same time as the images)

stereo_front_left_315975023072863736.jpg (left image)

stereo_front_right_315975023072863488.jpg (right image)

vehicle_calibration_info.json (cal info for the vehicle sensors, including the intrinsic and extrinsic camera matrices)

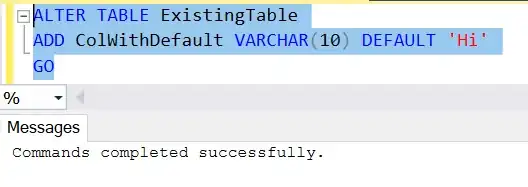

Here are the matching keypoints on the 2 images:

There are ~400 matching keypoints and they seem pretty good to me. I had to scale down this image to make the Stack Overflow size limit, but if you run the program you'll see the keypoints on the full size images.

Here is a 3D visualization in Plotly showing the point cloud captured from the lidar (blue) and the 3D reconstructed points (red). As you can the there are very few 3D reconstructed points and they are way above the ground:

If you run the program this visualization will appear in your browser courtesy of Plotly.

Here is the terminal output currently:

starting . . .

length of matching keypoints = 405

essential_mtx:

[[ 0.08616509 -0.59005992 -0.21282769]

[-0.59909863 0.13506316 -0.24201413]

[-0.21501855 -0.26115559 -0.2212283 ]]

cam_1_extrin_mtx:

[[1. 0. 0. 0.]

[0. 1. 0. 0.]

[0. 0. 1. 0.]

[0. 0. 0. 1.]]

cam_2_rot_mtx:

[[ 0.61803748 -0.1853135 0.76399514]

[-0.18531167 -0.97877629 -0.08750174]

[ 0.76399559 -0.08749786 -0.63926119]]

cam_2_transpose_mtx:

[[-0.44521735]

[-0.35851899]

[ 0.82051547]]

cam_2_extrin_mtx:

[[ 0.61803748 -0.1853135 0.76399514 -0.44521735]

[-0.18531167 -0.97877629 -0.08750174 -0.35851899]

[ 0.76399559 -0.08749786 -0.63926119 0.82051547]

[ 0. 0. 0. 1. ]]

points_4d_hom:

(405, 4)

float32

[[ 0.43175203 0.3602345 -0.82691205 0.00614425]

[ 0.43174988 0.3602345 -0.8269171 0.00559337]

[ 0.43174294 0.36027348 -0.82690895 0.00475809]

...

[ 0.43173385 0.3602081 -0.82695377 0.00186889]

[ 0.4317098 0.360238 -0.82695407 -0.0014882 ]

[ 0.43171775 0.36024278 -0.8269491 -0.00027003]]

points_3d:

(405, 3)

float32

[[ 70.26933 58.629574 -134.58318 ]

[ 77.18953 64.4038 -147.83871 ]

[ 90.73868 75.71806 -173.79004 ]

...

[ 231.01048 192.73875 -442.48322 ]

[ -290.0891 -242.06335 555.6751 ]

[-1598.7802 -1334.0869 3062.4402 ]]

points_3d:

(24, 3)

float32

[[-20.482553 -17.09517 39.251465]

[-23.504696 -19.61653 45.040474]

[-22.470123 -18.753183 43.05873 ]

[-22.743721 -18.982162 43.582657]

[-19.973356 -16.67244 38.274963]

[-23.053265 -19.239862 44.175686]

[-25.736473 -21.479134 49.31516 ]

[-21.752113 -18.154146 41.683395]

[-25.7492 -21.489681 49.33955 ]

[-20.714745 -17.290146 39.69537 ]

[-19.971823 -16.670094 38.272877]

[-22.189672 -18.518356 42.521767]

[-20.730484 -17.303225 39.725533]

[-23.032377 -19.223827 44.13475 ]

[-21.316837 -17.797058 40.847626]

[-22.140318 -18.47954 42.426025]

[-21.923832 -18.297747 42.012283]

[-23.047565 -19.235023 44.164856]

[-20.289022 -16.93954 38.87886 ]

[-24.983194 -20.85096 47.872143]

[-21.97033 -18.336681 42.10128 ]

[-22.640509 -18.894253 43.385387]

[-22.261568 -18.5793 42.659206]

[-22.186766 -18.518261 42.51349 ]]

done !!

I've been over the documentation of findEssentialMat, recoverPose, and triangulatePoints and all the examples I can find and as far as I can tell I'm doing everything right.

Any suggestions as to how to improve performance or what to check next?