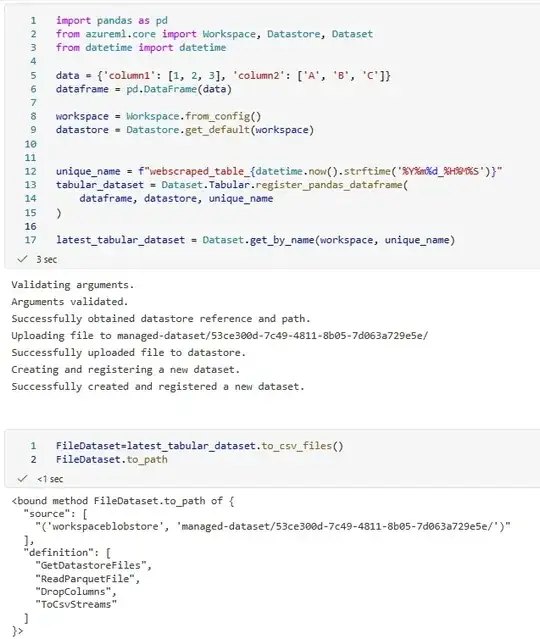

I've written a webscraping script to extract a web table in a dataframe format which I have converted into a TabularDataset using Dataset.Tabular.register_pandas_dataframe() to store in the default datastore.

I want to pass this webscraped table as a side_input to ParallelRunStep() in a batch inferencing pipeline but in order to achieve that, the side_input should be in FileDataset type.

The established way that has worked so far to convert TabularDataset into FileDataset was using

side_input = Dataset.File.from_files(path="/path/to/file/on/datastore")

The above method worked for uploaded csv files in the datastore. But thing with registering a pandas dataframe in the datastore is that with each run of the webscraping script occurs a registration of pandas dataframe in the datastore to convert it into a TabularDataset and the relative path in the datastore changes.

Hardcoding the relative path works but since the web table data changes periodically, I want the latest data from the web table to be used aas a side_input

My questions:

- how to convert pandas dataframe into

FileDataseteg: to_FileDataset if it even exists? - how to convert

TabularDatsetintoFileDataset? - if there is any way to find the relative path of the registered tabular dataset in the datastore using azureml python sdk v2?

side note: the metadata of tabular dataset shows a json info something like this

>>> tabular_ds

{

"source": [("default_datastore", "relative/path/to/the/tabular/dataset")],

.

.

.

}

I was wondering if I can extract the source key from this like I can extract the ws.name after initializing ws = Workspace.from_config() . Just thinking out loud.