As can be seen from the doc, the function kmodes() expects categorical data.

Let's try to understand from the following data sample, what SW (within cluster variance) may mean in this case.

Since simple Euclidean or Manhattan distance don't work for categorical variables (at least not when they are one-hot-encoded), the klaR implementation uses simple matching distance to find SW.

Let's start with the following dataset:

set.seed(1)

x <- rbind(matrix(rbinom(100, 2, 0.25), ncol = 2),

matrix(rbinom(100, 2, 0.75), ncol = 2))

colnames(x) <- c("a", "b")

head(x)

# a b

# [1,] 0 0

# [2,] 0 1

# [3,] 1 0

# [4,] 1 0

# [5,] 0 0

# [6,] 1 0

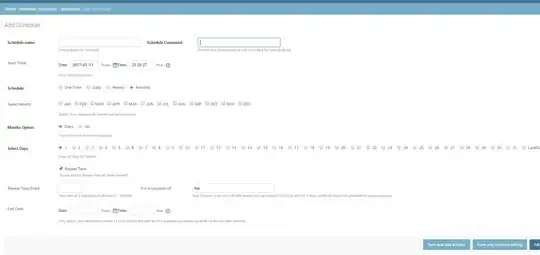

plot(jitter(x), col = cl$cluster, pch=19, main=paste(k, 'clusters'))

This dataset can be thought of as a categorical dataset, since each column contains one of three values (categories) 0,1,2, hence represents categorical variable.

As can be seen from above, this toy dataset clearly contains 9 clusters. So let's try to create 9 clusters with kmodes algorithm:

k <- 9

(cl <- kmodes(x, k))

# K-modes clustering with 9 clusters of sizes 18, 12, 8, 1, 11, 14, 18, 15, 3

# Cluster modes:

# a b

#1 0 0

#2 1 0

#3 0 1

#4 2 0

#5 1 2

#6 2 2

#7 1 1

#8 2 1

#9 0 2

# Clustering vector:

# 1 3 2 2 1 2 4 2 7 1 3 1 2 1 7 1 2 8 1 7 2 3 2 1 1 3 3 1 7 9 1 7 1 1 7 2 7 1

# 2 1 2 2 7 3 3 7 1 1 7 7 7 6 6 9 5 6 6 6 5 5 9 7 6 8 8 6 5 6 8 5 3 8 8 6 5

# 8 8 8 8 7 5 6 8 5 7 5 8 8 1 6 7 5 6 8 5 8 6 7 6 7

# Within cluster simple-matching distance by cluster:

# [1] 0 0 0 0 0 0 0 0 0

plot(jitter(x), col = cl$cluster, pch=19, main=paste(k, 'clusters'))

As can be seen from above, this produces perfect clustering, since Within cluster simple-matching distance for all the clusters are 0.

Now, let's try with k=5

k <- 5

(cl <- kmodes(x, k))

# K-modes clustering with 5 clusters of sizes 24, 17, 27, 17, 15

# Cluster modes:

# a b

#1 0 0

#2 1 0

#3 1 1

#4 2 1

#5 2 2

# Clustering vector:

# 1 3 2 2 1 2 4 2 3 1 1 1 2 1 3 1 2 4 1 3 2 4 2 1 1 3 1 1 3 1 1 3 1 1 3 2 3 1 2

# 1 2 2 3 3 1 3 1 1 3 3 3 5 5 1 3 5 5 5 2 3 5 3 5 4 4 5 3 5 4 3 1 4 4 5 2

# 4 4 4 4 3 3 5 4 2 3 2 4 4 1 5 3 3 5 4 2 4 5 3 5 3

# Within cluster simple-matching distance by cluster:

# 6 5 9 2 1

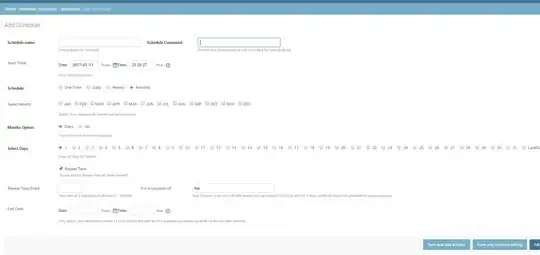

plot(jitter(x), col = cl$cluster, pch=19, main=paste(k, 'clusters'))

Notice the Within cluster simple-matching distance this time. Now let's find the points that are grouped into cluster 3, e.g., with the corresponding mode for the cluster 3 which is (1,1).

x[cl$cluster == 3,]

a b

[1,] 0 1 # mismatch in 1 position with (1,1)

[2,] 1 1

[3,] 1 1

[4,] 1 1

[5,] 0 1 # mismatch in 1 position with (1,1)

[6,] 1 1

[7,] 1 1

[8,] 1 1

[9,] 1 1

[10,] 1 1

[11,] 0 1 # mismatch in 1 position with (1,1)

[12,] 1 1

[13,] 1 1

[14,] 1 1

[15,] 1 1

[16,] 1 2 # mismatch in 1 position with (1,1)

[17,] 1 2 # mismatch in 1 position with (1,1)

[18,] 1 1

[19,] 1 2 # mismatch in 1 position with (1,1)

[20,] 1 2 # mismatch in 1 position with (1,1)

[21,] 1 1

[22,] 1 2 # mismatch in 1 position with (1,1)

[23,] 1 1

[24,] 1 1

[25,] 1 2 # mismatch in 1 position with (1,1)

[26,] 1 1

[27,] 1 1

Hence, there is a mismatch in 9 positions for cluster 3 that is also reported in Within cluster simple-matching distance above, this can be thought of SW.

The sum of SW. i.e., SSW for all such intra-cluster distances can be computed by summing these numbers across all the clusters. As we increase the number of clusters, SSW is supposed to decrease. This SSW itself can be used as a cluster validation metric.

For example, let's try to plot how SSW varies with cluster number k:

num_clusters <- 2:9

SSW <- c()

for (k in num_clusters) {

cl <- kmodes(x, k)

SSW <- c(SSW, sum(cl$withindiff))

}

plot(num_clusters, SSW, pch=19, col='red', main='SW vs. #clusters')

lines(num_clusters, SSW)

We can compute the between class variance SSB in similar manner and compute the ratio.

An alternative approach could be to use one-hot-encoding (OHE) for the categorcial values and use Euclidian distance to compute SSW and SSB, using the formulae from here and compute SSW/SSB for validation.