For a 2-node network, I've written an agent named "sensor" which sends a datagram request from node A to node B continuously for 5 minutes, with a delay of 10 seconds between datagrams. After the simulation, I am trying to plot the RTT of each datagram from the generated trace.json file.

    import json as js
    import matplotlib.pyplot as plt

    f = open('trace1.json',"r")
    data = f.read()
    obj = js.loads(data)
    list1 = obj['events']
    flag = False
    bias = 0

    for j in range(len(list1)):
        eventobj = list1[j]
        list2 = eventobj['events']
        Dict = {}
        timeList = []
        T = 0
        R = 0
        for i in range(len(list2)):
            if(flag == False):
                bias = list2[0].get('time')
            st = list2[i].get("stimulus")
            if(st['sender'] == 'phy' and st['recipient'] == 'phy'):
                if st['messageID'] in Dict:
                    if "response" in list2[i]:
                        res = list2[i].get("response")
                        if(res['clazz'] != 'org.arl.unet.phy.BadFrameNtf' and res['clazz'] == 'org.arl.unet.phy.RxFrameNtf'):
                            time1 = Dict[st['messageID']]
                            time2 = list2[i].get("time")
                            diff = abs(time1 - time2)
                            timeList.append([(time1 - bias)/1000,diff/1000])
                            R = R+1
                else:
                    if "response" in list2[i]:
                        res = list2[i].get("response")
                        if (res['clazz'] == 'org.arl.unet.phy.TxFrameNtf' and st['clazz'] == 'org.arl.unet.sim.HalfDuplexModem$TX'):
                            Dict[st['messageID']]=(list2[i].get("time"))
                            T = T+1

    print(timeList)

    x = []
    y = []
    for i in timeList:
        x.append(i[0])
        y.append(i[1])

    plt.plot(x,y)
    plt.xlim(0,400)
    plt.ylim(0.8,1.2)
    plt.xlabel('Timeline in seconds')
    plt.ylabel('delay in seconds')
    plt.show()

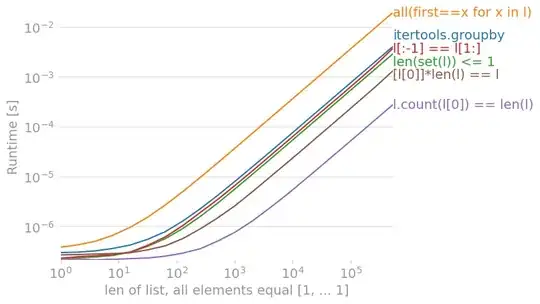

And the graph looks like this:

Looking at the graph, I am not able to understand why, in a simple 2-node network, the delay varies like this for every alternate datagram. And why is the delay lower for every alternate datagram?
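
To put a number on the alternating pattern, one option is to split the `[time, delay]` pairs into even- and odd-indexed samples and compare their means. This is only a minimal sketch: `split_alternating` is a hypothetical helper that is not part of my script, and the values in `example` are made up for illustration, not taken from the trace.

```python
from statistics import mean

def split_alternating(samples):
    """Split [time, delay] pairs into even- and odd-indexed delay lists."""
    delays = [delay for _, delay in samples]
    return delays[0::2], delays[1::2]

# Illustrative values only (assumption), not read from trace1.json.
example = [[0.0, 1.10], [10.0, 0.90], [20.0, 1.10], [30.0, 0.90]]

even, odd = split_alternating(example)
gap = mean(even) - mean(odd)
print(f"even mean={mean(even):.3f} s, odd mean={mean(odd):.3f} s, gap={gap:.3f} s")
```

Passing `timeList` from the script instead of `example` would show how large the gap between alternate datagrams actually is.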