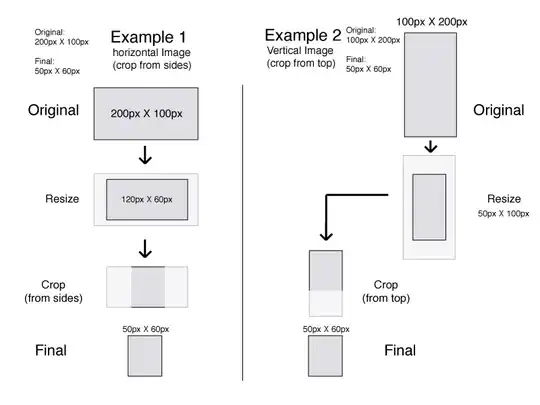

I have the following sample image:

where I am trying to locate the coords of the four corners of the inner scanned map image like:

i have tried with something like this:

import cv2

import numpy as np

# Load the image

image = cv2.imread('sample.jpg')

# Convert to grayscale

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Apply Gaussian blur

blurred = cv2.GaussianBlur(gray, (5, 5), 0)

# Perform edge detection

edges = cv2.Canny(blurred, 50, 150)

# Find contours in the edge-detected image

contours, _ = cv2.findContours(edges.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

# Iterate through the contours and filter for squares or rectangles for contour in contours:

perimeter = cv2.arcLength(contour, True)

approx = cv2.approxPolyDP(contour, 0.04 * perimeter, True)

if len(approx) == 4:

x, y, w, h = cv2.boundingRect(approx)

aspect_ratio = float(w) / h

# Adjust this threshold as needed

if aspect_ratio >= 0.9 and aspect_ratio <= 1.1:

cv2.drawContours(image, [approx], 0, (0, 255, 0), 2)

# Display the image with detected squares/rectangles

cv2.imwrite('detectedy.png', image)

but all i get is something like this:

UPDATE:

so i have found this code that should do what i require:

import cv2

import numpy as np

from subprocess import call

file = "samplebad.jpg"

img = cv2.imread(file)

orig = img.copy()

# sharpen the image (weighted subtract gaussian blur from original)

'''

https://stackoverflow.com/questions/4993082/how-to-sharpen-an-image-in-opencv

larger smoothing kernel = more smoothing

'''

blur = cv2.GaussianBlur(img, (9,9), 0)

sharp = cv2.addWeighted(img, 1.5, blur, -0.5, 0)

# convert the image to grayscale

# gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

gray = cv2.cvtColor(sharp, cv2.COLOR_BGR2GRAY)

# smooth whilst keeping edges sharp

'''

(11) Filter size: Large filters (d > 5) are very slow, so it is recommended to use d=5 for real-time applications, and perhaps d=9 for offline applications that need heavy noise filtering.

(17, 17) Sigma values: For simplicity, you can set the 2 sigma values to be the same. If they are small (< 10), the filter will not have much effect, whereas if they are large (> 150), they will have a very strong effect, making the image look "cartoonish".

These values give the best results based upon the sample images

'''

gray = cv2.bilateralFilter(gray, 11, 17, 17)

# detect edges

'''

(100, 200) Any edges with intensity gradient more than maxVal are sure to be edges and those below minVal are sure to be non-edges, so discarded. Those who lie between these two thresholds are classified edges or non-edges based on their connectivity. If they are connected to "sure-edge" pixels, they are considered to be part of edges.

'''

edged = cv2.Canny(gray, 100, 200, apertureSize=3, L2gradient=True)

cv2.imwrite('./edges.jpg', edged)

# dilate edges to make them more prominent

kernel = np.ones((3,3),np.uint8)

edged = cv2.dilate(edged, kernel, iterations=1)

cv2.imwrite('edges2.jpg', edged)

# find contours in the edged image, keep only the largest ones, and initialize our screen contour

cnts, hierarchy = cv2.findContours(edged.copy(), cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

cnts = sorted(cnts, key=cv2.contourArea, reverse=True)[:10]

screenCnt = None

# loop over our contours

for c in cnts:

# approximate the contour

peri = cv2.arcLength(c, True)

approx = cv2.approxPolyDP(c, 0.02 * peri, True)

# if our approximated contour has four points, then we can assume that we have found our screen

if len(approx) > 0:

screenCnt = approx

print(screenCnt)

cv2.drawContours(img, [screenCnt], -1, (0, 255, 0), 10)

cv2.imwrite('contours.jpg', img)

break

# reshaping contour and initialise output rectangle in top-left, top-right, bottom-right and bottom-left order

pts = screenCnt.reshape(4, 2)

rect = np.zeros((4, 2), dtype = "float32")

# the top-left point has the smallest sum whereas the bottom-right has the largest sum

s = pts.sum(axis = 1)

rect[0] = pts[np.argmin(s)]

rect[2] = pts[np.argmax(s)]

# the top-right will have the minumum difference and the bottom-left will have the maximum difference

diff = np.diff(pts, axis = 1)

rect[1] = pts[np.argmin(diff)]

rect[3] = pts[np.argmax(diff)]

# compute the width and height of our new image

(tl, tr, br, bl) = rect

widthA = np.sqrt(((br[0] - bl[0]) ** 2) + ((br[1] - bl[1]) ** 2))

widthB = np.sqrt(((tr[0] - tl[0]) ** 2) + ((tr[1] - tl[1]) ** 2))

heightA = np.sqrt(((tr[0] - br[0]) ** 2) + ((tr[1] - br[1]) ** 2))

heightB = np.sqrt(((tl[0] - bl[0]) ** 2) + ((tl[1] - bl[1]) ** 2))

# take the maximum of the width and height values to reach our final dimensions

maxWidth = max(int(widthA), int(widthB))

maxHeight = max(int(heightA), int(heightB))

# construct our destination points which will be used to map the screen to a top-down, "birds eye" view

dst = np.array([

[0, 0],

[maxWidth - 1, 0],

[maxWidth - 1, maxHeight - 1],

[0, maxHeight - 1]], dtype = "float32")

# calculate the perspective transform matrix and warp the perspective to grab the screen

M = cv2.getPerspectiveTransform(rect, dst)

warp = cv2.warpPerspective(orig, M, (maxWidth, maxHeight))

# cv2.imwrite('./cvCropped/frame/' + file, warp)

# crop border off (85px is empirical)

# cropBuffer = 85 # this is for the old (phone) images

cropBuffer = 105 # this is for those taken by Nick

height, width = warp.shape[:2]

cropped = warp[cropBuffer:height-cropBuffer, cropBuffer:width-cropBuffer]

# output the result

cv2.imwrite('cropped.jpg', cropped)

but because these old scanned maps have a fold in them it fails and only detects one side like:

is there a way to somehow get opencv to ignore the center region?