I've come across a website that seems to resist being read via HTTP GET from C#, but works fine in Firefox, Postman and PowerShell, and I would really like to understand why.

Here is the repro case in PowerShell:

```powershell
Invoke-WebRequest -UseBasicParsing -Uri https://www.tripadvisor.de/
```

This call works: it gets a 200 and PowerShell dumps the result.

When I do the same in C#, the request hangs until it times out; I never get a response.

Here is the C# code. It is not complicated:

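```csharp
using System.Net.Http;

using var client = new HttpClient();

// Hangs here until HttpClient.Timeout (100 s by default) expires
var r = await client.GetAsync("https://www.tripadvisor.de/");
r.EnsureSuccessStatusCode();
```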

In a .NET 6.0 application with top-level statements, this is the entire program. I've also tested it in a .NET Framework 4.8 console app (with an `async Task Main`, of course): same behaviour.
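To tell a genuine server-side stall apart from a client-side deadlock, it can help to make the wait fail fast. The sketch below is a hypothetical helper (`ProbeAsync` is my own name, not part of the repro), written as a .NET 6 top-level snippet: it sets a short `HttpClient.Timeout` instead of the 100-second default and reports either the status code or `"timed out"`.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical diagnostic helper: GET `url` with a short client-side timeout so a
// server that never answers surfaces quickly instead of after the 100 s default.
async Task<string> ProbeAsync(string url, TimeSpan timeout)
{
    using var client = new HttpClient { Timeout = timeout };
    try
    {
        using var response = await client.GetAsync(url);
        return $"HTTP {(int)response.StatusCode}";
    }
    catch (TaskCanceledException)
    {
        // No CancellationToken is passed in, so a cancellation here can only
        // come from HttpClient.Timeout expiring.
        return "timed out";
    }
}
```

For example, `Console.WriteLine(await ProbeAsync("https://www.tripadvisor.de/", TimeSpan.FromSeconds(15)));` should print `timed out` if the hang reproduces, and an `HTTP` status line otherwise.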

Tested on Windows 11 and Windows 10.

Can somebody reproduce this behaviour?

P.S.: I am sure this is not an async/await or deadlock issue, because plenty of other sites work correctly.

**Edit:** My working hypothesis is that the server simply ignores these requests based on some metric, because it assumes they come from an invalid client (non-browser, scraper, flooder).

What I have also tried

I've tried to make the C# code work by passing an `HttpClientHandler` restricted to TLS 1.2:

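```csharp
using System.Net.Http;
using System.Security.Authentication;

// Restrict the handshake to TLS 1.2
using var handler = new HttpClientHandler() { SslProtocols = SslProtocols.Tls12 };
var client = new HttpClient(handler);
```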

Same result.

I've sent ALL the additional headers that, for example, Firefox sends (User-Agent, Accept, Accept-Encoding, etc.), with no luck.
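For reference, this is roughly how such headers can be attached with `HttpClient`. It is a sketch: `CreateBrowserLikeRequest` and `CreateDecompressingHandler` are hypothetical helpers, and the header values are example strings, not the exact ones Firefox sent. One detail worth checking is `Accept-Encoding`: if it is set by hand, `HttpClient` does not decompress the body, whereas setting `AutomaticDecompression` on the handler makes it send the header and decompress the response itself.

```csharp
using System;
using System.Net;
using System.Net.Http;

// Hypothetical helper: a GET request carrying browser-style headers.
// The values are illustrative, not a capture of a real Firefox session.
HttpRequestMessage CreateBrowserLikeRequest(string url)
{
    var request = new HttpRequestMessage(HttpMethod.Get, url);
    request.Headers.TryAddWithoutValidation("User-Agent",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:100.0) Gecko/20100101 Firefox/100.0");
    request.Headers.TryAddWithoutValidation("Accept",
        "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8");
    request.Headers.TryAddWithoutValidation("Accept-Language", "de,en-US;q=0.7,en;q=0.3");
    return request;
}

// Instead of hand-writing Accept-Encoding (which would leave the body compressed),
// let the handler advertise gzip/deflate/brotli and decompress the response.
HttpClientHandler CreateDecompressingHandler() => new HttpClientHandler
{
    AutomaticDecompression = DecompressionMethods.GZip
                           | DecompressionMethods.Deflate
                           | DecompressionMethods.Brotli
};
```

Usage would be `using var client = new HttpClient(CreateDecompressingHandler());` followed by `await client.SendAsync(CreateBrowserLikeRequest("https://www.tripadvisor.de/"))`.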

I've also added a certificate validation callback to the handler just in case, but `sslPolicyErrors` reported no errors anyway:

```csharp
// Accepts every certificate; only useful for diagnosing TLS issues
handler.ServerCertificateCustomValidationCallback = (message, cert, chain, sslPolicyErrors) => true;
```
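A variant that makes this check visible rather than accepting everything: the hypothetical `CreateLoggingHandler` below passes the `sslPolicyErrors` value from the default validation to a logging delegate and only accepts certificates with `SslPolicyErrors.None`, so a clean log line would confirm that the certificate is not the problem.

```csharp
using System;
using System.Net.Http;
using System.Net.Security;

// Hypothetical helper: log what the default certificate validation reported,
// then accept the certificate only if it passed without errors.
HttpClientHandler CreateLoggingHandler(Action<string> log) => new HttpClientHandler
{
    ServerCertificateCustomValidationCallback = (message, cert, chain, sslPolicyErrors) =>
    {
        log($"{cert?.Subject}: {sslPolicyErrors}");
        return sslPolicyErrors == SslPolicyErrors.None;
    }
};
```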

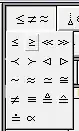

The Parallel Stacks window shows that the code is waiting to receive a full TLS frame (I am just guessing).
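One way to test the TLS guess is to take HTTP out of the picture: open a plain TCP connection, run only the TLS handshake with `SslStream`, and see whether it completes. `ProbeTlsAsync` is a hypothetical helper written for illustration; if it returns promptly with a protocol name (e.g. `Tls12` or `Tls13`), the handshake itself is fine and the stall would happen later, while waiting for the HTTP response.

```csharp
using System;
using System.Net.Security;
using System.Net.Sockets;
using System.Threading.Tasks;

// Hypothetical diagnostic: TCP connect plus TLS handshake only, no HTTP request,
// to check whether the stall happens during TLS or after it.
async Task<string> ProbeTlsAsync(string host, int port = 443)
{
    using var tcp = new TcpClient();
    await tcp.ConnectAsync(host, port);
    using var ssl = new SslStream(tcp.GetStream());
    // Uses the default certificate validation; throws if the handshake fails.
    await ssl.AuthenticateAsClientAsync(host);
    return ssl.SslProtocol.ToString();
}
```

Against the site itself this would be called as `await ProbeTlsAsync("www.tripadvisor.de")`.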

Can somebody test the basic C# code and validate the behaviour? Is this an issue with HttpClient? Am I missing something? Is this server configured in a weird way?

**Update:** The old, deprecated `WebRequest` approach worked for a short time:

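```csharp
using System;
using System.IO;
using System.Net;

// WebRequest is obsolete in .NET 6 (warning SYSLIB0014) but still works
var req = WebRequest.Create("https://www.tripadvisor.de/");
req.Method = "GET";

using var resp = req.GetResponse();
using var reader = new StreamReader(resp.GetResponseStream());
var content = await reader.ReadToEndAsync();

Console.WriteLine(content);
```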

While it returned several 200 responses, it appears that the server later blacklisted me, probably because my requests lacked indicators of a "real" user.

I understand that this is some kind of scraper/flood-control mechanism. What I don't understand is why `Invoke-WebRequest` did not get me blocked.

Note: There is no plain-HTTP version of this page to test against :/

I've also tried `ConfigureAwait(false)`, but as I understand it this cannot be the issue, because other websites work.