I tried to replicate the issue as follows:

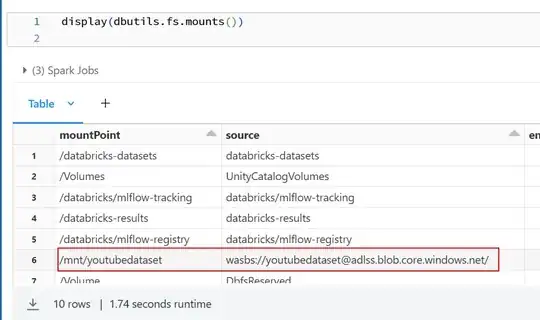

I mounted my ADLS container using the code below:

```python
# Mount the container through the Blob endpoint (wasbs://) using the storage account access key
dbutils.fs.mount(
    source="wasbs://<containerName>@<storageaccountName>.blob.core.windows.net/",
    mount_point="/mnt/<mountName>",
    extra_configs={
        "fs.azure.account.key.<storageaccountName>.blob.core.windows.net": "<Access-Key>"
    },
)
```
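If you mount several containers, it can help to build the `source` URL and the `extra_configs` key from the same account and container names so the two can never disagree. This is a minimal sketch: `build_mount_args` is a hypothetical helper (not part of `dbutils`), and it assumes the same `wasbs://` Blob endpoint and account-key authentication used in the code above.

```python
def build_mount_args(container: str, account: str, access_key: str, mount_name: str) -> dict:
    """Return keyword arguments for dbutils.fs.mount (hypothetical helper).

    Assumes the wasbs:// Blob endpoint and account-key auth, as in the mount code above.
    """
    host = f"{account}.blob.core.windows.net"
    return {
        "source": f"wasbs://{container}@{host}/",
        "mount_point": f"/mnt/{mount_name}",
        # The config key must name the same account as the source URL.
        "extra_configs": {f"fs.azure.account.key.{host}": access_key},
    }


print(build_mount_args("<containerName>", "<storageaccountName>", "<Access-Key>", "<mountName>"))
```

In a notebook you would pass the result straight through, e.g. `dbutils.fs.mount(**build_mount_args(...))`.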

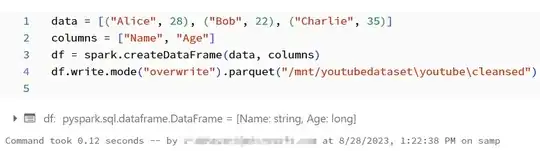

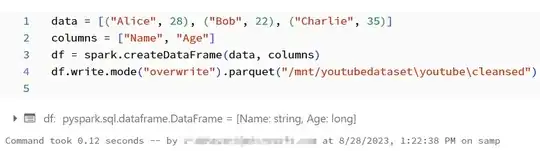

I then tried to write a DataFrame to the ADLS account in Parquet format using the code below:

```python
data = [("Alice", 28), ("Bob", 22), ("Charlie", 35)]
columns = ["Name", "Age"]
df = spark.createDataFrame(data, columns)

# Note the backslashes in the path; this turned out to be the problem
df.write.mode("overwrite").parquet("/mnt/youtubedataset\youtube\cleansed")
```

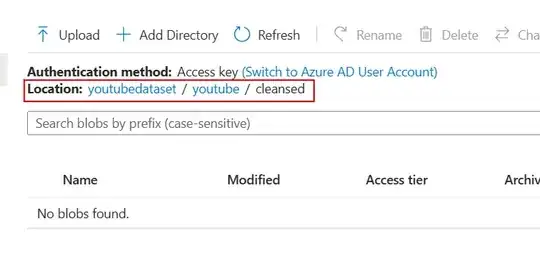

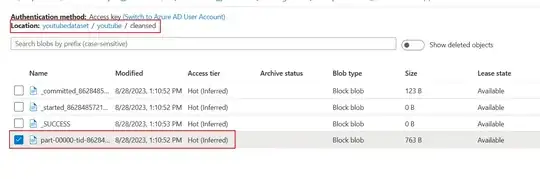

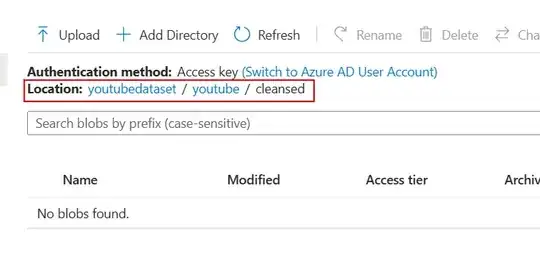

The write completed successfully, as shown in the images above, but when I checked the storage account there were no files in it:

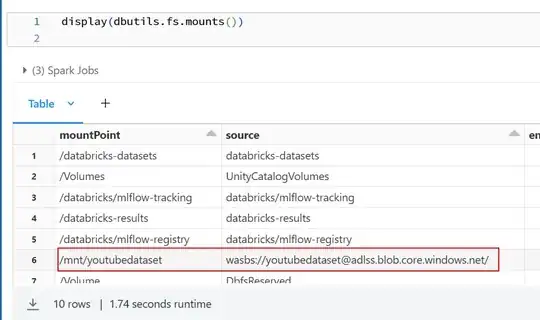

Folders under `dbfs:/mnt/` are not necessarily mounted volumes; they can also be ordinary DBFS folders. So I checked where my mount point is located using `display(dbutils.fs.mounts())`, and it showed that the mount point does map to the storage account.
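To see which mount a given write path actually lands on, you can match it against the mount list on a `/` boundary. This is a minimal sketch: `find_mount` is a hypothetical helper, and it assumes each entry exposes `mountPoint` and `source` fields like the rows `display(dbutils.fs.mounts())` shows. The sample `mounts` list below is made up for illustration; on a cluster you would pass `dbutils.fs.mounts()` instead.

```python
from collections import namedtuple
from typing import Iterable, Optional

# Stand-in for the entries returned by dbutils.fs.mounts(); only the
# mountPoint and source fields are used here.
MountInfo = namedtuple("MountInfo", ["mountPoint", "source"])


def find_mount(path: str, mounts: Iterable[MountInfo]) -> Optional[MountInfo]:
    """Return the most specific mount containing `path`, or None if none does."""
    if path.startswith("dbfs:"):
        path = path[len("dbfs:"):]
    best = None
    for m in mounts:
        mp = m.mountPoint.rstrip("/")
        # Match on a "/" boundary: /mnt/x\y is NOT inside the mount /mnt/x.
        if path == mp or path.startswith(mp + "/"):
            # Prefer the longest (most specific) matching mount point.
            if best is None or len(mp) > len(best.mountPoint.rstrip("/")):
                best = m
    return best


mounts = [
    MountInfo("/", "DatabricksRoot"),
    MountInfo("/mnt/youtubedataset", "wasbs://<containerName>@<storageaccountName>.blob.core.windows.net/"),
]
print(find_mount(r"/mnt/youtubedataset\youtube\cleansed", mounts).source)  # DatabricksRoot
print(find_mount("/mnt/youtubedataset/youtube/cleansed", mounts).source)   # the wasbs:// source
```

With the backslash path, only the root mount matches, which is consistent with the files ending up in DBFS rather than in the storage account.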

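Since the mount itself was fine, the problem was the path. Databricks clusters run on Linux, where a backslash is not a path separator, so `/mnt/youtubedataset\youtube\cleansed` refers to a single folder literally named `youtubedataset\youtube\cleansed` directly under `/mnt/`. That is an ordinary DBFS folder, not part of the mount. I replaced the backslashes in the storage path with forward slashes: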

```python
df.write.mode("overwrite").parquet("/mnt/youtubedataset/youtube/cleansed")
```
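To guard against Windows-style paths slipping into a write, you could normalise backslashes before calling Spark. This is a minimal sketch: `to_dbfs_path` is a hypothetical helper, and it assumes no legitimate file or folder name in your paths contains a backslash.

```python
import warnings


def to_dbfs_path(path: str) -> str:
    """Replace Windows-style backslashes with forward slashes (hypothetical helper)."""
    fixed = path.replace("\\", "/")
    if fixed != path:
        # Warn so the mistake is visible in the notebook output.
        warnings.warn(f"backslashes replaced in path: {path!r} -> {fixed!r}")
    return fixed


# Raw string, so Python keeps the backslashes exactly as typed.
print(to_dbfs_path(r"/mnt/youtubedataset\youtube\cleansed"))
# /mnt/youtubedataset/youtube/cleansed
```

In the notebook this would wrap the path, e.g. `df.write.mode("overwrite").parquet(to_dbfs_path(...))`. Without the `r` prefix, a segment starting with an escape such as `\t` or `\n` would already have been turned into a tab or newline before the helper sees it.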

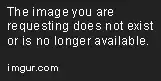

This time the Parquet files were written to the ADLS storage account.

So check once that your mount point actually maps to the storage account, and use forward slashes in the path you write to.
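The check can also be done in code before writing. This is a minimal sketch: `check_mount` is a hypothetical helper, and it assumes the mount entries expose `mountPoint` and `source` (as the rows of `display(dbutils.fs.mounts())` do) and that the source URL has the `<container>@<account>.` form used in the mount code above.

```python
from collections import namedtuple
from typing import Iterable

# Stand-in for the entries returned by dbutils.fs.mounts().
MountInfo = namedtuple("MountInfo", ["mountPoint", "source"])


def check_mount(mount_point: str, account: str, mounts: Iterable[MountInfo]) -> str:
    """Return the source of `mount_point`; raise if it is not mounted from `account`."""
    target = mount_point.rstrip("/")
    for m in mounts:
        if m.mountPoint.rstrip("/") == target:
            # wasbs:// and abfss:// sources both contain "<container>@<account>."
            if f"@{account}." not in m.source:
                raise ValueError(f"{mount_point} is mounted from {m.source}, not {account}")
            return m.source
    raise ValueError(f"{mount_point} is not a mount point; paths under it are plain DBFS folders")


sample = [MountInfo("/mnt/youtubedataset", "wasbs://c@acct.blob.core.windows.net/")]
print(check_mount("/mnt/youtubedataset", "acct", sample))
```

On a cluster you would call it as `check_mount("/mnt/youtubedataset", "<storageaccountName>", dbutils.fs.mounts())` and only write if it does not raise.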