I am writing a program that reads signal values from a file. My problem is performance: the data cannot be read fast enough.

The signal values (most of the time 32-bit floating-point) are stored in a binary file, which has already been loaded into main memory. The basic format is as follows:

1. I have a starting address at which the numbers are stored.
2. There is always more than one block of data; how many depends on who created the file.
3. There is one offset per block (the blocks from item 2).
4. I know the number of elements I have to read.
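The layout above boils down to a single address formula: value `i` sits at `start + i * offset_between_elements + offset_of_entry`. The C sketch below spells that out. The struct `signal_layout` and the helpers `element_address` and `element_value` are hypothetical names chosen for illustration; their fields mirror the parameters of `extract_4byte_blocks`.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical descriptor for one strided signal (illustration only). */
struct signal_layout {
    const char *start_of_data;      /* first byte of the first block */
    size_t offset_of_entry;         /* position of the value inside each block */
    size_t offset_between_elements; /* distance from one block start to the next */
    size_t count;                   /* number of values to read */
};

/* Address of the i-th value: block start plus offset inside the block. */
static const char *element_address(const struct signal_layout *l, size_t i) {
    return l->start_of_data + i * l->offset_between_elements + l->offset_of_entry;
}

/* Read the i-th value as a 32-bit float; memcpy avoids alignment
   and strict-aliasing problems with the raw byte buffer. */
static float element_value(const struct signal_layout *l, size_t i) {
    float v;
    memcpy(&v, element_address(l, i), sizeof v);
    return v;
}
```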

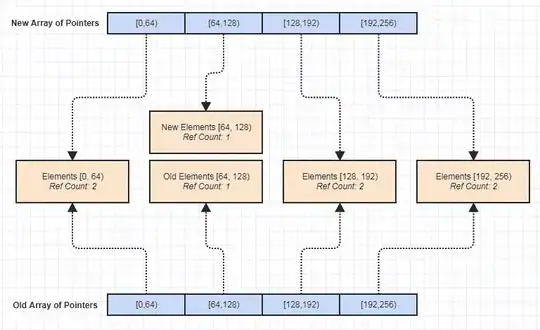

If you had to sketch it, it looks like this:

The blue blocks are the data I have to read out and write into an array, so that the values end up contiguous. The spacing between the blue blocks is always the same, but it can be larger than a cache line, which (I suspect) hurts performance a lot.
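To check the cache-line suspicion, the sketch below counts how many distinct cache lines a strided sequence of 4-byte reads touches. It assumes a 64-byte cache line (typical, but hardware-dependent) and a buffer that starts on a line boundary; `cache_lines_touched` and `CACHE_LINE` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed cache-line size; 64 bytes is common, but check your CPU. */
#define CACHE_LINE 64u

/* Count distinct cache lines touched by n 4-byte reads starting at byte
   `offset` and spaced `stride` bytes apart (buffer assumed line-aligned).
   Addresses never decrease, so comparing with the last line seen is enough. */
static size_t cache_lines_touched(size_t offset, size_t stride, size_t n) {
    size_t lines = 0;
    size_t last = SIZE_MAX; /* sentinel: no line seen yet */
    for (size_t i = 0; i < n; ++i) {
        size_t first_byte = offset + i * stride;
        size_t last_byte = first_byte + 3; /* a value may straddle two lines */
        for (size_t line = first_byte / CACHE_LINE; line <= last_byte / CACHE_LINE; ++line) {
            if (line != last) {
                ++lines;
                last = line;
            }
        }
    }
    return lines;
}
```

For contiguous values (stride 4), sixteen values share one line. Once the stride is 64 bytes or more, every value lands in its own line, so each 4-byte read pulls in a full 64-byte line and only 4/64 = 1/16 of the transferred bytes are actually used.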

My current code:

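```c
#include <stddef.h>
#include <string.h>

/* Copy size_of_array 4-byte values into arr. The i-th value is located at
   start_of_data + i * offset_between_elements + offset_of_entry. */
void extract_4byte_blocks(
        size_t offset_of_entry,          /* byte offset of the value inside each block */
        size_t offset_between_elements,  /* distance from one block start to the next */
        char *arr,                       /* destination, at least 4 * size_of_array bytes */
        size_t size_of_array,            /* number of values to copy */
        const char *start_of_data) {     /* start of the first block */
    const char *next_block_start = start_of_data;

    for (size_t i = 0; i < size_of_array; ++i) {
        const char *src = next_block_start + offset_of_entry;
        char *dest = arr + i * 4;

        next_block_start += offset_between_elements;

        /* memcpy avoids unaligned-access and strict-aliasing problems */
        memcpy(dest, src, 4);
    }
}
```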

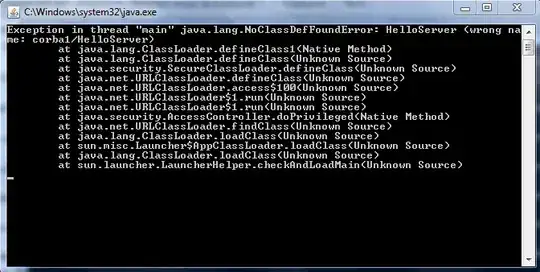

Current profiling data:

Cache:

Does anyone have a tip on what I can do to improve performance?

I ran the profiler again, this time with -O3, and then remembered why I had stopped profiling with -O3: I can't read anything useful from the results, and the 2-second gain is not big enough that I can say I can live with it. The only thing that changed is that the compiler probably inlined everything, which is why the time now shows up in the calling function.
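If the goal is only to see the copy loop as its own entry in an -O3 profile, one option (assuming GCC or Clang) is to forbid inlining of that single function with `__attribute__((noinline))`; MSVC's counterpart is `__declspec(noinline)`. The sketch below applies it to a version of the extraction loop. The attribute can itself shift the timing slightly, since the call is no longer optimized into the caller. Building with `-O3 -g` also lets many profilers map samples in inlined code back to source lines.

```c
#include <stddef.h>
#include <string.h>

/* GCC/Clang attribute: keep this function as a separate symbol even at -O3,
   so a sampling profiler attributes its time to it rather than the caller. */
__attribute__((noinline))
void extract_4byte_blocks(size_t offset_of_entry,
                          size_t offset_between_elements,
                          char *arr,
                          size_t size_of_array,
                          const char *start_of_data) {
    for (size_t i = 0; i < size_of_array; ++i) {
        /* Source computed from the index, so no pointer is ever
           advanced past the last block. */
        const char *src = start_of_data + offset_of_entry + i * offset_between_elements;
        memcpy(arr + i * 4, src, 4);
    }
}
```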

As you can see, the main problem is the reads: they cause most of the cache misses, which is what I suspected. I looked at `offset_between_elements`, and as suspected, it can be very large. For the reads that are the most performance-demanding it is 2632, 2210, 109 and 366 bytes, so each read results in a cache miss.
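A technique often tried for large, constant strides is software prefetching: request the cache line for an element a fixed number of iterations before it is copied, so that several misses are in flight at once. It may matter here because on many x86 CPUs the hardware prefetchers do not prefetch across 4 KiB page boundaries, and with a stride of 2632 bytes consecutive elements cross a page boundary more often than not. The sketch below is not from the original code and is not guaranteed to help; it uses the GCC/Clang builtin `__builtin_prefetch`, while the function name `extract_4byte_blocks_prefetch` and the value of `PREFETCH_DISTANCE` are hypothetical and should be tuned by measurement.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical prefetch distance in elements; the best value depends on
   memory latency and loop cost, so measure a few candidates. */
#define PREFETCH_DISTANCE 16

void extract_4byte_blocks_prefetch(size_t offset_of_entry,
                                   size_t offset_between_elements,
                                   char *arr,
                                   size_t size_of_array,
                                   const char *start_of_data) {
    for (size_t i = 0; i < size_of_array; ++i) {
        if (i + PREFETCH_DISTANCE < size_of_array) {
            /* Only form addresses of elements that exist. */
            const char *ahead = start_of_data + offset_of_entry
                              + (i + PREFETCH_DISTANCE) * offset_between_elements;
            /* rw = 0: read; locality = 0: data is used once */
            __builtin_prefetch(ahead, 0, 0);
        }
        const char *src = start_of_data + offset_of_entry + i * offset_between_elements;
        memcpy(arr + i * 4, src, 4);
    }
}
```

The prefetch is only a hint, so the copied result is identical to the plain loop; a reasonable first experiment is to time distances such as 8, 16 and 32 on the real data.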