I am using Databricks to run the code below, but I see 3 jobs created for it.

I am trying to understand why Spark created 3 jobs that more or less do the same task.

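    from pyspark.sql import Row
    from pyspark.sql.types import StructType, StructField, StringType

    # Schema with two string columns
    myschema = StructType([
        StructField("ID", StringType()),
        StructField("Eventype", StringType())
    ])

    # Four rows of sample data built locally on the driver
    mydata = [Row('1', '4/12/2023'), Row('2', '4/13/2023'), Row('3', '4/14/2023'), Row('4', '4/15/2023')]

    # `spark` is the SparkSession that Databricks notebooks provide
    df = spark.createDataFrame(mydata, schema=myschema)

    # This action is what triggers the jobs
    df.show()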

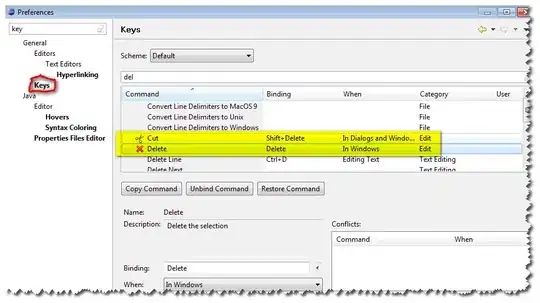

Below are the DAG visualisations of all 3 jobs: