We're trying to do the following in Mathematica: "RMagick remove white background from image and make it transparent", that is, remove the white background from an image and make it transparent.

But with actual photos the result looks lousy: there's a halo around the image.

Here's what we've tried so far:

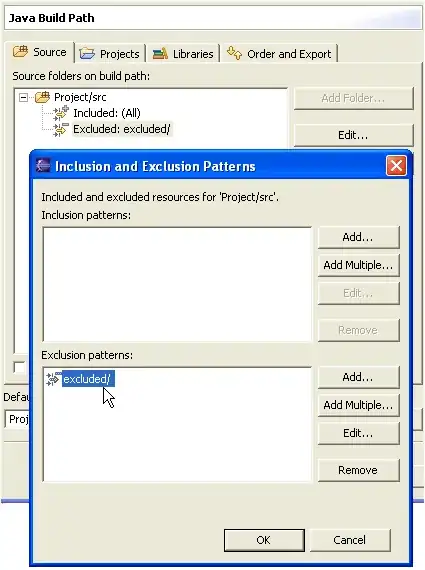

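    (* mask the white background with Chan-Vese, invert the mask, and use it as the alpha channel *)
    unground0[img_] := With[{mask = ChanVeseBinarize[img, TargetColor -> {1., 1., 1.}]},
      Rasterize[SetAlphaChannel[img, ImageApply[1 - # &, mask]], Background -> None]]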

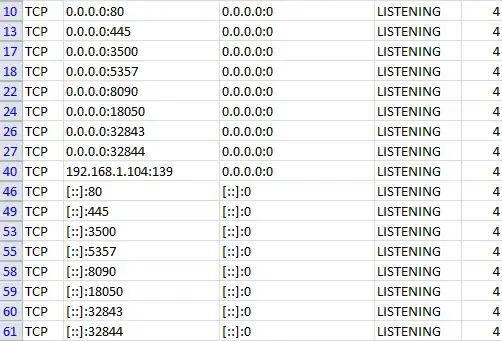

Here's an example of what that does.

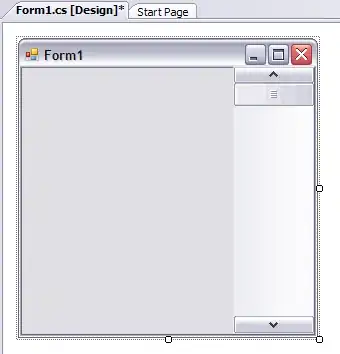

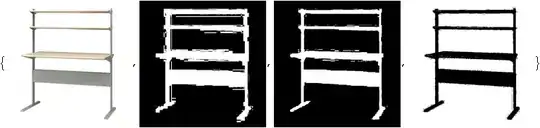

Original image:

Image with the white background replaced with no background (or, for demonstration purposes here, a pink background):
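For anyone who wants to reproduce the pink-background preview, here is a minimal sketch of one way to do it. The name previewOnPink is a hypothetical helper, not part of the code above, and it assumes its argument is already an Image with an alpha channel. RemoveAlphaChannel with a colour argument blends the transparent pixels onto that colour, which makes any halo easy to spot.

```mathematica
(* hypothetical helper: flatten a transparent image onto pink so that halos stand out *)
(* assumes the input is an Image that already carries an alpha channel *)
previewOnPink[img_?ImageQ] := RemoveAlphaChannel[img, Pink]
```

It would be applied to the result of any candidate solution, e.g. previewOnPink[unground0[photo]], where photo stands for the image under test. If Rasterize returns a Graphics object rather than an Image in your version, the ImageQ check will not match and the result needs converting to an Image first.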

Any ideas for getting rid of that halo? By tweaking options like LevelPenalty, I can only get the halo to go away at the expense of losing some of the image.

EDIT: So that I can compare solutions for the bounty, please structure your solution like the one above, namely as a self-contained function named unground-something that takes an image and returns an image with a transparent background.