Is it possible to load a file with 3 to 4 million lines in less than 1 second (1.000000 s)? Each line contains one word, and words range from 1 to 17 characters in length (does that matter?).

My current code is:

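```csharp
using System.Collections.Generic;
using System.IO;
using System.Text;

List<string> LoadDictionary(string filename)
{
    List<string> wordsDictionary = new List<string>();

    // Code page 1250 (Central European): I need ę, ą, ć, ł etc.
    // On .NET Core / .NET 5+ this code page is only available after calling
    // Encoding.RegisterProvider(CodePagesEncodingProvider.Instance) once at startup.
    Encoding enc = Encoding.GetEncoding(1250);

    using (StreamReader r = new StreamReader(filename, enc))
    {
        string line;
        while ((line = r.ReadLine()) != null)
        {
            // Keep only words longer than 2 characters.
            if (line.Length > 2)
            {
                wordsDictionary.Add(line);
            }
        }
    }

    return wordsDictionary;
}
```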
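A possible variant to try, sketched under assumptions rather than offered as a known fix: reserve the list's capacity up front and read through a `FileStream` with 64 KB buffers and `FileOptions.SequentialScan`. `FastDictionaryLoader` and the `capacity` parameter are hypothetical names; `capacity` is a caller-supplied guess at the number of lines. The encoding is passed in so the sketch also runs where code page 1250 has not been registered. Whether this reaches a 2× speedup is not guaranteed and has to be measured on the actual file.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Text;

public static class FastDictionaryLoader
{
    // Hypothetical helper: same filtering as the original loop (keep words
    // longer than 2 characters), with a larger read buffer and an optionally
    // pre-sized list.
    public static List<string> LoadDictionary(string filename, Encoding enc, int capacity = 0)
    {
        // A non-zero capacity avoids repeated internal array growth and copying
        // while millions of words are added.
        var words = new List<string>(capacity);

        const int bufferSize = 1 << 16; // 64 KB instead of the small defaults

        // SequentialScan hints to the OS that the file is read front to back.
        using (var fs = new FileStream(filename, FileMode.Open, FileAccess.Read,
                                       FileShare.Read, bufferSize, FileOptions.SequentialScan))
        // detectEncodingFromByteOrderMarks: true matches new StreamReader(filename, enc).
        using (var reader = new StreamReader(fs, enc, true, bufferSize))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
            {
                if (line.Length > 2)
                {
                    words.Add(line);
                }
            }
        }

        return words;
    }
}
```

For the file in the question this would be called as `FastDictionaryLoader.LoadDictionary(filename, Encoding.GetEncoding(1250), 4_000_000)`. Pre-sizing trades a possibly oversized array for fewer reallocations as the list grows.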

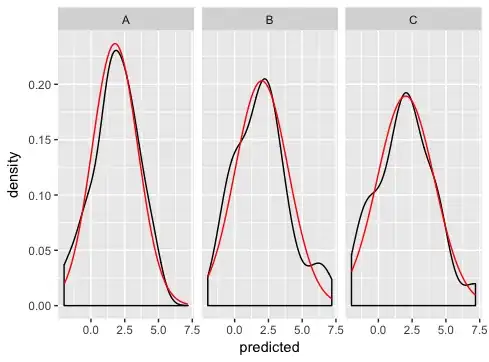

Results of timed execution:
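To reproduce timings like these, here is a minimal measurement sketch built around a hypothetical `LoadTimer.TimeLoad` helper. It runs the loader once as a warm-up, so that JIT compilation and a cold OS file cache do not dominate, and then times a second run with `System.Diagnostics.Stopwatch`.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

public static class LoadTimer
{
    // Hypothetical helper: calls the loader twice and returns the elapsed
    // time of the second call; the first call only warms up JIT and file cache.
    public static TimeSpan TimeLoad(Func<List<string>> load, out int count)
    {
        load(); // warm-up run, result discarded

        Stopwatch sw = Stopwatch.StartNew();
        List<string> words = load();
        sw.Stop();

        count = words.Count; // number of words kept by the timed run
        return sw.Elapsed;
    }
}
```

Usage with the method from the question, where `"words.txt"` is a placeholder path: `TimeSpan t = LoadTimer.TimeLoad(() => LoadDictionary("words.txt"), out int n);`. Timing a single cold run instead would mostly measure disk access rather than the parsing loop.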

How can I make this method run in half the time?