Here is how I did it using the goodFeaturesToTrack function:

```cpp
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main(int argc, char* argv[])
{
    Mat laserCross = imread("laser_cross.png");
    if (laserCross.empty())
    {
        cerr << "could not read laser_cross.png" << endl;
        return 1;
    }

    vector<Mat> laserChannels;
    split(laserCross, laserChannels);

    vector<Point2f> corners;
    // only using the red channel (index 2, since OpenCV stores BGR) since it contains the interesting bits...
    // maxCorners = 1 asks for the single strongest corner
    goodFeaturesToTrack(laserChannels[2], corners, 1, 0.01, 10, Mat(), 3, false, 0.04);

    if (!corners.empty())
    {
        // mark the detected corner with a filled green dot
        circle(laserCross, corners[0], 3, Scalar(0, 255, 0), -1, 8, 0);
    }

    imshow("laser red", laserChannels[2]);
    imshow("corner", laserCross);
    waitKey();

    return 0;
}
```

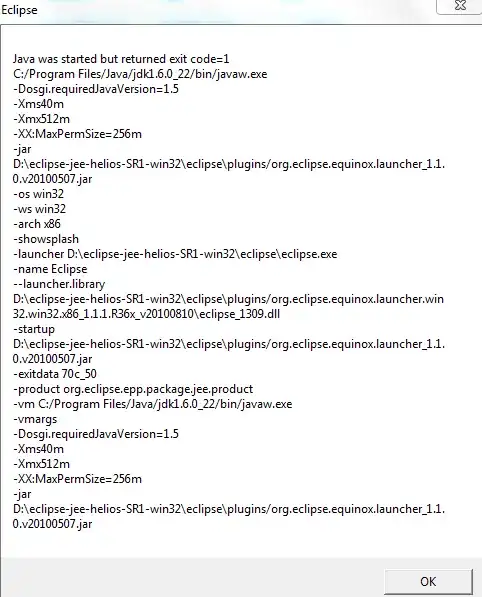

This results in the following output:

You could also look at using cornerSubPix to refine the detected corner to sub-pixel accuracy.

EDIT: I was curious about implementing vasile's answer, so I sat down and tried it out. It seems to work quite well! Here is my implementation of what he described. For segmentation, I decided to use Otsu's method for automatic threshold selection. This will work well as long as there is high separation between the laser cross and the background; otherwise you might want to switch to an edge detector like Canny. I did have to deal with some angle ambiguities for the near-vertical lines (HoughLines reports theta in [0, pi), so angles close to 0 and close to 180 degrees describe almost the same direction). The code seems to work, but there may be a better way of dealing with the angle ambiguities.
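
To make the "high separation" caveat a bit more concrete, here is a rough sketch of what Otsu's method computes: it tries every threshold and keeps the one that best splits the histogram into two classes (maximum between-class variance). This is a plain C++ illustration, not OpenCV's actual implementation, and the function name otsuThreshold is made up for the example.

```cpp
#include <array>
#include <cstdint>
#include <vector>

// Returns the threshold t in [0, 255] that maximizes the between-class
// variance when pixels <= t form one class and pixels > t the other.
int otsuThreshold(const std::vector<std::uint8_t>& pixels)
{
    std::array<double, 256> hist{};
    for (std::uint8_t p : pixels) hist[p] += 1.0;

    const double total = static_cast<double>(pixels.size());
    double sumAll = 0.0;
    for (int i = 0; i < 256; ++i) sumAll += i * hist[i];

    double weightLow = 0.0, sumLow = 0.0, bestVariance = -1.0;
    int best = 0;
    for (int t = 0; t < 256; ++t)
    {
        weightLow += hist[t];
        sumLow += t * hist[t];
        const double weightHigh = total - weightLow;
        if (weightLow == 0.0 || weightHigh == 0.0) continue; // one class is empty

        const double meanLow = sumLow / weightLow;
        const double meanHigh = (sumAll - sumLow) / weightHigh;
        // between-class variance, up to a constant factor of 1 / total^2
        const double variance = weightLow * weightHigh *
                                (meanLow - meanHigh) * (meanLow - meanHigh);
        if (variance > bestVariance)
        {
            bestVariance = variance;
            best = t;
        }
    }
    return best;
}
```

When the cross and the background form two well-separated peaks, any threshold between the peaks gives a clean split. When the histogram is one broad lump, the "best" threshold is still returned but the segmentation is not meaningful, which is where an edge detector becomes the better choice.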

Anyway, here is the code:

```cpp
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <cmath>
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

Point2f computeIntersect(Vec2f line1, Vec2f line2);
vector<Point2f> lineToPointPair(Vec2f line);
bool acceptLinePair(Vec2f line1, Vec2f line2, float minTheta);

int main(int argc, char* argv[])
{
    Mat laserCross = imread("laser_cross.png");
    if (laserCross.empty())
    {
        cerr << "could not read laser_cross.png" << endl;
        return 1;
    }

    vector<Mat> laserChannels;
    split(laserCross, laserChannels);

    namedWindow("otsu", WINDOW_NORMAL);
    namedWindow("intersect", WINDOW_NORMAL);

    // segment the red channel; with THRESH_OTSU the threshold argument (0.0) is ignored
    Mat otsu;
    threshold(laserChannels[2], otsu, 0.0, 255.0, THRESH_BINARY | THRESH_OTSU);
    imshow("otsu", otsu);

    // each detected line is (rho, theta); 70 is the accumulator vote threshold
    vector<Vec2f> lines;
    HoughLines(otsu, lines, 1, CV_PI/180, 70, 0, 0);

    // compute the intersection from the lines detected...
    int lineCount = 0;
    Point2f intersect(0, 0);
    for (size_t i = 0; i < lines.size(); i++)
    {
        // start at i + 1 so each pair of lines is visited once
        for (size_t j = i + 1; j < lines.size(); j++)
        {
            Vec2f line1 = lines[i];
            Vec2f line2 = lines[j];
            if (acceptLinePair(line1, line2, CV_PI / 4))
            {
                intersect += computeIntersect(line1, line2);
                lineCount++;
            }
        }
    }

    if (lineCount > 0)
    {
        // average all pairwise intersections
        intersect.x /= (float)lineCount;
        intersect.y /= (float)lineCount;

        Mat laserIntersect = laserCross.clone();
        circle(laserIntersect, intersect, 1, Scalar(0, 255, 0), 3);
        imshow("intersect", laserIntersect);
    }

    waitKey();

    return 0;
}

// only accept pairs of lines whose directions differ by more than minTheta
bool acceptLinePair(Vec2f line1, Vec2f line2, float minTheta)
{
    float theta1 = line1[1], theta2 = line2[1];

    if (theta1 < minTheta)
    {
        theta1 += CV_PI; // dealing with 0 and 180 ambiguities...
    }

    if (theta2 < minTheta)
    {
        theta2 += CV_PI; // dealing with 0 and 180 ambiguities...
    }

    // fabs rather than abs, so the integer overload can never be picked
    return fabs(theta1 - theta2) > minTheta;
}

// the long nasty wikipedia line-intersection equation...bleh...
Point2f computeIntersect(Vec2f line1, Vec2f line2)
{
    vector<Point2f> p1 = lineToPointPair(line1);
    vector<Point2f> p2 = lineToPointPair(line2);

    float denom = (p1[0].x - p1[1].x)*(p2[0].y - p2[1].y) - (p1[0].y - p1[1].y)*(p2[0].x - p2[1].x);
    Point2f intersect(((p1[0].x*p1[1].y - p1[0].y*p1[1].x)*(p2[0].x - p2[1].x) -
                       (p1[0].x - p1[1].x)*(p2[0].x*p2[1].y - p2[0].y*p2[1].x)) / denom,
                      ((p1[0].x*p1[1].y - p1[0].y*p1[1].x)*(p2[0].y - p2[1].y) -
                       (p1[0].y - p1[1].y)*(p2[0].x*p2[1].y - p2[0].y*p2[1].x)) / denom);

    return intersect;
}

// convert a (rho, theta) line into two points lying on it
vector<Point2f> lineToPointPair(Vec2f line)
{
    vector<Point2f> points;

    float r = line[0], t = line[1];
    double cos_t = cos(t), sin_t = sin(t);
    double x0 = r*cos_t, y0 = r*sin_t; // point on the line closest to the origin
    double alpha = 1000;               // how far to step along the line in each direction

    points.push_back(Point2f(x0 + alpha*(-sin_t), y0 + alpha*cos_t));
    points.push_back(Point2f(x0 - alpha*(-sin_t), y0 - alpha*cos_t));

    return points;
}
```
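
As for a better way of dealing with the angle ambiguities and the long intersection formula, here is one possible sketch (it is not part of the program above). Since a Hough line satisfies x*cos(theta) + y*sin(theta) = rho, two lines give a 2x2 linear system that can be solved directly, and the angle between two undirected lines can be folded into [0, pi/2] so that 0 and 180 degrees count as the same direction. PolarLine, angleBetween and intersectPolar are hypothetical names standing in for Vec2f and the helpers above.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical stand-in for a (rho, theta) pair as returned by HoughLines.
struct PolarLine
{
    double rho;
    double theta;
};

// Angle between two undirected lines, folded into [0, pi/2].
double angleBetween(const PolarLine& a, const PolarLine& b)
{
    const double pi = std::acos(-1.0);
    const double d = std::fmod(std::fabs(a.theta - b.theta), pi);
    return std::min(d, pi - d);
}

// Solves  x*cos(theta) + y*sin(theta) = rho  for both lines (Cramer's rule).
// Returns false when the lines are (nearly) parallel.
bool intersectPolar(const PolarLine& a, const PolarLine& b, double& x, double& y)
{
    const double ca = std::cos(a.theta), sa = std::sin(a.theta);
    const double cb = std::cos(b.theta), sb = std::sin(b.theta);
    const double det = ca * sb - sa * cb; // equals sin(b.theta - a.theta)
    if (std::fabs(det) < 1e-9)
    {
        return false;
    }
    x = (a.rho * sb - b.rho * sa) / det;
    y = (b.rho * ca - a.rho * cb) / det;
    return true;
}
```

With this, the pair test would become angleBetween(a, b) > minTheta, with no special case for thetas near zero. A vertical line at x = 3 (rho = 3, theta = 0) and a horizontal line at y = 4 (rho = 4, theta = pi/2) intersect at (3, 4).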

Hope that helps!