Markov decision process (using value iteration) I can't get my head around. Resources use mathematical formulas way too complex for my competencies.

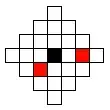

I want to use it on a 2D grid filled with walls (unattainable), coins (desirable) and enemies that move (must be avoided at all costs). The goal is to collect all coins without touching the enemies. I want to create an AI for the main player using a Markov decision process. It looks like (game-related aspect is not a concern, I want to understand Markov decision process in general):

A simplification of Markov decision process is a grid which holds in which direction we need to go (starting at a certain position on the grid) to collect the coins and avoid the enemies. Using Markov decision process terms, it creates a collection of states (the grid) which holds policies (the action to take: up, down, right, left) for a state (a position on the grid). The policies are determined by the "utility" values of each state, which themselves are calculated by evaluating how much getting there would be beneficial in the short and long term.

Is this correct? I'd like to know what the variables from the following equation represent in my situation:

From the book "Artificial Intelligence - A Modern Approach" by Russell & Norvig.

s would be a list of squares from the grid, a a specific action (up, down, right, left), but what about the rest? How would the reward and utility functions be implemented? It would be great if someone shows pseudo-code with similarities to my situation.