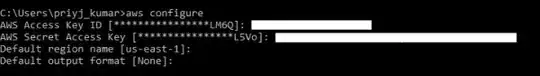

AWS CLI

See the "AWS CLI Command Reference" for more information.

AWS recently released its command line tools, which work much like boto and can be installed using:

sudo easy_install awscli

or

sudo pip install awscli

Once installed, you can then simply run:

aws s3 sync s3://<source_bucket> <local_destination>

For example:

aws s3 sync s3://mybucket .

will download all the objects in mybucket to the current directory, and will output:

download: s3://mybucket/test.txt to test.txt

download: s3://mybucket/test2.txt to test2.txt

This will download all of your files using a one-way sync. It will not delete any existing files in your current directory unless you specify --delete, and it won't change or delete any files on S3.
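Because --delete removes local files, it can help to preview a sync before running it for real. Here is a minimal sketch, assuming the bucket name mybucket from the example above. `s3_mirror` is a hypothetical helper name of my own, not part of the AWS CLI; `--dryrun` and `--delete` are existing `aws s3 sync` options.

```shell
# Hypothetical helper (not part of the AWS CLI): sync a bucket into a local directory.
# Usage: s3_mirror <bucket> <local_dir> [extra "aws s3 sync" options...]
s3_mirror() {
  src="s3://$1"   # bucket name becomes the S3 source URI
  dest="$2"       # local destination directory
  shift 2         # whatever remains is passed through to "aws s3 sync"
  aws s3 sync "$src" "$dest" "$@"
}

# Preview what would be downloaded, without transferring anything:
#   s3_mirror mybucket . --dryrun
# Make the local directory an exact mirror, deleting local files not in the bucket:
#   s3_mirror mybucket . --delete
```

Running the `--dryrun` form first shows which transfers (and, together with --delete, which deletions) the real command would perform.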

You can also sync from one S3 bucket to another, or from a local directory to an S3 bucket.
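The other sync directions use the same command with the source and destination swapped or both set to S3 URIs. A rough sketch follows; the function names and the bucket names in the usage comments are placeholders I made up for illustration.

```shell
# Hypothetical helpers (not part of the AWS CLI) for the other sync directions.

# Copy objects from one bucket to another.
# Usage: s3_bucket_to_bucket <source_bucket> <destination_bucket>
s3_bucket_to_bucket() {
  aws s3 sync "s3://$1" "s3://$2"
}

# Upload the contents of a local directory to a bucket.
# Usage: s3_upload_dir <local_dir> <bucket>
s3_upload_dir() {
  aws s3 sync "$1" "s3://$2"
}

# Examples (placeholder bucket names):
#   s3_bucket_to_bucket my-source-bucket my-destination-bucket
#   s3_upload_dir . mybucket
```

For instance, `s3_upload_dir . mybucket` runs `aws s3 sync . s3://mybucket`, which uploads the current directory to mybucket.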

Check out the documentation and other examples.

The example above downloads a full bucket. You can also download a single folder recursively by running:

aws s3 cp s3://BUCKETNAME/PATH/TO/FOLDER LocalFolderName --recursive

This instructs the CLI to recursively download all files and folder keys under PATH/TO/FOLDER in the BUCKETNAME bucket into LocalFolderName.
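As an alternative, `aws s3 sync` also accepts a path inside a bucket, so the same folder can be fetched with sync; on repeated runs it copies only new and updated files. A small sketch, where `s3_sync_folder` is a hypothetical helper name and the arguments are the same placeholders as above:

```shell
# Hypothetical helper (not part of the AWS CLI): sync one folder of a bucket locally.
# Usage: s3_sync_folder <bucket> <path/to/folder> <local_dir>
s3_sync_folder() {
  aws s3 sync "s3://$1/$2" "$3"
}

# Example, using the placeholders from the cp command above:
#   s3_sync_folder BUCKETNAME PATH/TO/FOLDER LocalFolderName
```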