There remains a great deal of confusion among the general public about what Turing Completeness is and what constitutes a Turing-Complete language. I wrote a blog post to try to clear up some of these misconceptions, which is to say I watched a TV show about super-intelligent computers and it presented a really useful analogy:

https://github.com/ubuvoid/writings/blob/main/miller/miller_is_sql.md

In this answer, I'll elaborate on the case of SQL.

We actually have enough information after a cursory at a glance to determine that SQL is Turing-Complete.

That's because it has affordances for branching choices ("CASE IF THEN"), and because even in SQL dialects that restrict recursion, it's trivial to write output to a table and re-read it in a subsequent query as though it were a memory register. Therefore, you can implement an interpreter for an assembly language in SQL without thinking very hard about it. If you want to demonstrate this with a minimum of labor, implement a single-instruction-set computer with an operation like 'subleq' (see https://en.wikipedia.org/wiki/One-instruction_set_computer ).

The fact that some implementations try to sandbox execution for SQL queries doesn't change the core functionality of the language, it just slaps a band-aid on the problem that well-meaning "convenience" features tend to cut through like a knife.

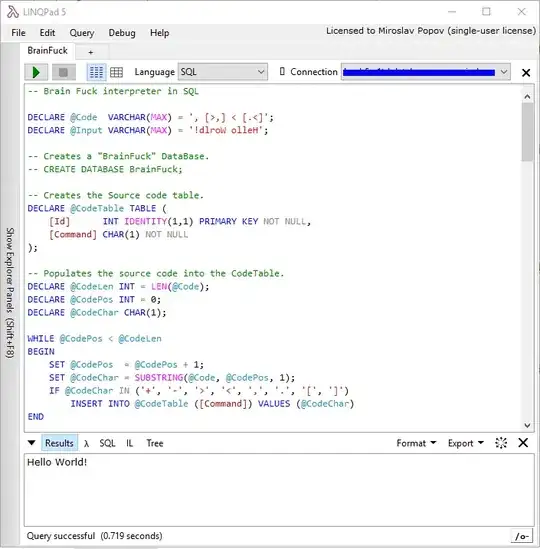

For a demonstration, consider the following:

- Say you have a restricted-execution "Non"-Turing Complete SQL interpreter, the kind that only allows you to run a single query or update operation at once and imposes limits on that query.

- Now, say you can invoke that interpreter from the shell.

- Finally, say you type the words "while true:" above the command to execute this SQL interpreter in a shell. Now you have Turing-complete execution.

Is this a meaningful distinction? I would argue that if the only difference you can articulate between your language and Turing Completeness is the fact that there's no while loop, then your framework for interpreting Turing Completeness is not particularly useful.

Non-Turing Complete languages in widespread use tend to describe "inert" data artifacts, and lack affordances for branching paths or explicit procedure invocations. Simply put, if a language has no "verbs" and/or no equivalent to "if then else", you can be sure it's Non-Turing Complete. Examples include interface definition languages (DIANA IDL, CORBA, Proto, Thrift, JSON Typedef, Coral, Ion, etc.), markup languages (XML, HTML, Markdown), data representation languages (JSON, text-format protocol buffers, ascii DIANA representation), and so on.

It's often useful to impose restrictions on Turing-complete execution at strategic parts of a software architecture to achieve separation of concerns, and non-TC languages offer a simple and robust means of making those guarantees.

A classic example is validating the schema of JSON payloads or other data artifacts in an HTTP or RPC request/response, a labor-intensive and error-prone task that can be automated with IDL's and code templates.

Keep circulating the artifacts.

(Edit 2022/04/08: Fun fact. Amazon carries the dubious distinction of having invented not one but two of the above-mentioned IDL's, while simultaneously using a third one that was invented at Facebook. Remember that the primary purpose of an interface definition language is to define interfaces, so I hope they have an IDL for converting one IDL to another. One colleague of mine compared Amazon's software infrastructure to the Paris sewers.)