Situation

I have created a Monster. The Applikentstein is a RAM killer, and I still do not have a crystal-clear grasp of how and why it is apparently so rogue by design. Basically, it is a computing application that launches test combinations for N scenarios. Each test runs in a single dedicated thread, and involves reading large binary data, processing it, and writing the results to the DB.

Data is first put into buffered queues while it is being read (~100 MB), and dequeued on the fly as it is processed. Chunks of data are tracked in arrays during processing. As for threading, I have a worker buffer queue with N workers; every time one finishes and dies, a new one is launched via a Queue.
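To make the setup above concrete, here is a minimal sketch of a bounded read/process queue, assuming a `BlockingCollection<byte[]>` stands in for the buffered queue. The names `BoundedPipelineSketch`, `ChunkSize` and `MaxQueuedChunks` are hypothetical, and the chunk sizes are illustrative rather than the real application's values.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

public static class BoundedPipelineSketch
{
    // Hypothetical sizes, chosen only for illustration.
    public const int ChunkSize = 1024 * 1024;  // 1 MB per chunk
    public const int MaxQueuedChunks = 100;    // at most ~100 MB waiting in the queue

    // Produces chunkCount chunks, consumes them, and returns the bytes consumed.
    public static long Run(int chunkCount)
    {
        using (var queue = new BlockingCollection<byte[]>(boundedCapacity: MaxQueuedChunks))
        {
            // Producer: stands in for the reader. Add() blocks while the queue is
            // full, so the reader cannot run ahead of the processing side.
            var producer = Task.Run(() =>
            {
                try
                {
                    for (int i = 0; i < chunkCount; i++)
                    {
                        queue.Add(new byte[ChunkSize]);
                    }
                }
                finally
                {
                    // Lets the consuming loop below terminate.
                    queue.CompleteAdding();
                }
            });

            // Consumer: stands in for the processing step. Each chunk becomes
            // unreachable after its iteration unless something else keeps it.
            long processed = 0;
            foreach (var chunk in queue.GetConsumingEnumerable())
            {
                processed += chunk.Length;
            }

            producer.Wait();
            return processed;
        }
    }
}
```

With a bounded capacity, the queue itself cannot hold more than roughly `MaxQueuedChunks * ChunkSize` bytes, so any growth beyond that has to come from references kept elsewhere (for example, the arrays used to track chunks).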

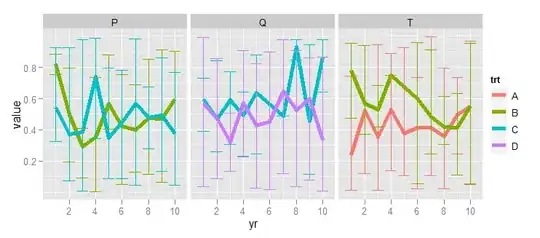

Below is what happens if I launch too many threads ("too many" depending on the System RAM)

The problem is that the RAM usage slope never flattens: it just keeps growing, drops from time to time (a few threads finishing, the GC doing its housekeeping), but as soon as new threads are launched it bounces back, higher and higher as time goes on (probably a leak here).

Over time, I have asked this question, that one, and this other one. Now I feel that the more I learn about this, the less I REALLY understand. (Sigh)

Questions

First, I would like clean explanations/resources on how exactly memory is managed under .NET.

I know there is a lot of literature and many blog articles on this wide topic. But the subject is so large that I really do not know where to start without losing myself (and my company's precious time). I want to stay focused and objective-oriented.

Second, the "tilt" came when I tried to simulate/reproduce the steep slope above (created "naturally" by the RAM'osaurus Monster) in lab conditions, in order to isolate this behavior and find a cure for it (i.e., monitoring the memory used by the process and limiting it to X% of total memory by stopping any new thread creation).
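The "cure" described above could be sketched roughly as follows. This is only an illustration under stated assumptions: `MemoryGateSketch`, `CanStartWorker` and `CurrentProcessBytes` are hypothetical names, and the total memory figure is passed in by the caller rather than queried, since how to obtain it depends on the runtime version.

```csharp
using System;
using System.Diagnostics;

public static class MemoryGateSketch
{
    // Returns true while the process is still below maxFraction of totalBytes.
    // totalBytes is supplied by the caller (an assumption of this sketch).
    public static bool CanStartWorker(long usedBytes, long totalBytes, double maxFraction)
    {
        if (totalBytes <= 0)
            throw new ArgumentOutOfRangeException(nameof(totalBytes));
        if (maxFraction <= 0.0 || maxFraction > 1.0)
            throw new ArgumentOutOfRangeException(nameof(maxFraction));

        return usedBytes < (long)(totalBytes * maxFraction);
    }

    // Private bytes committed by the current process. This includes native
    // allocations and heap fragmentation, not only live managed objects.
    public static long CurrentProcessBytes()
    {
        using (var process = Process.GetCurrentProcess())
        {
            return process.PrivateMemorySize64;
        }
    }
}
```

A scheduler would call `CanStartWorker(MemoryGateSketch.CurrentProcessBytes(), totalRamBytes, 0.7)` before launching the next thread, where `totalRamBytes` and the 0.7 threshold are placeholders. Note that this only throttles the symptom: if references are being retained, memory still climbs and the gate simply stops admitting new work.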

Why was it so hard to reproduce in lab conditions (with some memory-consuming multithreaded loop) what my rogue program seems to do so easily (bringing the RAM to its knees in the Task Manager)? The GC seemed to clean things up regularly in the prototype, while my application seems to keep a lot of referenced stuff in RAM. How come?

A third puzzling thing is the way caching works: why can Firefox use up to 1 GB of memory and still make use of the cache, while my app just blows up the RAM? Could it be that the data is used so heavily/frequently by the running threads that it never gets cached?

Tracks and Leads

I already used the VS profiler and identified a few bottlenecks (large array sorting), and used GCRoot and the WinDbg console. Each time I tracked down further leaks and fixed some of them (more or less; missing event unsubscriptions and so on).
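For readers unfamiliar with the event-unsubscription kind of leak mentioned above, here is a minimal sketch of the pattern. The types `ResultPublisher` and `Worker` are hypothetical and are not taken from the application; the point is only that a long-lived publisher holds a delegate, and therefore a reference, to every subscriber that never unsubscribes.

```csharp
using System;

// Long-lived object: its event keeps every subscribed handler target alive.
public sealed class ResultPublisher
{
    public event EventHandler ResultReady;

    public int SubscriberCount
    {
        get { return ResultReady == null ? 0 : ResultReady.GetInvocationList().Length; }
    }

    public void Raise()
    {
        var handler = ResultReady;
        if (handler != null) handler(this, EventArgs.Empty);
    }
}

// Short-lived object holding a large buffer (size is illustrative).
public sealed class Worker : IDisposable
{
    private readonly ResultPublisher _publisher;
    private readonly byte[] _buffer = new byte[1024 * 1024];

    public int Notifications { get; private set; }
    public int BufferLength { get { return _buffer.Length; } }

    public Worker(ResultPublisher publisher)
    {
        _publisher = publisher;
        _publisher.ResultReady += OnResultReady;  // publisher now references this worker
    }

    private void OnResultReady(object sender, EventArgs e)
    {
        Notifications++;
    }

    public void Dispose()
    {
        // Without this line the publisher keeps the worker, and its buffer,
        // reachable for as long as the publisher itself lives.
        _publisher.ResultReady -= OnResultReady;
    }
}
```

Wrapping each worker in a `using` block, or calling `Dispose()` when its thread finishes, removes the handler so the GC can reclaim the worker and its buffer.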

I know my understanding of some basic notions about memory is still poor, that's a fact. That's why I am asking for a lead here. I am prepared to read sensible resources, learn more, and follow a few pieces of enlightened advice.