I have a table that has reached nearly 3 million records. While I appreciate this amount may not be considered large relative to RDBMS, I have noticed slow down in operations that deal with this table. I feel I can optimize or re-architect it.

This is part of the database for the PocketBracket March Madness App. Essentially the table stores meta data for a one to many relationship (Brackets has many Bracket Picks). The twist is the demand on the table is different at times. For example, there is a short period (two weeks) where the table is performing mostly writes. But for the rest of the year it is mostly reads. Furthermore, a far majority of the records are not accessed.

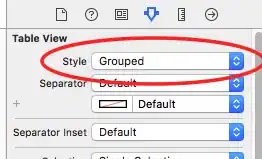

Here is a screenshot of the current structure:

With that, here are some thoughts I have:

- Place the old records in a separate table. Decreases the amount of records, but would require code modifications.

- Denormalize the table so the models are 1-1 (i.e. condense all bracket picks into a single serialized column). Decreases the amount of records, but would require code modifications.

- Swap the table's engine and or indexes during demand periods (i.e. InnoDB/MyISAM).

- Something I haven't thought of...

I would appreciate your direction. In the end, I am fine with code changes, I just want to ensure I'm re-architecting in the right direction.