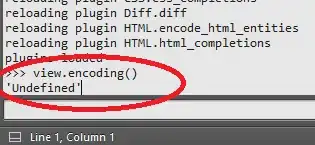

I'm trying to get a Python 3 program to manipulate the contents of a text file. However, when I try to read the file, I get the following error:

```
Traceback (most recent call last):
  File "SCRIPT LOCATION", line NUMBER, in <module>
    text = file.read()
  File "C:\Python31\lib\encodings\cp1252.py", line 23, in decode
    return codecs.charmap_decode(input,self.errors,decoding_table)[0]
UnicodeDecodeError: 'charmap' codec can't decode byte 0x90 in position 2907500: character maps to <undefined>
```
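For context, a minimal sketch that reproduces this kind of error and shows the usual fix. In Python 3, `open()` in text mode without an `encoding` argument decodes with the locale's preferred encoding, which the traceback shows was cp1252 here, and byte 0x90 has no mapping in cp1252. The sample content and the `read_text` helper are hypothetical, and the sketch assumes the real file is UTF-8; the actual file may use a different encoding.

```python
import os
import tempfile


def read_text(path, encoding):
    """Hypothetical helper: read a whole file, decoding with an explicit encoding."""
    with open(path, encoding=encoding) as f:
        return f.read()


# Hypothetical sample: UTF-8 text containing Cyrillic 'А' (U+0410),
# which UTF-8 encodes as b'\xd0\x90', so the bytes include 0x90.
sample = "header line\nА\n"
data = sample.encode("utf-8")

fd, path = tempfile.mkstemp(suffix=".txt")
with os.fdopen(fd, "wb") as f:
    f.write(data)

# Decoding as cp1252 fails on byte 0x90, just like in the traceback.
cp1252_error = None
try:
    read_text(path, "cp1252")
except UnicodeDecodeError as exc:
    cp1252_error = exc.reason

# Passing the file's actual encoding (assumed UTF-8 here) decodes cleanly.
text = read_text(path, "utf-8")
os.remove(path)
```

If the file turns out not to be UTF-8, substitute its real encoding. Passing `errors="replace"` to `open()` avoids the exception but silently loses the undecodable characters.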

After reading this Q&A, see *How to determine the encoding of text* if you need help figuring out the encoding of the file you are trying to open.