We use a Google Guava LoadingCache for bitmaps in an Android application. The application runs a drawing thread that paints the bitmaps in the cache to a Canvas. If a specific bitmap is not in the cache, it is simply not drawn, so that loading never blocks the drawing thread.

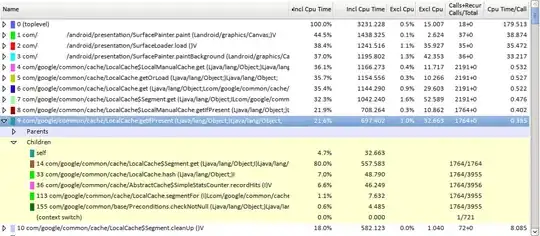

However, the painting stutters visibly and the frame rate is lower than we would like. I narrowed it down to the cache's getIfPresent() method, which alone takes over 20% of the application's total CPU time. Within getIfPresent(), LocalCache$Segment.get() takes over 80% of the time.

Bear in mind that this is only a lookup of a bitmap that is already present; a load never happens in get(). I expected some bookkeeping overhead in get() for the LRU queue that decides which entry to evict when the segment is full. But this is at least an order of magnitude slower than what a key lookup in an LRU LinkedHashMap.get() would give me.
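For reference, this is roughly the kind of LRU LinkedHashMap I am comparing against. It is only a minimal sketch: the class name `LruMap` is made up for illustration and is not part of Guava or of our code. It relies on the access-order mode of `LinkedHashMap` and on `removeEldestEntry()`.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical LRU map used only as a baseline for comparison. */
class LruMap<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    LruMap(int maxEntries) {
        // accessOrder = true: get() moves the accessed entry to the end,
        // so the first entry in iteration order is the least recently used.
        super(16, 0.75f, true);
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        // Called after each insertion; returning true evicts the eldest entry.
        return size() > maxEntries;
    }
}
```

Unlike the Guava cache, such a map is not thread-safe. In access-order mode even get() modifies the map, so it would need external synchronization if the drawing thread and a loading thread share it.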

We use a cache to get fast lookups of elements that are already in it; if the lookup is slow, there is no point in caching. I also tried getAllPresent(a) and asMap(), but they give the same performance.

Library version is: guava-11.0.1.jar

LoadingCache is defined as follows:

    LoadingCache<TileKey, Bitmap> tiles = CacheBuilder.newBuilder()
            .maximumSize(100)
            .build(new CacheLoader<TileKey, Bitmap>() {
                @Override
                public Bitmap load(TileKey tileKey) {
                    System.out.println("Loading in " + Thread.currentThread().getName() + " "
                            + tileKey.x + "-" + tileKey.y);
                    final File[][] tileFiles = surfaceState.mapFile.getBuilding()
                            .getFloors().get(tileKey.floorid)
                            .getBackground(tileKey.zoomid).getTileFiles();
                    String tilePath = tileFiles[tileKey.y][tileKey.x].getAbsolutePath();
                    Options options = new BitmapFactory.Options();
                    options.inPreferredConfig = Bitmap.Config.RGB_565;
                    return BitmapFactory.decodeFile(tilePath, options);
                }
            });

My questions are:

- Am I using it wrong?

- Is its implementation unsuitable for Android?

- Did I miss a configuration option?

- Is this a known issue with the Cache that's being worked on?

Update:

After about 100 frames painted the CacheStats are:

    I/System.out( 6989): CacheStats{hitCount=11992, missCount=97, loadSuccessCount=77, loadExceptionCount=0, totalLoadTime=1402984624, evictionCount=0}

After that, missCount stays basically the same while hitCount keeps incrementing. In this case the cache is big enough that loads happen only rarely, but getIfPresent() is slow nonetheless.
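To put the stats in perspective, here is a small sketch of the arithmetic behind them. The helper class `CacheStatsMath` is hypothetical and only mirrors what I understand CacheStats to report (totalLoadTime is in nanoseconds); the frame count of 100 is my approximation from above, not a measured value.

```java
/** Hypothetical helper; the inputs are the values from the CacheStats log line. */
final class CacheStatsMath {
    /** Fraction of lookups answered from the cache (1.0 if there were no lookups). */
    static double hitRate(long hits, long misses) {
        long requests = hits + misses;
        return requests == 0 ? 1.0 : (double) hits / requests;
    }

    /** Average time per load in milliseconds, given a total in nanoseconds. */
    static double averageLoadMillis(long totalLoadTimeNanos, long loads) {
        return loads == 0 ? 0.0 : totalLoadTimeNanos / 1e6 / loads;
    }

    /** Average number of cache lookups issued per painted frame. */
    static double lookupsPerFrame(long hits, long misses, long frames) {
        return frames == 0 ? 0.0 : (double) (hits + misses) / frames;
    }
}
```

With the logged values this comes to a hit rate of roughly 99%, about 18 ms per load, and, assuming exactly 100 frames, about 120 lookups per frame. So nearly all of the measured time is spent on plain hits, not on loading.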