Your render code is extremely inefficient because it draws every sample, which means 44100 points for each second of audio. Preferably you render at most one column per pixel of viewport width, using a reduced data set.

The number of samples per pixel needed to fit the waveform in the viewport can be calculated with audioDurationSeconds * samplerate / viewPortWidthPx. So for a viewport of 1000px and an audio file of 2 seconds at a 44100 sample rate, the samples per pixel = (2 * 44100) / 1000 = ~88.
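As a quick check of that formula, here is a minimal sketch. The function name `samplesPerPixel` and its parameter names are made up for illustration and are not from your code.

```javascript
// Hypothetical helper: how many audio samples fall under one pixel
// when the whole file has to fit into the viewport.
function samplesPerPixel(audioDurationSeconds, sampleRate, viewPortWidthPx) {
  return (audioDurationSeconds * sampleRate) / viewPortWidthPx;
}

// 2 seconds at 44100 Hz in a 1000px wide viewport, roughly 88 samples per pixel
const perPixel = samplesPerPixel(2, 44100, 1000);
console.log(Math.floor(perPixel));
```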

For each pixel on screen you take the min and max value from that sample range and use this data to draw the waveform.
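A minimal sketch of that reduction step, without scrolling or zooming. The name `reduceToMinMax` is hypothetical, and it assumes `samples` is an array-like of floats in the range -1 to 1 (for example the Float32Array you get from `AudioBuffer.getChannelData`). It returns one `[min, max]` pair per pixel, which is the shape the draw function further down expects.

```javascript
// Sketch: reduce raw samples to one [min, max] pair per horizontal pixel.
// Assumption: `samples` is array-like with values in [-1, 1].
function reduceToMinMax(samples, width) {
  const samplesPerPixel = samples.length / width;
  const drawData = [];
  for (let px = 0; px < width; px++) {
    const start = Math.floor(px * samplesPerPixel);
    // Inspect at least one sample per pixel and never read past the end.
    const end = Math.min(
      samples.length,
      Math.max(start + 1, Math.floor((px + 1) * samplesPerPixel))
    );
    let min = Infinity;
    let max = -Infinity;
    for (let i = start; i < end; i++) {
      if (samples[i] < min) min = samples[i];
      if (samples[i] > max) max = samples[i];
    }
    // With no samples at all, fall back to silence for this pixel.
    drawData.push(start < end ? [min, max] : [0, 0]);
  }
  return drawData;
}
```

This scans every sample once, so it is still linear in the length of the audio, but it only has to run when the data or the viewport width changes, not on every draw.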

Here is an example algorithm that does this, but also lets you pass the samples per pixel as an argument, along with a scroll position, to allow for virtual scrolling and zooming. It includes a resolution parameter you can tweak for performance, which indicates how many samples it should read per pixel sample range:

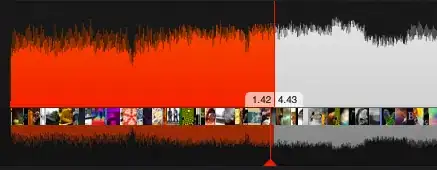

Drawing zoomable audio waveform timeline in Javascript

The draw method there is similar to yours. To smooth it out you need to use lineTo instead of fillRect. That difference shouldn't actually be that big, though, so I think you might be forgetting to set the width and height attributes on the canvas. Setting the size only in CSS causes blurry drawing; you need to set the attributes.

    let drawWaveform = function(canvas, drawData, width, height) {
        let ctx = canvas.getContext('2d');
        let drawHeight = height / 2;

        // clear canvas in case there is already something drawn
        ctx.clearRect(0, 0, width, height);

        ctx.beginPath();

        // first pass: trace the min values, one point per pixel column
        ctx.moveTo(0, drawHeight);
        for (let i = 0; i < width; i++) {
            // transform data point to pixel height and move to centre
            let minPixel = drawData[i][0] * drawHeight + drawHeight;
            ctx.lineTo(i, minPixel);
        }
        ctx.lineTo(width, drawHeight);

        // second pass: trace the max values
        ctx.moveTo(0, drawHeight);
        for (let i = 0; i < width; i++) {
            // transform data point to pixel height and move to centre
            let maxPixel = drawData[i][1] * drawHeight + drawHeight;
            ctx.lineTo(i, maxPixel);
        }
        ctx.lineTo(width, drawHeight);

        ctx.closePath();
        ctx.fill(); // can do ctx.stroke() for an outline of the waveform
    };
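On the blurry drawing: a canvas has a default drawing buffer of 300x150 pixels, and CSS sizing only stretches that bitmap. A rough sketch of setting the attributes from the displayed size follows. The helper name `matchCanvasToDisplaySize` is hypothetical, and it assumes the canvas already has its CSS size applied when it is called.

```javascript
// Hypothetical helper (not from the original code): make the canvas
// drawing buffer match the size the element is displayed at.
function matchCanvasToDisplaySize(canvas) {
  // clientWidth/clientHeight are the CSS layout size in pixels.
  const width = canvas.clientWidth;
  const height = canvas.clientHeight;
  // Assigning width/height clears the canvas, so only do it on a real change.
  if (canvas.width !== width || canvas.height !== height) {
    canvas.width = width;
    canvas.height = height;
  }
  return { width, height };
}
```

The returned width and height are the values you would then pass to drawWaveform.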