I have a VC++ project in Visual Studio 2008.

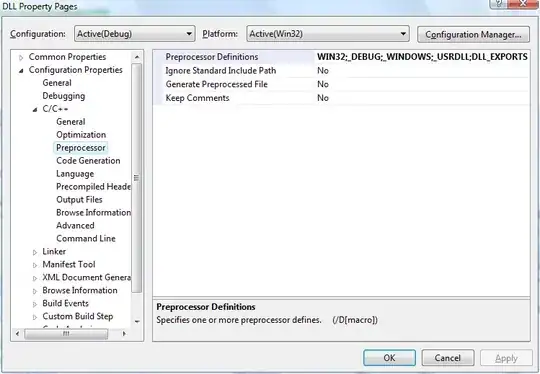

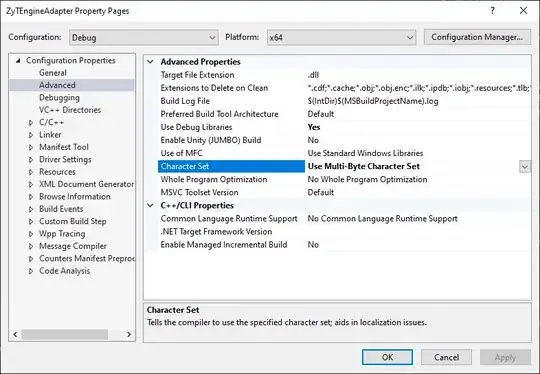

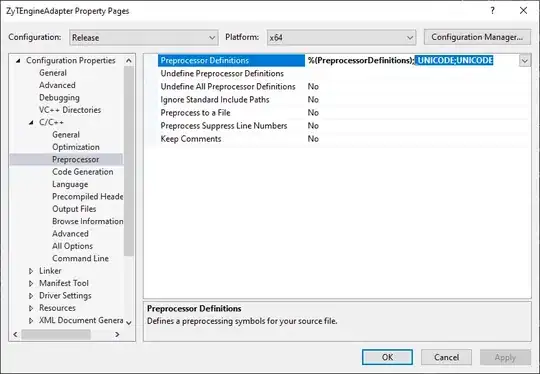

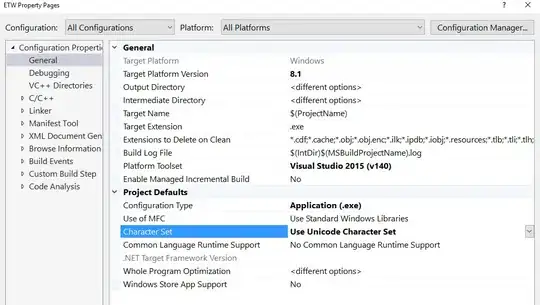

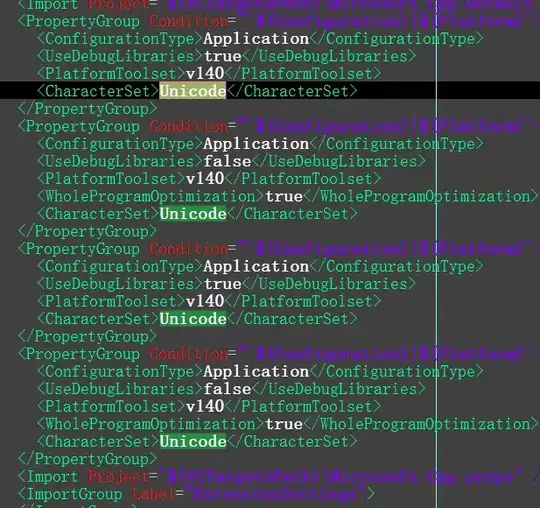

The project is defining the Unicode symbols on the compiler command line (`/D "_UNICODE" /D "UNICODE"`), even though I have not added either symbol in the Preprocessor section of the project properties.

As a result, I am compiling against the Unicode (W) versions of the Win32 API functions rather than the ANSI (A) versions. For example, WinBase.h contains:

```c
#ifdef UNICODE
#define CreateFile CreateFileW
#else
#define CreateFile CreateFileA
#endif // !UNICODE
```

Where is Unicode being turned on in the VC++ project, and how can I turn it off?