A few of the solutions here reference the time taken for various "is even" operations, specifically n % 2 vs n & 1, without systematically checking how this varies with the size of n, which turns out to be predictive of speed.

The short answer is that if you're using reasonably sized numbers, normally < 1e9, it doesn't make much difference. If you're using larger numbers then you probably want to be using the bitwise operator.
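As a quick sanity check (my own sketch, not part of the benchmark), the two tests agree for every Python int, including negative and arbitrary-precision values, so choosing between them is purely a speed question. The helper names is_even_mod and is_even_bit are made up for illustration:

```python
# Hypothetical helper names, used only for this illustration.
def is_even_mod(n):
    return n % 2 == 0


def is_even_bit(n):
    # In Python, & binds tighter than ==; the parentheses are just for clarity.
    return (n & 1) == 0


# Small, negative, and arbitrary-precision values.
samples = [0, 1, 2, 7, -3, -4, 2**30, 10**100, -(10**100) - 1]

# Both tests give the same answer for every sample.
agree = all(is_even_mod(n) == is_even_bit(n) for n in samples)
print(agree)
```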

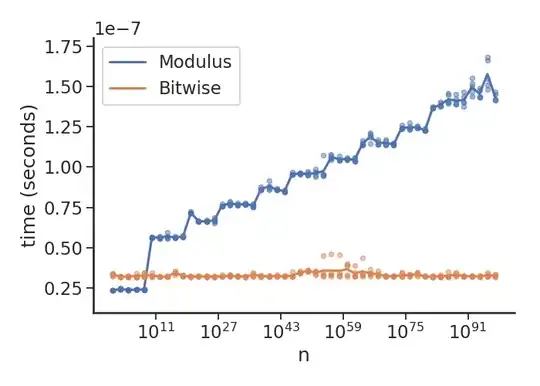

Here's a plot to demonstrate what's going on (with Python 3.7.3, under Linux 5.1.2):

Basically, as you hit "arbitrary precision" longs, things get progressively slower for modulus, while remaining constant for the bitwise op. Also, note the 10**-7 multiplier on the plot, i.e. I can do ~30 million (small integer) checks per second.

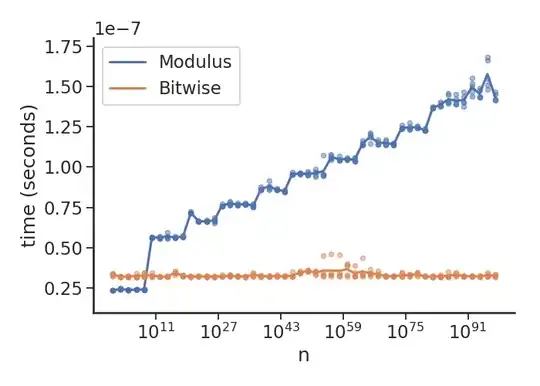

Here's the same plot for Python 2.7.16:

which shows the optimisation that's gone into newer versions of Python.

I've only got these versions of Python on my machine, but could rerun for other versions if there's interest. There are 51 values of n between 1 and 1e100 (evenly spaced on a log scale); for each point I do the equivalent of:

    timeit('n % 2', f'n={n}', number=niter)

where niter is calculated to make timeit take ~0.1 seconds, and this is repeated 5 times. The slightly awkward handling of n (passing it in via the setup string) is to make sure we're not also benchmarking global variable lookup, which is slower than local variable lookup. The mean of these values is used to draw the line, and the individual values are drawn as points.
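For anyone wanting to rerun this on another version, here is a rough sketch of the procedure described above. It is a reconstruction, not the exact script behind the plots: the function names (sample_points, calibrate, bench) and the calibration strategy are my own assumptions, and it needs Python 3.6+ for the f-string.

```python
from timeit import repeat, timeit


def sample_points(count=51, max_exp=100):
    """Integers from 1 to about 1e100, evenly spaced on a log scale."""
    return [int(10 ** (max_exp * i / (count - 1))) for i in range(count)]


def calibrate(stmt, setup, target=0.1):
    """Pick an iteration count so one timeit call takes roughly `target` seconds."""
    number = 1
    while True:
        elapsed = timeit(stmt, setup, number=number)
        if elapsed >= target / 10:
            # Scale up from a run long enough to be measured reliably.
            return max(1, int(number * target / elapsed))
        number *= 10


def bench(stmt, n, repeats=5, target=0.1):
    """Return per-operation times (seconds) for `stmt`, one per repeat."""
    # n is defined in the setup string so the timed statement does not
    # look it up as a global of the calling module.
    setup = f"n={n}"
    niter = calibrate(stmt, setup, target)
    return [t / niter for t in repeat(stmt, setup, repeat=repeats, number=niter)]


if __name__ == "__main__":
    for n in sample_points():
        mod = bench("n % 2", n)
        bit = bench("n & 1", n)
        # Mean per-operation time for each approach at this n.
        print(f"{float(n):.0e}  {sum(mod) / len(mod):.3e}  {sum(bit) / len(bit):.3e}")
```

The full run takes a minute or two at the default settings; passing a smaller target to bench trades accuracy for speed.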