I have an issue on a customer site where lines containing words like "HabitaþÒo" get mangled on output. I'm processing a text file, pulling out selected lines and writing them to another file.

For diagnosis I've boiled the problem down to a file with just that bad word.

The original file contains no BOM, so .NET reads it as UTF-8.

After it is read and written, the word ends up looking like this: "Habita��o".

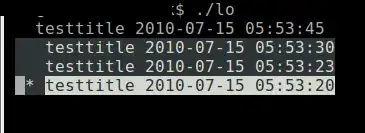
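One hypothesis worth checking (an assumption, not confirmed by anything above): the intended word is "Habitação", stored in a single-byte code page where ç is 0xE7 and ã is 0xE3, as in ISO-8859-1 and Windows-1252. Those same two bytes display as "þÒ" in the DOS code page 850, which would explain "HabitaþÒo". In UTF-8 both bytes are lead bytes that are not followed by continuation bytes, so decoding as UTF-8 replaces each one with U+FFFD, the "�" character. A minimal sketch of that decode (the class and method names are illustrative only):

```csharp
using System;
using System.Text;

static class Utf8ReplacementDemo
{
    // Declared first so it is initialised before SampleBytes uses it.
    static readonly Encoding Latin1 = Encoding.GetEncoding("iso-8859-1");

    // Assumption: the file holds "Habitação" as single-byte text,
    // i.e. ç = 0xE7 and ã = 0xE3 (same in ISO-8859-1 and Windows-1252).
    public static readonly byte[] SampleBytes = Latin1.GetBytes("Habita\u00E7\u00E3o");

    // Decode as StreamReader does when no BOM is present: UTF-8 with
    // replacement fallback, so invalid sequences become U+FFFD.
    public static string DecodeUtf8(byte[] bytes) => Encoding.UTF8.GetString(bytes);

    // Decode with the matching single-byte encoding instead.
    public static string DecodeLatin1(byte[] bytes) => Latin1.GetString(bytes);

    static void Main()
    {
        // Contains "Habita\uFFFD\uFFFDo": both bytes were replaced.
        Console.WriteLine(DecodeUtf8(SampleBytes));
        // Contains "Habita\u00E7\u00E3o": the original text survives.
        Console.WriteLine(DecodeLatin1(SampleBytes));
    }
}
```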

A hex dump of the BadWord.txt file looks like this:
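For anyone wanting to reproduce the dump, here is a minimal sketch that prints a file's raw bytes as hex using only `File.ReadAllBytes` and `BitConverter.ToString` (the class name `HexDump` is just for illustration):

```csharp
using System;
using System.IO;

static class HexDump
{
    // Formats bytes as "48-61-62-..." (uppercase, hyphen-separated).
    public static string ToHex(byte[] bytes) => BitConverter.ToString(bytes);

    static void Main(string[] args)
    {
        if (args.Length == 0)
        {
            Console.WriteLine("usage: HexDump <path>");
            return;
        }

        // Read the file as raw bytes so no decoding can alter what we see.
        byte[] bytes = File.ReadAllBytes(args[0]);
        Console.WriteLine(ToHex(bytes));
    }
}
```

Run it as `HexDump C:\BadWord.txt`. In a UTF-8 file, "ç" and "ã" would appear as the byte pairs `C3-A7` and `C3-A3`; single bytes such as `E7` or `E3` in their place would point to a single-byte code page instead.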

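Copying the file with this code:

```csharp
// No encoding given: StreamReader uses a BOM if present, otherwise UTF-8.
// StreamWriter without an encoding argument writes UTF-8.
using (var reader = new StreamReader(@"C:\BadWord.txt"))
using (var writer = new StreamWriter(@"C:\BadWordReadAndWritten.txt"))
{
    writer.WriteLine(reader.ReadLine());
}
```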

...gives "Habita��o".

Preserving the reader's encoding doesn't help either:

```csharp
// reader.CurrentEncoding is read before any ReadLine call, so it is
// still the reader's default (UTF-8).
using (var reader = new StreamReader(@"C:\BadWord.txt"))
using (var writer = new StreamWriter(@"C:\BadWordReadAndWritten_PreseveEncoding.txt", false, reader.CurrentEncoding))
{
    writer.WriteLine(reader.ReadLine());
}
```

...gives the same mangled word.
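Passing `reader.CurrentEncoding` only gives the writer the encoding the reader chose, which here is UTF-8. Any bytes that were not valid UTF-8 have already become U+FFFD by the time the line reaches the writer. Below is a sketch that names the encoding explicitly on both sides, assuming the file is really ISO-8859-1 or Windows-1252 (not confirmed; the hex dump should settle it). `Latin1Copy` and `CopyLines` are hypothetical names:

```csharp
using System;
using System.IO;
using System.Text;

static class Latin1Copy
{
    // Assumption: the input is single-byte text. ISO-8859-1 maps every byte
    // 0x00-0xFF to U+0000-U+00FF and back, so bytes pass through unchanged.
    static readonly Encoding Latin1 = Encoding.GetEncoding("iso-8859-1");

    public static void CopyLines(string sourcePath, string destPath)
    {
        using (var reader = new StreamReader(sourcePath, Latin1))
        using (var writer = new StreamWriter(destPath, false, Latin1))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
            {
                // The real "selected lines" filter would go here.
                writer.WriteLine(line);
            }
        }
    }

    static void Main(string[] args)
    {
        if (args.Length == 2)
            CopyLines(args[0], args[1]);
        else
            Console.WriteLine("usage: Latin1Copy <source> <destination>");
    }
}
```

Because ISO-8859-1 round-trips all 256 byte values, the output bytes match the input even if the file is actually Windows-1252; only characters in the 0x80 to 0x9F range (such as "€") would be misidentified in memory. On .NET Core, `Encoding.GetEncoding(1252)` additionally requires registering `CodePagesEncodingProvider`.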

Any ideas what's going on here? How can I process this file and preserve the original text?