I have this C struct, which represents an IP datagram header:

    struct ip_dgram
    {
        unsigned int ver   : 4;
        unsigned int hlen  : 4;
        unsigned int stype : 8;
        unsigned int tlen  : 16;
        unsigned int fid   : 16;
        unsigned int flags : 3;
        unsigned int foff  : 13;
        unsigned int ttl   : 8;
        unsigned int pcol  : 8;
        unsigned int chksm : 16;
        unsigned int src   : 32;
        unsigned int des   : 32;
        unsigned char opt[40];
    };

I'm assigning values to it, and then printing its memory layout in 16-bit words like this:

    #include <stdio.h>
    #include <arpa/inet.h>  /* htonl, inet_addr */

    //prints 16 bits at a time
    void print_dgram(struct ip_dgram dgram)
    {
        unsigned short int* ptr = (unsigned short int*)&dgram;
        int i,j;

        //print only 10 words
        for(i=0 ; i<10 ; i++)
        {
            for(j=15 ; j>=0 ; j--)
            {
                if( (*ptr) & (1<<j) ) printf("1");
                else printf("0");
                if(j%8==0)printf(" ");
            }
            ptr++;
            printf("\n");
        }
    }

    int main()
    {
        struct ip_dgram dgram;

        dgram.ver   = 4;
        dgram.hlen  = 5;
        dgram.stype = 0;
        dgram.tlen  = 28;
        dgram.fid   = 1;
        dgram.flags = 0;
        dgram.foff  = 0;
        dgram.ttl   = 4;
        dgram.pcol  = 17;
        dgram.chksm = 0;
        dgram.src   = (unsigned int)htonl(inet_addr("10.12.14.5"));
        dgram.des   = (unsigned int)htonl(inet_addr("12.6.7.9"));

        print_dgram(dgram);

        return 0;
    }

I get this output:

    00000000 01010100
    00000000 00011100
    00000000 00000001
    00000000 00000000
    00010001 00000100
    00000000 00000000
    00001110 00000101
    00001010 00001100
    00000111 00001001
    00001100 00000110

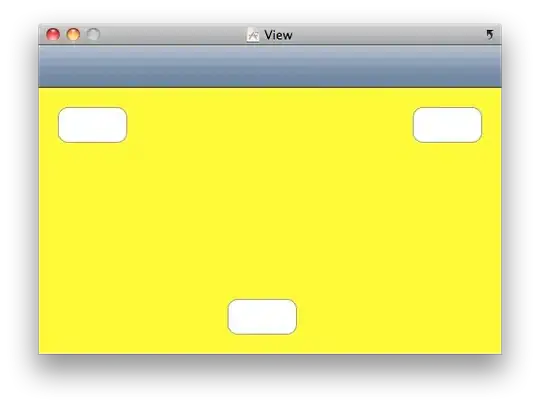

But I expect this:

The output is partially correct, but some bytes and nibbles appear to be swapped. Is this an endianness issue? Are bit-fields unsuitable for this purpose? I really don't know. Any help would be appreciated. Thanks in advance!
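For comparison, here is a minimal sketch of an approach that does not depend on bit-field layout. The C standard leaves the order in which bit-fields are allocated within a storage unit implementation-defined, and multi-byte values are stored according to host byte order, so both could plausibly contribute to the swapped nibbles and bytes above. The sketch instead writes each field into a byte buffer with explicit shifts, most significant byte first. The names `ip_fields`, `put16`, `put32`, and `pack_ip_header` are hypothetical and not part of the code above, and the addresses are assumed to be given as host-order integers (for example `0x0A0C0E05` for 10.12.14.5).

```c
#include <stdint.h>

/* Hypothetical plain-field version of the header (no bit-fields). */
struct ip_fields {
    uint8_t  ver, hlen, stype;
    uint16_t tlen, fid;
    uint8_t  flags;
    uint16_t foff;
    uint8_t  ttl, pcol;
    uint16_t chksm;
    uint32_t src, des;   /* assumed to be host-order integers */
};

/* Store a 16-bit value most significant byte first. */
static void put16(uint8_t *p, uint16_t v)
{
    p[0] = (uint8_t)(v >> 8);
    p[1] = (uint8_t)(v & 0xFFu);
}

/* Store a 32-bit value most significant byte first. */
static void put32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)((v >> 16) & 0xFFu);
    p[2] = (uint8_t)((v >> 8) & 0xFFu);
    p[3] = (uint8_t)(v & 0xFFu);
}

/* Serialize the fixed 20-byte part of the header (options omitted). */
void pack_ip_header(const struct ip_fields *f, uint8_t buf[20])
{
    /* Version goes in the high nibble, header length in the low nibble. */
    buf[0] = (uint8_t)(((f->ver & 0x0Fu) << 4) | (f->hlen & 0x0Fu));
    buf[1] = f->stype;
    put16(buf + 2, f->tlen);
    put16(buf + 4, f->fid);
    /* 3 flag bits on top, 13-bit fragment offset below. */
    put16(buf + 6, (uint16_t)(((f->flags & 0x07u) << 13) | (f->foff & 0x1FFFu)));
    buf[8] = f->ttl;
    buf[9] = f->pcol;
    put16(buf + 10, f->chksm);
    put32(buf + 12, f->src);
    put32(buf + 16, f->des);
}
```

With the values from `main` above, the first byte of `buf` would be `0x45` (version 4, header length 5) on any host, and printing `buf` byte by byte sidesteps the cast to `unsigned short int*` as well.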