The short way

If the number sampled is much less than the population, just sample, check whether it's been chosen, and repeat while it has. This might sound silly, but the probability of repeatedly hitting an already-chosen number decays exponentially, so it's much faster than O(n) if even a small percentage remains unchosen.
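A minimal sketch of the short way, using a `set` to remember what has been picked; the function name `rejection_sample` is hypothetical. If a fraction f of the population has already been picked, each draw is a repeat with probability f, so needing r or more retries has probability f^r.

```python
from random import randrange

def rejection_sample(population_size, k):
    """Draw k distinct integers from range(population_size), retrying repeats."""
    if not 0 <= k <= population_size:
        raise ValueError("k must be between 0 and population_size")
    chosen = set()
    result = []
    while len(result) < k:
        candidate = randrange(population_size)
        if candidate not in chosen:  # a repeat is simply thrown away and redrawn
            chosen.add(candidate)
            result.append(candidate)
    return result
```

For k close to population_size the retries pile up (each new pick gets harder to find), which is the case the long way targets.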

The long way

Python uses a Mersenne Twister as its PRNG, which is adequate. We can use something else entirely to generate non-overlapping numbers in a predictable manner.

Here's the secret:

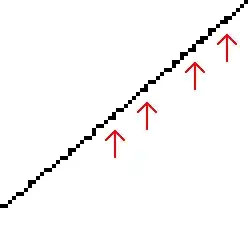

Quadratic residues, x² mod p, are unique when 2x < p and p is a prime.

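If you "flip" the residue to p - (x² % p) for the upper half (2x > p), and additionally require p ≡ 3 (mod 4), the results fill the remaining spaces: -1 is then a quadratic non-residue, so the flipped values are exactly the non-residues.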
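A small check of that claim, with hypothetical helper names: for primes p ≡ 3 (mod 4), squaring the lower half of [0, p) and flipping the upper half hits every value exactly once, while for p ≡ 1 (mod 4) it does not, because -1 is then itself a square and the flipped values collide with the unflipped ones.

```python
def flipped_square(x, p):
    """x² mod p for the lower half of [0, p), p - (x² mod p) for the upper half."""
    r = x * x % p
    return r if 2 * x <= p else p - r

def is_permutation(p):
    """True if flipped_square hits every value in [0, p) exactly once."""
    return sorted(flipped_square(x, p) for x in range(p)) == list(range(p))

# Primes with p % 4 == 3: a permutation every time.
print([p for p in (3, 7, 11, 19, 23, 31) if is_permutation(p)])  # [3, 7, 11, 19, 23, 31]
# Primes with p % 4 == 1: never a permutation.
print([p for p in (5, 13, 17, 29) if is_permutation(p)])  # []
```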

This isn't a very convincing numeric spread, so you can increase the power, add some fudge constants and then the distribution is pretty good.
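Why the extra fudge keeps the map a permutation, as a sketch: when p ≡ 3 (mod 4) the nonzero squares form a group of odd order (p - 1)/2, so squaring permutes them, and x^(2^k) therefore still hits each square exactly once on the lower half. Adding a constant and multiplying by a nonzero constant mod p are bijections too, so the composition is one. The helper `make_permutation` and the prime 103 are illustrative choices; the random ranges mirror the ones used by the generator in this answer.

```python
from random import randrange

def make_permutation(p, power, constant, factor):
    """Return x -> permute((permute(x) + constant) * factor mod p).

    Assumes p is prime with p % 4 == 3, power is a power of two and
    factor % p != 0; under those assumptions each step is a bijection on range(p).
    """
    def permute(x):
        permuted = pow(x, power, p)
        return permuted if 2 * x <= p else p - permuted

    def composed(x):
        res = (permute(x) + constant) % p
        return permute(res * factor % p)

    return composed

p = 103  # prime, and 103 % 4 == 3
for _ in range(20):
    f = make_permutation(p, 2 ** randrange(7, 11),
                         randrange(p // 2, p), randrange(p // 2, p))
    # Every value in range(p) comes out exactly once.
    assert sorted(f(x) for x in range(p)) == list(range(p))
print("all 20 random fudge choices gave permutations")
```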

First we need to generate primes:

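```python
from itertools import count
from math import ceil
from random import randrange

def modprime_at_least(number):
    """Return the smallest prime p >= number with p % 4 == 3 (2 for inputs <= 2)."""
    if number <= 2:
        return 2

    # Round up to the next number that is 3 mod 4, then step by 4.
    number = (number // 4 * 4) + 3
    for number in count(number, 4):
        # Trial division by odd factors; number is always odd here.
        if all(number % factor for factor in range(3, ceil(number ** 0.5)+1, 2)):
            return number
```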

You might worry about the cost of generating the primes. For 10⁶ elements this takes a tenth of a millisecond. Running [None] * 10**6 takes longer than that, and since it's only calculated once, this isn't a real problem.

Further, the algorithm doesn't need an exact value for the prime; it only needs something that is at most a constant factor larger than the input number. This is possible by caching a list of such primes (say, one per power of two) and searching it. If you do a linear scan, that is O(log number), and if you do a binary search it is O(log number of cached primes). In fact, if you use galloping you can bring this down to O(log log number), which is basically constant (log log googol = 2).
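A sketch of the cached variant, assuming one prime p ≡ 3 (mod 4) is stored per power of two up to 2**32; `is_prime`, `first_3mod4_prime_at_least`, `PRIME_CACHE` and `cached_modprime_at_least` are hypothetical names. It uses a plain binary search via the standard `bisect` module rather than galloping, returns 3 rather than 2 for tiny inputs, and raises IndexError above the last cached prime.

```python
from bisect import bisect_left
from math import isqrt

def is_prime(n):
    """Trial division; fine for the one-off cache build below."""
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    return all(n % f for f in range(3, isqrt(n) + 1, 2))

def first_3mod4_prime_at_least(n):
    """Smallest prime p >= n with p % 4 == 3."""
    p = max(3, n // 4 * 4 + 3)
    while not is_prime(p):
        p += 4
    return p

# Hypothetical cache: one such prime per power of two, built once
# (or hard-coded as a literal list).
PRIME_CACHE = [first_3mod4_prime_at_least(2 ** k) for k in range(1, 33)]

def cached_modprime_at_least(number):
    """Binary search the cache; the result is within a small constant factor of number."""
    return PRIME_CACHE[bisect_left(PRIME_CACHE, number)]
```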

Then we implement the generator:

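```python
def sample_generator(up_to):
    """Yield every integer in range(up_to) exactly once, in a scrambled order."""
    prime = modprime_at_least(up_to+1)

    # Fudge to make it less predictable
    fudge_power = 2**randrange(7, 11)
    fudge_constant = randrange(prime//2, prime)
    fudge_factor = randrange(prime//2, prime)

    def permute(x):
        # x ** (2**k) on the lower half, flipped on the upper half; a bijection
        # on range(prime) for the primes modprime_at_least returns.
        permuted = pow(x, fudge_power, prime)
        return permuted if 2*x <= prime else prime - permuted

    for x in range(prime):
        # Each step is a bijection on range(prime), so no value repeats.
        res = (permute(x) + fudge_constant) % prime
        res = permute((res * fudge_factor) % prime)
        # Skip the values that fall between up_to and prime.
        if res < up_to:
            yield res
```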

And check that it works:

```python
set(sample_generator(10000)) ^ set(range(10000))
#>>> set()
```

Now, the lovely thing about this is that if you ignore the primality test, which is approximately O(√n) where n is the number of elements, this algorithm has time complexity O(k), where k is the sample size, and O(1) memory usage! Technically this is O(√n + k), but practically it is O(k).

Requirements:

- You do not require a proven PRNG. This PRNG is far better than a linear congruential generator (which is popular; Java uses it), but it's not as proven as a Mersenne Twister.
- You do not first generate any items with a different function. This avoids duplicates through mathematics, not checks. In the next section I show how to remove this restriction.
- The short method must be insufficient (k must approach n). If k is only half of n, just go with my original suggestion.

Advantages:

- Extreme memory savings. This takes constant memory... not even O(k)!
- Constant time to generate the next item. This is actually rather fast in constant terms, too: it's not as fast as the built-in Mersenne Twister, but it's within a factor of 2.
- Coolness.

To remove this requirement:

You do not first generate any items with a different function. This avoids duplicates through mathematics, not checks.

I have made the best possible algorithm in time and space complexity, which is a simple extension of my previous generator.

Here's the rundown (n is the length of the pool of numbers, k is the number of "foreign" keys):

Initialisation time O(√n); O(log log n) for all reasonable inputs

This is the only factor of my algorithm that technically isn't perfect with regard to algorithmic complexity, thanks to the O(√n) cost. In reality this won't be problematic because precalculation brings it down to O(log log n), which is immeasurably close to constant time.

The cost amortizes to nothing if you consume any fixed percentage of the iterable.
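To make the amortization concrete, a back-of-the-envelope sketch treating f as the fixed fraction of the n items that actually gets consumed:

$$
\frac{O(\sqrt{n}) + O(f n)}{f n} = O\!\left(\frac{1}{f\sqrt{n}}\right) + O(1) = O(1) \text{ per item, for any fixed } f > 0.
$$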

This is not a practical problem.

Amortized O(1) key generation time

Obviously this cannot be improved upon.

Worst-case O(k) key generation time

If you have keys generated from the outside, with only the requirement that it must not be a key that this generator has already produced, these are to be called "foreign keys". Foreign keys are assumed to be totally random. As such, any function that is able to select items from the pool can do so.

Because there can be any number of foreign keys and they can be totally random, the worst case for a perfect algorithm is O(k).

Worst-case space complexity O(k)

If the foreign keys are assumed totally independent, each represents a distinct item of information. Hence all keys must be stored. The algorithm discards each key as soon as it reaches it, so the memory cost clears over the lifetime of the generator.

The algorithm

Well, it's both of my algorithms. It's actually quite simple:

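```python
def sample_generator(up_to, previously_chosen=None, *, prune=True):
    """Like sample_generator above, but skips keys in previously_chosen.

    previously_chosen may be mutated while iterating; with prune=True each
    key is removed from it once the generator has stepped over it.
    """
    if previously_chosen is None:
        previously_chosen = set()  # avoid a shared mutable default

    prime = modprime_at_least(up_to+1)

    # Fudge to make it less predictable
    fudge_power = 2**randrange(7, 11)
    fudge_constant = randrange(prime//2, prime)
    fudge_factor = randrange(prime//2, prime)

    def permute(x):
        permuted = pow(x, fudge_power, prime)
        return permuted if 2*x <= prime else prime - permuted

    for x in range(prime):
        res = (permute(x) + fudge_constant) % prime
        res = permute((res * fudge_factor) % prime)
        if res in previously_chosen:
            # A foreign key: skip it, and optionally forget it to free memory.
            if prune:
                previously_chosen.remove(res)
        elif res < up_to:
            yield res
```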

The change is as simple as adding:

```python
if res in previously_chosen:
    previously_chosen.remove(res)
```

You can add to previously_chosen at any time by adding to the set that you passed in. In fact, you can also remove from the set in order to add back to the potential pool, although this will only work if sample_generator has not yet yielded it or skipped it with prune=False.

So there it is. It's easy to see that it fulfils all of the requirements, and that the requirements are absolute. Note that if you don't have a set, converting your input to one still meets the worst cases, although it increases overhead.

Testing the RNG's quality

I became curious how good this PRNG actually is, statistically speaking.

Some quick searches led me to create these three tests, which all seem to show good results!

Firstly, some random numbers:

```python
import random

N = 1000000
my_gen = list(sample_generator(N))

target = list(range(N))
random.shuffle(target)

control = list(range(N))
random.shuffle(control)
```

These are "shuffled" lists of 10⁶ numbers from 0 to 10⁶-1: the first uses our fun fudged PRNG, the second uses a Mersenne Twister as a baseline, and the third is the control.

Here's a test which looks at the distance between consecutive numbers, bucketed into bins of 10000, and compares the resulting histograms; both our generator and the control are compared against the target:

```python
from collections import Counter

def birthday_calc(randoms):
    # Histogram of gaps between consecutive values, in buckets of 10000.
    return Counter(abs(r1-r2)//10000 for r1, r2 in zip(randoms, randoms[1:]))

def birthday_compare(randoms_1, randoms_2):
    birthday_1 = birthday_calc(randoms_1)
    birthday_2 = birthday_calc(randoms_2)
    # Compare bucket by bucket, so a bucket missing on one side still counts.
    keys = sorted(birthday_1.keys() | birthday_2.keys())
    return sum(abs(birthday_1[key] - birthday_2[key]) for key in keys)

print(birthday_compare(my_gen, target), birthday_compare(control, target))
#>>> 9514 10136
```

This is less than the variance of each.

Here's a test which takes 5 numbers in turn and sees what order the elements are in. They should be evenly distributed between all 120 possible orders.

```python
def permutations_calc(randoms):
    # Count the relative order within each consecutive, non-overlapping group of 5.
    permutations = Counter()
    for items in zip(*[iter(randoms)]*5):
        sorteditems = sorted(items)
        permutations[tuple(sorteditems.index(item) for item in items)] += 1
    return permutations

def permutations_compare(randoms_1, randoms_2):
    permutations_1 = permutations_calc(randoms_1)
    permutations_2 = permutations_calc(randoms_2)
    keys = sorted(permutations_1.keys() | permutations_2.keys())
    return sum(abs(permutations_1[key] - permutations_2[key]) for key in keys)

print(permutations_compare(my_gen, target), permutations_compare(control, target))
#>>> 5324 5368
```

This is again less than the variance of each.

Here's a test that sees how long "runs" are, i.e. sections of consecutive increases or decreases.

```python
def runs_calc(randoms):
    # Histogram of run lengths: stretches that keep rising (or keep falling).
    runs = Counter()
    run = 0
    for item in randoms:
        if run == 0:
            run = 1
        elif run == 1:
            run = 2
            increasing = item > last
        else:
            if (item > last) == increasing:
                run += 1
            else:
                runs[run] += 1
                run = 0
        last = item
    return runs

def runs_compare(randoms_1, randoms_2):
    runs_1 = runs_calc(randoms_1)
    runs_2 = runs_calc(randoms_2)
    keys = sorted(runs_1.keys() | runs_2.keys())
    return sum(abs(runs_1[key] - runs_2[key]) for key in keys)

print(runs_compare(my_gen, target), runs_compare(control, target))
#>>> 1270 975
```

The variance here is very large, and over several executions I have seen an even-ish spread of both. As such, this test is passed.

A Linear Congruential Generator was mentioned to me, as possibly "more fruitful". I have made a badly implemented LCG of my own, to see whether this is an accurate statement.

LCGs, AFAICT, are like normal generators in that they're not made to be cyclic. Therefore most references I looked at, i.e. Wikipedia, covered only what defines the period, not how to make a strong LCG of a specific period. This may have affected results.

Here goes:

```python
from itertools import count
from operator import mul
from functools import reduce
from random import randrange

# Credit http://stackoverflow.com/a/16996439/1763356
# Meta: Also Tobias Kienzler seems to have credit for my
# edit to the post, what's up with that?
def factors(n):
    """Yield the prime factors of n, with repetition."""
    d = 2
    while d**2 <= n:
        while not n % d:
            yield d
            n //= d
        d += 1
    if n > 1:
        yield n

def sample_generator3(up_to):
    # Find the smallest modulier >= up_to that admits a full-period multiplier.
    for modulier in count(up_to):
        modulier_factors = set(factors(modulier))
        # multiplier - 1 must be divisible by every prime factor (and by 4 if modulier is).
        multiplier = reduce(mul, modulier_factors, 1)
        if not modulier % 4:
            multiplier *= 2
        if multiplier < modulier - 1:
            multiplier += 1
            break

    x = randrange(0, up_to)

    # fudge_constant must be coprime with modulier; starting from 1 keeps
    # the loop below from spinning forever on 0.
    fudge_constant = randrange(1, modulier)
    for modfact in modulier_factors:
        while not fudge_constant % modfact:
            fudge_constant //= modfact

    for _ in range(modulier):
        if x < up_to:
            yield x
        x = (x * multiplier + fudge_constant) % modulier
```

We no longer check for primes, but we do need to do some odd things with factors.

- modulier ≥ up_to, multiplier < modulier and fudge_constant > 0
- multiplier - 1 must be divisible by every prime factor of modulier, and by 4 if modulier is divisible by 4
- fudge_constant must be coprime with modulier

Note that these aren't rules for an LCG but for an LCG with full period, which is obviously equal to the modulier.
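The conditions above are the Hull-Dobell theorem for full-period LCGs. As a sketch (the helper names are hypothetical, and `prime_factors` duplicates the `factors` helper above so the block stands alone), they can be checked directly and cross-checked by brute force on small moduli:

```python
from math import gcd

def prime_factors(n):
    """Distinct prime factors of n by trial division."""
    result, d = set(), 2
    while d * d <= n:
        while n % d == 0:
            result.add(d)
            n //= d
        d += 1
    if n > 1:
        result.add(n)
    return result

def has_full_period(modulus, multiplier, increment):
    """Hull-Dobell conditions for x -> (multiplier * x + increment) % modulus."""
    return (
        gcd(increment, modulus) == 1
        and all((multiplier - 1) % q == 0 for q in prime_factors(modulus))
        and (modulus % 4 != 0 or (multiplier - 1) % 4 == 0)
    )

def has_full_period_brute_force(modulus, multiplier, increment):
    """Step `modulus` times from 0; full period means every state appears and we return to 0."""
    x, seen = 0, set()
    for _ in range(modulus):
        seen.add(x)
        x = (multiplier * x + increment) % modulus
    return len(seen) == modulus and x == 0

# 100 = 2**2 * 5**2, so multiplier - 1 must be a multiple of 2, 5 and 4.
print(has_full_period(100, 21, 3), has_full_period_brute_force(100, 21, 3))  # True True
print(has_full_period(100, 11, 3), has_full_period_brute_force(100, 11, 3))  # False False
```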

I did it as such:

- Try every modulier at least up_to, stopping when the conditions are satisfied:
  - Make a set of its factors, modulier_factors.
  - Let multiplier be the product of modulier_factors (doubled if modulier is divisible by 4), plus 1.
  - If multiplier is not less than modulier, continue with the next modulier.
- Let fudge_constant be a positive number less than modulier, chosen randomly.
- Remove the factors from fudge_constant that are in modulier_factors.

This is not a very good way of generating it, but I don't see why it would ever impinge on the quality of the numbers, aside from the fact that low values of fudge_constant and multiplier are more common than a perfect generator for these would make them.

Anyhow, the results are appalling:

```python
lcg = list(sample_generator3(N))

print(birthday_compare(lcg, target), birthday_compare(control, target))
#>>> 22532 10650

print(permutations_compare(lcg, target), permutations_compare(control, target))
#>>> 17968 5820

print(runs_compare(lcg, target), runs_compare(control, target))
#>>> 8320 662
```

In summary, my RNG is good and a linear congruential generator is not. Considering that Java gets away with a linear congruential generator (although it only uses the upper bits of its state), I would expect my version to be more than sufficient.