I am working on performance of paralle algorithm on Multicore machine. I did an experiment on Matrix Multiplication with loop reordering (ikj) technique.

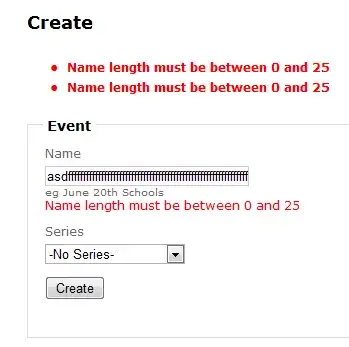

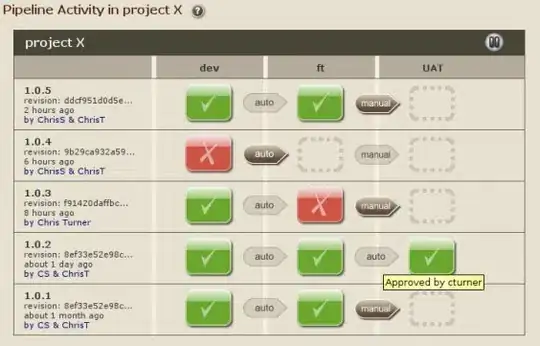

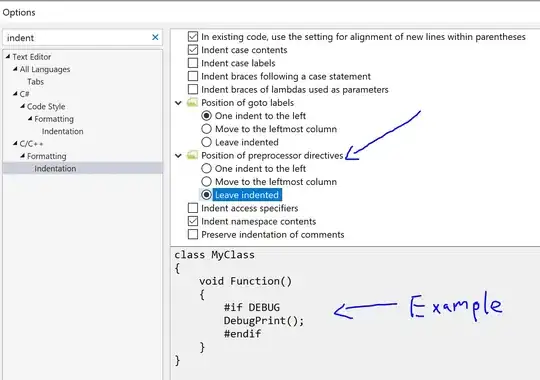

The serial execution result is as in images below.L1 data cache hit for loop order ikj and kij for all size of nXn matrix is near 100% (Image 1 box number 1 & 2) and as you can see loop order ikj in size 2048 and 4096 suddenly L2 data cach hit decrease by %50 (Image 2 box number 1 & 2) also in L2 instruction cache hit the same is true. Incase where L1 data cache hit for these 2 size are like other sizes(256,512,1024) is about %100. I could not find any resonable reason for this slope in both Instruction and data cache hit. could any one give me clue on how to find the reason(s)?

do you think that L2 unified cache has any effect on exacerbating the problem? But still what causes this reduction what characteristic of algorithm and performance should I profile to find reason.

experimental machine is Intel e4500 with 2Mb L2 cache,cache line 64, os is fedora 17 x64 with gcc 4.7 -o no compiler optimization

Abridged & Complete Question?

my problem is that why sudden decrease of about 50% in both L2 data and instruction cache happens in only ikj & kij algorithm as it's boxed and numbered 1 & 2 in images, but not in other loop variation?

*Image 1*

*Image 2*

*Image 3*

*Image 4*

*Image 5*

Despite the aformentioned problem there is no increase in timing of ikj&kij algorithm. But also is faster than others.

ikj and kij algorithm are two variation of loop reordering technique/

kij Algorithm

For (k=0;k<n;k++)

For(i=0;i<n;i++){

r=A[i][k];

For (j=0;j<n;j++)

C[i][j]+=r*B[k][j]

}

ikj Algorithm

For (i=0;i<n;i++)

For(k=0;k<n;k++){

r=A[i][k];

For (j=0;j<n;j++)

C[i][j]+=r*B[k][j]

}

thanks