Problem Statement

We have an array of values, vals and runlengths, runlens:

vals = [1,3,2,5]

runlens = [2,2,1,3]

We are needed to repeat each element in vals times each corresponding element in runlens. Thus, the final output would be:

output = [1,1,3,3,2,5,5,5]

Prospective Approach

One of the fastest tools with MATLAB is cumsum and is very useful when dealing with vectorizing problems that work on irregular patterns. In the stated problem, the irregularity comes with the different elements in runlens.

Now, to exploit cumsum, we need to do two things here: Initialize an array of zeros and place "appropriate" values at "key" positions over the zeros array, such that after "cumsum" is applied, we would end up with a final array of repeated vals of runlens times.

Steps: Let's number the above mentioned steps to give the prospective approach an easier perspective:

1) Initialize zeros array: What must be the length? Since we are repeating runlens times, the length of the zeros array must be the summation of all runlens.

2) Find key positions/indices: Now these key positions are places along the zeros array where each element from vals start to repeat.

Thus, for runlens = [2,2,1,3], the key positions mapped onto the zeros array would be:

[X 0 X 0 X X 0 0] % where X's are those key positions.

3) Find appropriate values: The final nail to be hammered before using cumsum would be to put "appropriate" values into those key positions. Now, since we would be doing cumsum soon after, if you think closely, you would need a differentiated version of values with diff, so that cumsum on those would bring back our values. Since these differentiated values would be placed on a zeros array at places separated by the runlens distances, after using cumsum we would have each vals element repeated runlens times as the final output.

Solution Code

Here's the implementation stitching up all the above mentioned steps -

% Calculate cumsumed values of runLengths.

% We would need this to initialize zeros array and find key positions later on.

clens = cumsum(runlens)

% Initalize zeros array

array = zeros(1,(clens(end)))

% Find key positions/indices

key_pos = [1 clens(1:end-1)+1]

% Find appropriate values

app_vals = diff([0 vals])

% Map app_values at key_pos on array

array(pos) = app_vals

% cumsum array for final output

output = cumsum(array)

Pre-allocation Hack

As could be seen that the above listed code uses pre-allocation with zeros. Now, according to this UNDOCUMENTED MATLAB blog on faster pre-allocation, one can achieve much faster pre-allocation with -

array(clens(end)) = 0; % instead of array = zeros(1,(clens(end)))

Wrapping up: Function Code

To wrap up everything, we would have a compact function code to achieve this run-length decoding like so -

function out = rle_cumsum_diff(vals,runlens)

clens = cumsum(runlens);

idx(clens(end))=0;

idx([1 clens(1:end-1)+1]) = diff([0 vals]);

out = cumsum(idx);

return;

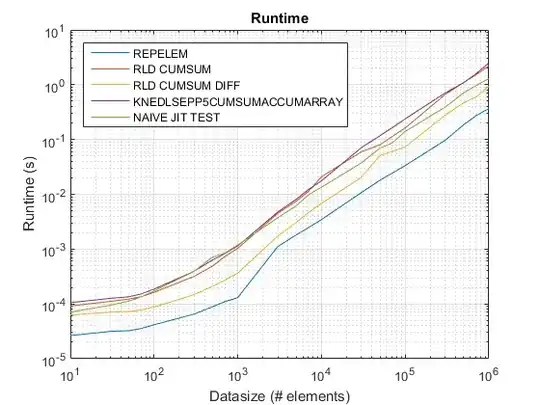

Benchmarking

Benchmarking Code

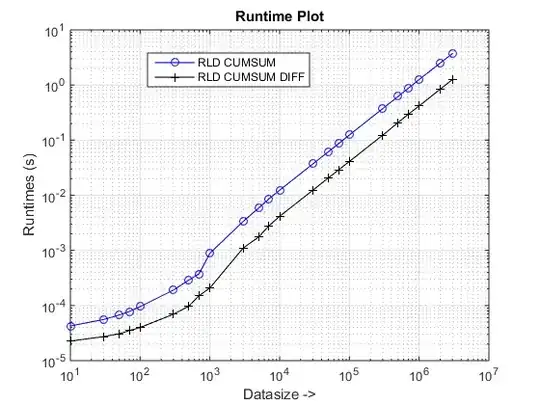

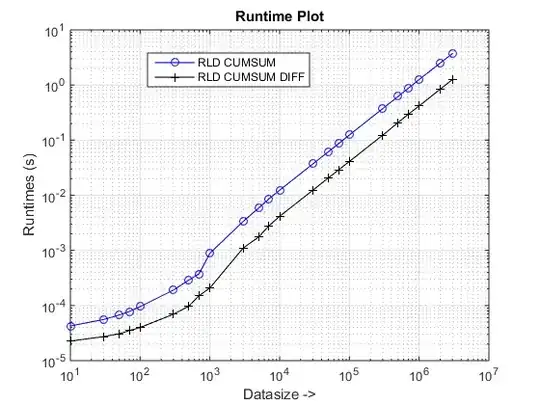

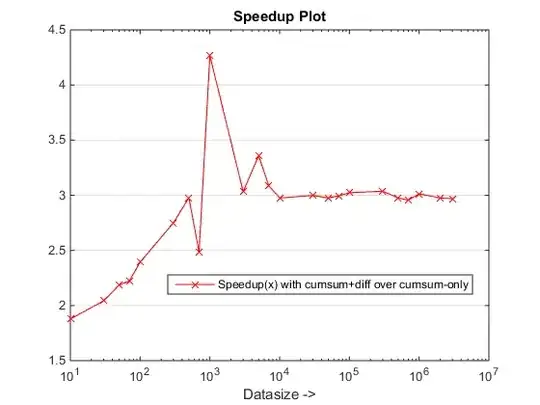

Listed next is the benchmarking code to compare runtimes and speedups for the stated cumsum+diff approach in this post over the other cumsum-only based approach on MATLAB 2014B-

datasizes = [reshape(linspace(10,70,4).'*10.^(0:4),1,[]) 10^6 2*10^6]; %

fcns = {'rld_cumsum','rld_cumsum_diff'}; % approaches to be benchmarked

for k1 = 1:numel(datasizes)

n = datasizes(k1); % Create random inputs

vals = randi(200,1,n);

runs = [5000 randi(200,1,n-1)]; % 5000 acts as an aberration

for k2 = 1:numel(fcns) % Time approaches

tsec(k2,k1) = timeit(@() feval(fcns{k2}, vals,runs), 1);

end

end

figure, % Plot runtimes

loglog(datasizes,tsec(1,:),'-bo'), hold on

loglog(datasizes,tsec(2,:),'-k+')

set(gca,'xgrid','on'),set(gca,'ygrid','on'),

xlabel('Datasize ->'), ylabel('Runtimes (s)')

legend(upper(strrep(fcns,'_',' '))),title('Runtime Plot')

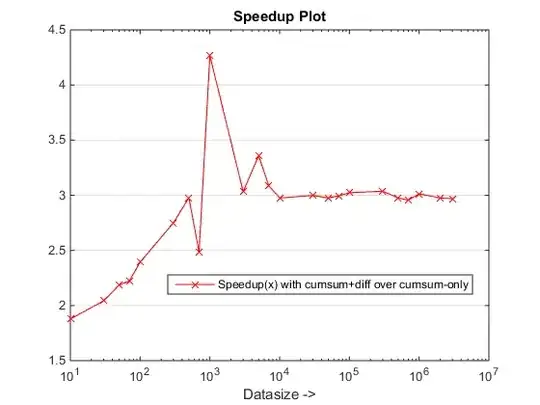

figure, % Plot speedups

semilogx(datasizes,tsec(1,:)./tsec(2,:),'-rx')

set(gca,'ygrid','on'), xlabel('Datasize ->')

legend('Speedup(x) with cumsum+diff over cumsum-only'),title('Speedup Plot')

Associated function code for rld_cumsum.m:

function out = rld_cumsum(vals,runlens)

index = zeros(1,sum(runlens));

index([1 cumsum(runlens(1:end-1))+1]) = 1;

out = vals(cumsum(index));

return;

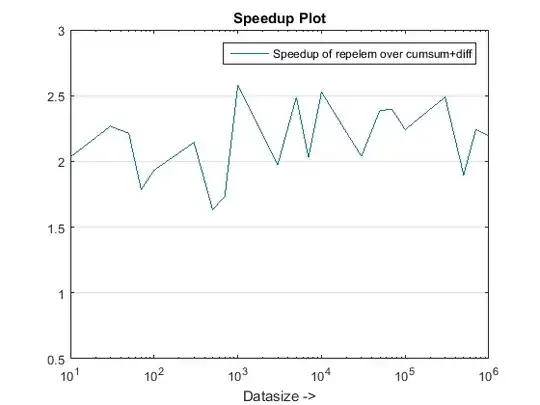

Runtime and Speedup Plots

Conclusions

The proposed approach seems to be giving us a noticeable speedup over the cumsum-only approach, which is about 3x!

Why is this new cumsum+diff based approach better than the previous cumsum-only approach?

Well, the essence of the reason lies at the final step of the cumsum-only approach that needs to map the "cumsumed" values into vals. In the new cumsum+diff based approach, we are doing diff(vals) instead for which MATLAB is processing only n elements (where n is the number of runLengths) as compared to the mapping of sum(runLengths) number of elements for the cumsum-only approach and this number must be many times more than n and therefore the noticeable speedup with this new approach!