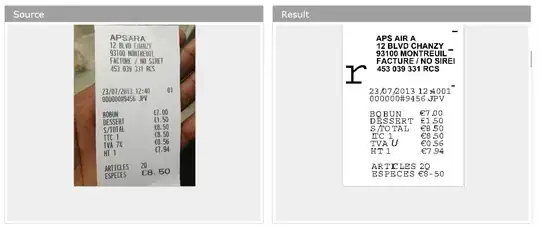

I am trying to interact with tesseract API also I am new to image processing and I am just struggling with it for last few days. I have tried simple algorithms and I have achieved around 70% accuracy.

I want its accuracy to be 90+%. The problem with the images is that they are in 72dpi. I also tried to increase the resolution but did not get good results the images which I am trying to be recognized are attached.

Any help would be appreciated and I am sorry if I asked something very basic.

EDIT

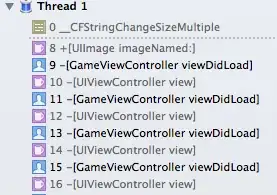

I forgot to mention that I am trying to do all the processing and recognition within 2-2.5 secs on Linux platform and method to detect the text mentioned in this answer is taking a lot of time. Also I prefer not to use command line solution but I would prefer Leptonica or OpenCV.

Most of the images are uploaded here

I have tried following things to binarize the tickets but no luck

- http://www.vincent-net.com/luc/papers/10wiley_morpho_DIAapps.pdf

- http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.193.6347&rep=rep1&type=pdf

- http://iit.demokritos.gr/~bgat/PatRec2006.pdf

- http://psych.stanford.edu/~jlm/pdfs/Sternberg67.pdf

Ticket contains

- little bit bad light

- Non-text area

- less resolution

I tried to feed the image direct to tesseract API and it is giving me 70% good results in 1 sec average. But I want to increase the accuracy in noticing the time factor So far I have tried

- Detect edges of the image

- Blob Analysis for blobs

- Binarized the ticket using adaptive thresholding

Then I tried to feed those binarized images to tesseract, the accuracy reduced to less than 50-60%, though binarized image look perfect.