For anyone using the Emgu.CV or OpenCvSharp wrappers in .NET, here's a C# implementation of Lars Schillingmann's answer:

Emgu.CV:

```csharp
using System;
using System.Drawing;
using Emgu.CV;
using Emgu.CV.CvEnum;
using Emgu.CV.Structure;

// The Get/Set helpers use unsafe code, so the project needs
// <AllowUnsafeBlocks>true</AllowUnsafeBlocks> in its .csproj.
public static class MatExtension
{
    /// <summary>
    /// <see>https://stackoverflow.com/questions/22041699/rotate-an-image-without-cropping-in-opencv-in-c/75451191#75451191</see>
    /// </summary>
    public static Mat Rotate(this Mat src, float degrees)
    {
        degrees = -degrees; // counter-clockwise to clockwise
        var center = new PointF((src.Width - 1) / 2f, (src.Height - 1) / 2f);
        using var rotationMat = new Mat();
        CvInvoke.GetRotationMatrix2D(center, degrees, 1, rotationMat);
        var boundingRect = new RotatedRect(new(), src.Size, degrees).MinAreaRect();
        rotationMat.Set(0, 2, rotationMat.Get<double>(0, 2) + (boundingRect.Width / 2f) - (src.Width / 2f));
        rotationMat.Set(1, 2, rotationMat.Get<double>(1, 2) + (boundingRect.Height / 2f) - (src.Height / 2f));
        var rotatedSrc = new Mat();
        CvInvoke.WarpAffine(src, rotatedSrc, rotationMat, boundingRect.Size);
        return rotatedSrc;
    }

    // Both helpers assume a continuous, single-channel Mat whose depth matches T.
    // The Span length is counted in elements of T (not bytes), hence Rows * Cols.
    /// <summary>
    /// <see>https://stackoverflow.com/questions/32255440/how-can-i-get-and-set-pixel-values-of-an-emgucv-mat-image/69537504#69537504</see>
    /// </summary>
    public static unsafe void Set<T>(this Mat mat, int row, int col, T value) where T : struct
    {
        var span = new Span<T>(mat.DataPointer.ToPointer(), mat.Rows * mat.Cols);
        span[(row * mat.Cols) + col] = value;
    }

    public static unsafe T Get<T>(this Mat mat, int row, int col) where T : struct =>
        new ReadOnlySpan<T>(mat.DataPointer.ToPointer(), mat.Rows * mat.Cols)[(row * mat.Cols) + col];
}
```
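The Get/Set helpers treat the Mat's pixel buffer as a flat, row-major array of `T`: element (row, col) sits at index `row * Cols + col`. As a minimal sketch of that indexing without OpenCV, assuming a continuous single-channel matrix, here a managed `byte[]` stands in for the 2x3 `CV_64F` rotation matrix (the `RowMajorSketch` class and its methods are hypothetical, not part of Emgu.CV). Note that a span's length is counted in `T` elements, not bytes:

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical illustration: a byte[] plays the role of a continuous,
// single-channel Mat buffer (e.g. the 2x3 CV_64F matrix from GetRotationMatrix2D).
public static class RowMajorSketch
{
    // Reinterpret the raw bytes as doubles; the resulting span's Length is
    // buffer.Length / sizeof(double), i.e. counted in doubles, not bytes.
    private static Span<double> AsDoubles(byte[] buffer) =>
        MemoryMarshal.Cast<byte, double>(buffer.AsSpan());

    // Element (row, col) of a row-major matrix with `cols` columns.
    public static double Get(byte[] buffer, int cols, int row, int col) =>
        AsDoubles(buffer)[(row * cols) + col];

    public static void Set(byte[] buffer, int cols, int row, int col, double value) =>
        AsDoubles(buffer)[(row * cols) + col] = value;
}
```

For example, `RowMajorSketch.Set(buffer, 3, 1, 2, 42.0)` on a 48-byte buffer writes the last of its six doubles, the same slot the translation term `(1, 2)` occupies in the rotation matrix.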

OpenCvSharp:

OpenCvSharp already provides `Mat.Set<T>()` and `Mat.Get<T>()`, which work like `mat.at<T>()` in the C++ OpenCV API, so there's no need to copy the helper methods from How can I get and set pixel values of an EmguCV Mat image?

```csharp
using OpenCvSharp;

public static class MatExtension
{
    /// <summary>
    /// <see>https://stackoverflow.com/questions/22041699/rotate-an-image-without-cropping-in-opencv-in-c/75451191#75451191</see>
    /// </summary>
    public static Mat Rotate(this Mat src, float degrees)
    {
        degrees = -degrees; // counter-clockwise to clockwise
        var center = new Point2f((src.Width - 1) / 2f, (src.Height - 1) / 2f);
        using var rotationMat = Cv2.GetRotationMatrix2D(center, degrees, 1);
        var boundingRect = new RotatedRect(new(), new(src.Width, src.Height), degrees).BoundingRect();
        rotationMat.Set(0, 2, rotationMat.Get<double>(0, 2) + (boundingRect.Width / 2f) - (src.Width / 2f));
        rotationMat.Set(1, 2, rotationMat.Get<double>(1, 2) + (boundingRect.Height / 2f) - (src.Height / 2f));
        var rotatedSrc = new Mat();
        Cv2.WarpAffine(src, rotatedSrc, rotationMat, boundingRect.Size);
        return rotatedSrc;
    }
}
```

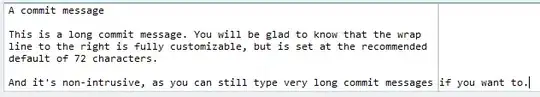
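Both versions use the same geometry as Lars Schillingmann's answer: rotate about the image center, then shift the translation column of the 2x3 matrix by half the difference between the bounding-box size and the source size, so the rotated image lands centered on the enlarged canvas. As a sketch with plain doubles (the `RotationMath` class is hypothetical, not an Emgu.CV or OpenCvSharp API), assuming the matrix formula documented for `cv::getRotationMatrix2D` with scale 1, where a positive angle is counter-clockwise. The sizes here are exact; the wrappers' integer bounding rectangles may round the corners outward, so the `Mat` returned by `Rotate` can be a pixel or so larger.

```csharp
using System;

// Hypothetical helper (not an Emgu.CV/OpenCvSharp API): reproduces the
// rotate-without-cropping geometry with plain doubles.
public static class RotationMath
{
    // Exact (unrounded) size of the axis-aligned box enclosing a w x h
    // rectangle rotated by angleDegrees.
    public static (double Width, double Height) BoundingSize(double w, double h, double angleDegrees)
    {
        double rad = angleDegrees * Math.PI / 180.0;
        double cos = Math.Abs(Math.Cos(rad));
        double sin = Math.Abs(Math.Sin(rad));
        return ((w * cos) + (h * sin), (w * sin) + (h * cos));
    }

    // 2x3 matrix following the documented getRotationMatrix2D formula
    // (scale = 1, positive angle = counter-clockwise, center = ((w-1)/2, (h-1)/2)),
    // then shifted so the result is centered in the bounding box.
    public static double[,] AdjustedRotationMatrix(double w, double h, double angleDegrees)
    {
        double rad = angleDegrees * Math.PI / 180.0;
        double alpha = Math.Cos(rad);
        double beta = Math.Sin(rad);
        double cx = (w - 1) / 2.0;
        double cy = (h - 1) / 2.0;

        var m = new double[2, 3]
        {
            { alpha, beta, ((1 - alpha) * cx) - (beta * cy) },
            { -beta, alpha, (beta * cx) + ((1 - alpha) * cy) },
        };

        // Same adjustment as the two rotationMat.Set(..., 2, ...) lines in Rotate().
        var (bw, bh) = BoundingSize(w, h, angleDegrees);
        m[0, 2] += (bw / 2.0) - (w / 2.0);
        m[1, 2] += (bh / 2.0) - (h / 2.0);
        return m;
    }

    // Forward-maps a source pixel (x, y) through the 2x3 matrix.
    public static (double X, double Y) Apply(double[,] m, double x, double y) =>
        ((m[0, 0] * x) + (m[0, 1] * y) + m[0, 2],
         (m[1, 0] * x) + (m[1, 1] * y) + m[1, 2]);
}
```

For a 4x2 image and an OpenCV angle of 90, the bounding size is 2x4 and the adjusted matrix maps source corner (3, 0) to (0, 0) and (0, 1) to (1, 3), i.e. exactly onto the edges of the new canvas instead of off-screen.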

Also, you may want to rotate `src` in place instead of returning a new `Mat`. To do that, pass `src` as the `dst` argument of `WarpAffine()` as well (see c++, opencv: Is it safe to use the same Mat for both source and destination images in filtering operation?):

```csharp
CvInvoke.WarpAffine(src, src, rotationMat, boundingRect.Size);
```

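This is called in-place mode; see https://answers.opencv.org/question/24/do-all-opencv-functions-support-in-place-mode-for-their-arguments/ and Can the OpenCV function cvtColor be used to convert a matrix in place? Keep in mind that the rotated output is usually larger than `src`, and `Mat::create` reallocates whenever the requested size differs, so OpenCV still allocates a new buffer behind the scenes; in-place mode mainly saves you from managing a second `Mat` object.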