I'm attempting to work with a depth sensor to add positional tracking to the Oculus Rift dev kit. However, I'm having trouble getting my sequence of operations to produce a usable result.

I'm starting with a 16-bit depth image, where the values sort of (but not really) correspond to millimeters. Undefined values in the image have already been set to 0.

First, I eliminate everything outside a certain near and far distance by updating a mask image to exclude it:

```cpp
cv::Mat depthMask;
// Every non-zero (valid) depth becomes non-zero in the 8-bit mask (values saturate at 255)
depthImage.convertTo(depthMask, CV_8U);

for_each_pixel<DepthImagePixel, uint8_t>(depthImage, depthMask,
    [&](DepthImagePixel & depthPixel, uint8_t & maskPixel) {
        if (!maskPixel) {
            return;
        }
        static const uint16_t depthMax = 1200;
        static const uint16_t depthMin = 200;
        // Drop anything nearer than depthMin or farther than depthMax
        if (depthPixel < depthMin || depthPixel > depthMax) {
            maskPixel = 0;
        }
    });
```
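The near/far filter above keeps depths in the inclusive range [200, 1200], which is what `cv::inRange(depthImage, cv::Scalar(200), cv::Scalar(1200), depthMask)` computes in a single call (255 inside the range, 0 outside). Because invalid pixels are already 0 and 0 < 200, they are excluded either way, so the `!maskPixel` early return shouldn't change the result of this first pass. A minimal plain-C++ sketch of the same logic, assuming a flat row-major buffer; `bandMask` is a hypothetical name:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds an 8-bit mask that is 255 where
// depthMin <= depth <= depthMax (inclusive, like cv::inRange) and 0 elsewhere.
// Invalid pixels (depth == 0) are excluded automatically whenever depthMin > 0.
std::vector<std::uint8_t> bandMask(const std::vector<std::uint16_t>& depth,
                                   std::uint16_t depthMin, std::uint16_t depthMax) {
    std::vector<std::uint8_t> mask(depth.size(), 0);
    for (std::size_t i = 0; i < depth.size(); ++i) {
        if (depth[i] >= depthMin && depth[i] <= depthMax) {
            mask[i] = 255;
        }
    }
    return mask;
}
```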

Next, since the feature I want is likely to be closer to the camera than the overall scene average, I update the mask again to exclude anything that isn't within a certain range of the mean depth:

```cpp
const float depthAverage = cv::mean(depthImage, depthMask)[0];
const uint16_t depthMax = depthAverage * 1.0;
const uint16_t depthMin = depthAverage * 0.75;

for_each_pixel<DepthImagePixel, uint8_t>(depthImage, depthMask,
    [&](DepthImagePixel & depthPixel, uint8_t & maskPixel) {
        if (!maskPixel) {
            return;
        }
        if (depthPixel < depthMin || depthPixel > depthMax) {
            maskPixel = 0;
        }
    });
```
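`cv::mean` returns the arithmetic mean of the masked pixels, which a large background region can pull away from a nearby feature. If the median is wanted instead, OpenCV has, as far as I know, no single masked-median call, so one option is to copy the masked values out and use `std::nth_element` (average linear time, no full sort). A minimal sketch assuming flat row-major buffers; `maskedMedian` is a hypothetical name:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: median depth over pixels whose mask value is non-zero.
// Returns 0 if no pixel is selected. For an even count it returns the upper
// of the two middle values (a simplification; no averaging is done).
std::uint16_t maskedMedian(const std::vector<std::uint16_t>& depth,
                           const std::vector<std::uint8_t>& mask) {
    std::vector<std::uint16_t> values;
    values.reserve(depth.size());
    for (std::size_t i = 0; i < depth.size() && i < mask.size(); ++i) {
        if (mask[i] != 0) {
            values.push_back(depth[i]);
        }
    }
    if (values.empty()) {
        return 0;
    }
    // nth_element places the middle element where a full sort would put it
    auto mid = values.begin() + static_cast<std::ptrdiff_t>(values.size() / 2);
    std::nth_element(values.begin(), mid, values.end());
    return *mid;
}
```

The returned value could then stand in for `depthAverage` when computing `depthMin` and `depthMax`.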

Finally, I zero out everything that's not in the mask, and scale the remaining values to between 10 and 255 before converting the image format to 8-bit:

```cpp
cv::Mat outsideMask;
cv::bitwise_not(depthMask, outsideMask);

// Zero out everything outside the mask
cv::subtract(depthImage, depthImage, depthImage, outsideMask);

// Within the mask, shift values so that depthMin maps to 0
cv::subtract(depthImage, cv::Scalar(depthMin), depthImage, depthMask);

double minVal, maxVal;
cv::minMaxLoc(depthImage, &minVal, &maxVal); // not used further in this snippet

// Scale [0, depthMax - depthMin] to [0, 245], then offset the masked pixels to [10, 255]
float range = depthMax - depthMin;
float scale = (float)(UINT8_MAX - 10) / range;
depthImage *= scale;
cv::add(depthImage, cv::Scalar(10), depthImage, depthMask);

depthImage.convertTo(depthImage, CV_8U);
```

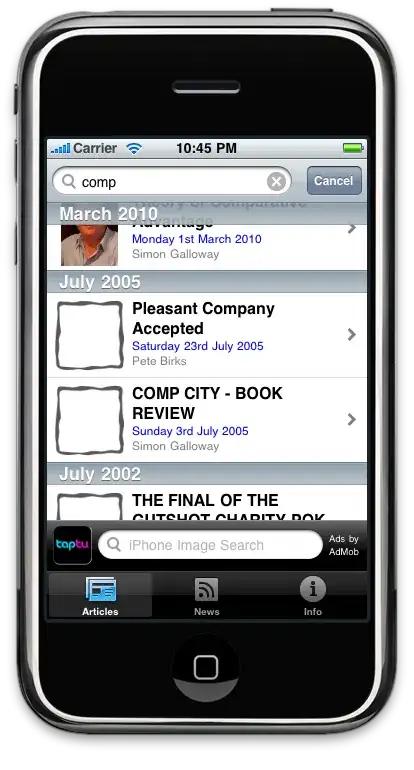

The results looks like this:

I'm fairly happy with this section of the code, since it produces clear visual features.
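The subtract/scale/add sequence above is the linear map out = 10 + (d - depthMin) * 245 / (depthMax - depthMin), which sends depthMin to 10 and depthMax to 255. `Mat::convertTo` computes saturate_cast(alpha * src + beta) in one pass, so `convertTo(dst, CV_8U, alpha, beta)` with alpha = 245.0 / (depthMax - depthMin) and beta = 10 - depthMin * alpha, followed by `dst.setTo(0, outsideMask)`, should give essentially the same 8-bit image (up to rounding) without the intermediate 16-bit roundings. A plain-C++ sketch of the per-pixel mapping; `rescaleDepth` is a hypothetical name and it assumes depthMax > depthMin:

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: maps a depth in [depthMin, depthMax] linearly onto [10, 255],
// mirroring the subtract / scale / add / convert steps. Depths outside the band
// (which the mask would have excluded) map to 0. Assumes depthMax > depthMin.
std::uint8_t rescaleDepth(std::uint16_t depth, std::uint16_t depthMin, std::uint16_t depthMax) {
    if (depth < depthMin || depth > depthMax) {
        return 0;
    }
    const double scale = (255.0 - 10.0) / (depthMax - depthMin);
    const double value = 10.0 + (depth - depthMin) * scale;
    return static_cast<std::uint8_t>(std::lround(value));
}
```

With depthMin = 400 and depthMax = 645 the scale is exactly 1, so 400 maps to 10, 500 to 110 and 645 to 255.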

I'm then applying a couple of smoothing operations to get rid of the ridiculous amount of noise from the depth camera:

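```cpp
cv::medianBlur(depthImage, depthImage, 9);

// bilateralFilter does not work in place, so filter into a separate Mat
cv::Mat blurred;
cv::bilateralFilter(depthImage, blurred, 5, 250, 250);
depthImage = blurred;

// Show the filtered depth in the blue channel of a colour debug image
cv::Mat result = cv::Mat::zeros(depthImage.size(), CV_8UC3);
cv::insertChannel(depthImage, result, 0);
```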

Again, the features look fairly clear visually, but I wonder whether they could be sharpened somehow:

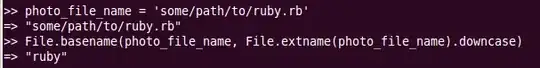
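For reference, `cv::medianBlur` replaces each pixel with the median of its ksize × ksize neighborhood, which removes isolated speckle while leaving step edges in place, something the Canny step that follows depends on. The OpenCV documentation for `cv::bilateralFilter` also notes that sigma values above about 150 have a very strong, "cartoonish" effect, so 250/250 is well into that range. A minimal plain-C++ sketch of a 3 × 3 median filter; `median3x3` is a hypothetical name, and copying border pixels unchanged is a simplification (as far as I know, `cv::medianBlur` replicates border pixels instead and supports larger apertures such as the 9 used above):

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: 3x3 median filter on a row-major 8-bit image.
// Border pixels are copied unchanged (a simplification for this sketch).
std::vector<std::uint8_t> median3x3(const std::vector<std::uint8_t>& src,
                                    std::size_t width, std::size_t height) {
    std::vector<std::uint8_t> dst(src);
    for (std::size_t y = 1; y + 1 < height; ++y) {
        for (std::size_t x = 1; x + 1 < width; ++x) {
            std::array<std::uint8_t, 9> window{};
            std::size_t k = 0;
            for (std::size_t dy = 0; dy < 3; ++dy) {
                for (std::size_t dx = 0; dx < 3; ++dx) {
                    window[k++] = src[(y + dy - 1) * width + (x + dx - 1)];
                }
            }
            // The 5th smallest of 9 values is the median
            std::nth_element(window.begin(), window.begin() + 4, window.end());
            dst[y * width + x] = window[4];
        }
    }
    return dst;
}
```

A single bright speckle in a flat region is removed, while a clean vertical step between 0 and 200 passes through unchanged, which a mean filter would smear.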

Next, I'm using Canny for edge detection:

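```cpp
cv::Mat canny_output;
{
    // Hysteresis thresholds 20/80, 3x3 Sobel aperture, L2 gradient norm
    cv::Canny(depthImage, canny_output, 20, 80, 3, true);
    // Show the edges in the green channel of the debug image
    cv::insertChannel(canny_output, result, 1);
}
```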

The lines I'm looking for are there, but they're poorly represented toward the corners.

Finally, I'm using the probabilistic Hough transform to identify lines:

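```cpp
std::vector<cv::Vec4i> lines;
cv::HoughLinesP(canny_output, lines, pixelRes, degreeRes * CV_PI / 180,
                hughThreshold, hughMinLength, hughMaxGap);

for (size_t i = 0; i < lines.size(); i++)
{
    cv::Vec4i l = lines[i];
    // Single parentheses: a((l[0], l[1])) would be a comma expression
    // evaluating to l[1] alone, giving the vector (l[1], l[1])
    glm::vec2 a(l[0], l[1]);
    glm::vec2 b(l[2], l[3]);
    float length = glm::length(a - b); // not yet used for filtering
    // Draw each detected segment in red on the debug image
    cv::line(result, cv::Point(l[0], l[1]), cv::Point(l[2], l[3]),
             cv::Scalar(0, 0, 255), 3, cv::LINE_AA); // CV_AA in OpenCV 2.x
}
```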

This produces the following image.
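Since the rectangle can appear at any rotation, one option (an assumption, not something the pipeline above already does) is to post-filter the `cv::HoughLinesP` segments by length and look for pairs that are roughly perpendicular, rather than relying on the Hough parameters alone. A minimal sketch of that geometry, using a hypothetical `Segment` struct in place of the `cv::Vec4i` (x1, y1, x2, y2) that `HoughLinesP` returns; all function names are illustrative:

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for cv::Vec4i: segment endpoints (x1, y1) and (x2, y2).
struct Segment { int x1, y1, x2, y2; };

double segmentLength(const Segment& s) {
    return std::hypot(double(s.x2 - s.x1), double(s.y2 - s.y1));
}

// Undirected orientation in degrees, folded into [0, 180).
double segmentAngleDeg(const Segment& s) {
    const double pi = std::acos(-1.0);
    double deg = std::atan2(double(s.y2 - s.y1), double(s.x2 - s.x1)) * 180.0 / pi;
    if (deg < 0.0) deg += 180.0;
    if (deg >= 180.0) deg -= 180.0;
    return deg;
}

// True if two segments are within toleranceDeg of perpendicular,
// regardless of how the rectangle is rotated in the image.
bool roughlyPerpendicular(const Segment& a, const Segment& b, double toleranceDeg) {
    double diff = std::fabs(segmentAngleDeg(a) - segmentAngleDeg(b)); // in [0, 180)
    if (diff > 90.0) diff = 180.0 - diff;                               // fold to [0, 90]
    return std::fabs(diff - 90.0) <= toleranceDeg;
}
```

Short segments could be discarded with `segmentLength` first, and the remaining perpendicular pairs treated as rectangle-corner candidates.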

At this point I feel like I've gone off the rails: I can't find a set of Hough parameters that produces a reasonable number of candidate lines in which to search for my shape, and I'm not sure whether I should be fiddling with Hough or improving the outputs of the earlier steps.

Is there a good way to objectively validate my results at each stage, as opposed to just fiddling with the input values until I think it 'looks good'? Is there a better approach to finding the rectangle in the starting image, given that it won't necessarily be oriented in a particular direction?