Thread pool: how it works, when it is used, and who uses it

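First off, when we run a Node application on a computer, a process is started alongside the other processes on that machine. This is the Node process, and it keeps running as long as it has work to do (a server, for example, runs until you kill it). Inside this process, our JavaScript code runs on one thread, and that is our so-called single thread.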

Running everything on a single thread makes it easy to block a Node application, but it is also one of the unique features that Node.js brings to the table. So, again, if you run your Node application, it runs in just a single thread, no matter whether you have one user or a million users accessing your application at the same time.

So let's understand exactly what happens in the single thread of Node.js when you start your Node application. First, the program is initialized. Then all the top-level code is executed, meaning all the code that is not inside any callback function (remember, the code inside callback functions is executed later, by the event loop).

After that, the code of all the required modules is executed and all the callbacks are registered. Finally, the event loop starts for your application.
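To make the startup order concrete, here is a minimal sketch. The `order` array and its labels are made up for illustration; the only thing it relies on is that a `setTimeout` callback is registered during top-level execution and runs later, once the event loop has started.

```javascript
// Records the order in which the pieces of this script run.
const order = [];

order.push('top-level: start');

// This only registers the callback. It does not run yet.
setTimeout(() => {
  order.push('callback: timer');
  console.log(order.join(' -> '));
}, 0);

// Still top-level code, so it runs before the timer callback above.
order.push('top-level: end');
```

Even with a delay of 0, the timer callback is pushed last, because all the top-level code has to finish before the event loop gets a chance to run any callback.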

As we discussed before, all the callback functions and the code inside them execute in the event loop. In the event loop, the work is distributed across different phases. Anyway, I'm not going to discuss the event loop here.

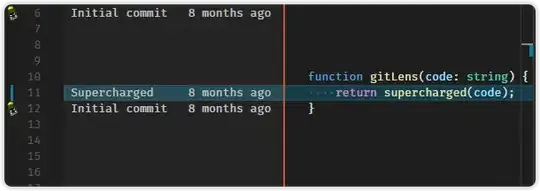

For the sake of a better understanding of the thread pool, I am asking you to imagine that, in the event loop, the code inside one callback function executes only after the code inside another callback function has finished. Now, if some of those tasks are too heavy, they block our Node.js single thread. That's where the thread pool comes in, which, just like the event loop, is provided to Node.js by the libuv library.

So the thread pool is not part of Node.js itself. It's provided by libuv so that heavy work can be offloaded: libuv executes that work on its own threads and, once it's done, hands the result back to the event loop, which then runs the matching callback.
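Here is a small sketch of the difference between blocking the single thread and offloading to the thread pool, using the built-in `crypto` module. The function names `hashBlocking` and `hashOffloaded`, and the password, salt, and iteration count, are hypothetical values picked only to make the hash slow enough to notice.

```javascript
const crypto = require('crypto');

// Hypothetical parameters, chosen only to make the hash noticeably slow.
const PASSWORD = 'secret';
const SALT = 'salt';
const ITERATIONS = 100000;

// Blocking version: the main thread does the hashing itself, so no timer
// or I/O callback can run until this function returns.
function hashBlocking() {
  return crypto
    .pbkdf2Sync(PASSWORD, SALT, ITERATIONS, 64, 'sha512')
    .toString('hex');
}

// Offloaded version: the hashing runs on a thread-pool thread, and the
// callback is invoked on the main thread once the result is ready.
function hashOffloaded(callback) {
  crypto.pbkdf2(PASSWORD, SALT, ITERATIONS, 64, 'sha512', (err, key) => {
    if (err) return callback(err);
    callback(null, key.toString('hex'));
  });
}

const start = Date.now();

setTimeout(() => {
  console.log(`timer fired after ${Date.now() - start} ms`);
}, 0);

hashOffloaded((err, hex) => {
  if (err) throw err;
  console.log(`offloaded hash ready after ${Date.now() - start} ms`);
});
```

With `hashOffloaded`, the timer should fire almost immediately while the hash is still being computed. If you call `hashBlocking()` at the top level instead, the timer has to wait until the hash is finished, because the single thread is busy the whole time.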

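The thread pool gives us four additional threads by default, and those are completely separate from the main single thread. We can configure the size through the UV_THREADPOOL_SIZE environment variable: the maximum was 128 in older libuv releases and has been 1024 since libuv 1.30.0.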

Together, these threads form the thread pool, and the event loop can then automatically offload heavy tasks to it.
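A rough way to observe the pool size is to schedule more heavy tasks than there are threads and look at when each one finishes. The sketch below assumes the pool reads `UV_THREADPOOL_SIZE` when it is first used, so setting it at the very top of the script usually works; setting it in the shell before starting Node is the more reliable option. The helper name `timeHashes` and the hashing parameters are made up for this example.

```javascript
// Assumption: the pool size is read the first time the pool is used, so
// this must run before any thread-pool work is scheduled.
process.env.UV_THREADPOOL_SIZE = process.env.UV_THREADPOOL_SIZE || '4';

const crypto = require('crypto');

// Schedules `count` hashes at once and resolves with the number of
// milliseconds after which each one finished.
function timeHashes(count) {
  const start = Date.now();
  const jobs = [];
  for (let i = 0; i < count; i++) {
    jobs.push(
      new Promise((resolve, reject) => {
        crypto.pbkdf2('secret', 'salt', 200000, 64, 'sha512', (err) => {
          if (err) return reject(err);
          resolve(Date.now() - start);
        });
      })
    );
  }
  return Promise.all(jobs);
}

timeHashes(5).then((times) => console.log(times));
```

With the default of four threads on a machine with at least four cores, the first four times are typically close together and the fifth is noticeably later, since it has to wait for a free thread. The exact numbers depend on your hardware, so treat this as an experiment rather than a benchmark.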

The fun part is all this happens automatically behind the scenes. It's not us developers who decide what goes to the thread pool and what doesn't.

Many tasks go to the thread pool, such as:

-> Asynchronous operations dealing with files

-> Asynchronous cryptography work, like hashing passwords

-> Asynchronous compression

-> DNS lookups done through dns.lookup()